Using 21st Century Science to Improve Risk-Related Evaluations (2017)

Summary

At the start of the 21st century, several federal agencies and organizations began to recognize the potential of improving chemical risk assessment by using the scientific and technological advances in biology and other related fields that were allowing the biological basis of disease to be better understood. Substantial increases in computational power and advances in analytical and integrative methods made incorporating the emerging evidence into risk assessment a possibility. Strategies were developed to use the advances to improve assessment of the effects of chemicals or other stressors that could potentially affect human health. Building on those efforts, the National Research Council (NRC) report Toxicity Testing in the 21st Century: A Vision and a Strategy1 envisioned a future in which toxicology relied primarily on high-throughput in vitro assays and computational models based on human biology to evaluate potential adverse effects of chemical exposures. Similarly, the NRC report Exposure Science in the 21st Century: A Vision and a Strategy2 articulated a long-term vision for exposure science motivated by the advances in analytical methods, sensor systems, molecular technologies, informatics, and computational modeling. That vision was to inspire a transformational change in the breadth and depth of exposure assessment that would improve integration with and responsiveness to toxicology and epidemiology.

Since release of those two reports, government collaborations have been formed, large-scale US and international programs have been initiated, and data are being generated from government, industry, and academic laboratories at an overwhelming pace. It is anticipated that the data being generated will inform risk assessment and support decision-making to improve public health and the environment. In the meantime, questions have arisen as to whether or how the data now being generated can be used to improve risk-based decision-making. Because several federal agencies recognize the potential value of such data in helping them to address their many challenging tasks, the US Environmental Protection Agency (EPA), US Food and Drug Administration (FDA), National Center for Advancing Translational Sciences (NCATS), and National Institute of Environmental Health Sciences (NIEHS) asked the National Academies of Sciences, Engineering, and Medicine to recommend the best ways to incorporate the emerging science into risk-based evaluations.3 As a result of the request, the National Academies convened the Committee on Incorporating 21st Century Science into Risk-Based Evaluations, which prepared this report.

SCIENTIFIC ADVANCES

To approach its task, the committee assessed scientific and technological advances in exposure science and toxicology that could be integrated into and used to improve any of the four elements of risk assessment—hazard identification, dose–response assessment, exposure assessment, and risk characterization. Although the National Academies has not been asked to produce a report on epidemiology comparable with its Tox21 and ES21 reports, epidemiological research is also undergoing a transformation. Because it plays a critical role in risk assessment by providing human evidence on adverse effects of chemical and other exposures, the committee assessed advances in epidemiology as part of its charge. The committee highlights here some of the advances, challenges, and needs in each field in the context of risk assessment. The committee’s report provides specific recommendations to address the challenges. Overall, a common theme is the need for a multidisciplinary approach. Exposure scientists, toxicologists, epidemiologists, and scientists in other disciplines need to collaborate closely to ensure that the full potential of 21st century science is realized to help to solve the complex environmental and public-health problems that society faces.

___________________

1 Referred to hereafter as the Tox21 report.

2 Referred to hereafter as the ES21 report.

3 The verbatim statement of task is provided in Chapter 1 of the committee’s report.

Exposure Science

A primary objective for improving exposure science is to build confidence in the exposure estimates used to support risk-based decision-making by enhancing quality, expanding coverage, and reducing uncertainty. The many scientific and technological advances that are transforming exposure science should help to meet that objective. Some of the endeavors that the committee considered promising for advancing that objective and in which progress has been made since the ES21 report are highlighted below.

- Remote sensing, personal sensors, and other sampling techniques. Remote sensing enhances the capacity to assess human and ecological exposures by helping to fill gaps in time and place left by traditional ground-based monitoring systems. Advances in passive sampling techniques and personal sensors offer unparalleled opportunities to characterize individual exposures, particularly in vulnerable populations. If remote sensing and personal sensors can be combined with global positioning systems, exposure and human-activity data can be linked to provide a more complete understanding of human exposures.

- Computational exposure tools. Because exposure-measurement data on many agents are not available, recent advances in computational tools for exposure science are expected to play a crucial role in most aspects of exposure estimation for risk assessments, not just high-throughput applications. However, improving the scope and quality of data that are needed to develop parameters for these tools is critically important because without such data the tools have greater uncertainty and less applicability. Comparisons of calculated and measured exposures are required to characterize uncertainties in the computational tools and their input parameters.

- Targeted and nontargeted analyses. Advances in two complementary approaches in analytical chemistry are improving the accuracy and breadth of human and ecological exposure characterizations and are expanding opportunities to investigate exposure–disease relationships. First, targeted analyses focus on identifying selected chemicals for which standards and methods are available. Improved analytical methods and expanded chemical-identification libraries are increasing opportunities for such analyses. Second, nontargeted analyses offer the ability to survey more broadly the presence of all chemicals in the environment and in biofluids regardless of whether standards and methods are available. Nontargeted analyses reveal the presence of numerous substances whose identities can be determined after an initial analysis by using cheminformatic approaches or advanced or novel analytical techniques.

- -Omics technologies. -Omics technologies can measure chemical or biological exposures directly or identify biomarkers of exposure or response that allow one to infer exposure on the basis of a mechanistic understanding of biological responses. These emerging technologies and data streams will complement other analyses, such as targeted and nontargeted analyses, and lead to a more comprehensive understanding of the exposure-to-outcome continuum. Identifying biomarkers of exposure to individual chemicals or chemical classes within the complex exposures of human populations remains a considerable challenge for these tools.

- Exposure matrices for life-span research. Responding to the need to improve the characterization of fetal exposures to chemicals, researchers have turned to new biological matrices, such as teeth, hair, nails, placental tissue, and meconium. The growth properties (the sequential deposition or addition of tissue with accumulation of chemicals) and availability of the biospecimens offer the opportunity to extract a record of exposure. The question that needs to be addressed now is how concentrations in these matrices are related to and can be integrated with measures of exposure that have been traditionally used to assess chemical toxicity or risk.

- Physiologically based pharmacokinetic (PBPK) models. PBPK models are being applied more regularly to support aggregate (multiroute) exposure assessment, to reconstruct exposure from biomonitoring data, to translate exposures between experimental systems, and to understand the relationship between biochemical and physiological variability and variability in population response. An important focus has been on the development of PBPK models for translating exposures between test systems and human-exposure scenarios, development that has been driven by the rapidly expanding use of high-throughput in vitro assays to characterize the bioactivity of chemicals and other materials. That research will remain critical as regulatory agencies, industry, and other organizations increase their dependence on in vitro systems.
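The comparison of calculated and measured exposures mentioned under computational exposure tools can be sketched in a few lines. The example below is a minimal illustration, not a method taken from the report: it assumes paired model predictions and measurements in the same units, and it summarizes disagreement on a log10 scale, which is a common but not mandatory choice. The function names and all numbers are hypothetical.

```python
import math

def log10_errors(predicted, measured):
    """Return log10(predicted) - log10(measured) for each paired value."""
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    if any(v <= 0 for v in list(predicted) + list(measured)):
        raise ValueError("exposures must be positive to take logarithms")
    return [math.log10(p) - math.log10(m) for p, m in zip(predicted, measured)]

def summarize_agreement(predicted, measured):
    """Summarize model-versus-measurement agreement on a log10 scale."""
    errors = log10_errors(predicted, measured)
    n = len(errors)
    return {
        # Positive bias means the model tends to overpredict.
        "mean_log10_bias": sum(errors) / n,
        # Typical size of the disagreement, in orders of magnitude.
        "log10_rmse": math.sqrt(sum(e * e for e in errors) / n),
    }

# Hypothetical paired values (for example, mg/kg/day); not real data.
PREDICTED = [0.01, 0.1, 1.0, 10.0]
MEASURED = [0.1, 0.1, 0.1, 10.0]
SUMMARY = summarize_agreement(PREDICTED, MEASURED)
```

A summary of this kind says how far, in orders of magnitude, a tool's estimates tend to sit from measurements; it does not by itself separate uncertainty in the tool from uncertainty in its input parameters.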

The emerging technologies and data streams offer great promise for advancing exposure science and improving and refining exposure measurements and assessment. However, various challenges will need to be addressed. A few are highlighted here.

- Expanding and coordinating exposure-science infrastructure. A broad spectrum of disciplines and institutions are participating in advancing exposure methods, measurements, and models. Given the number and diversity of participants in exposure science, the information is mostly fragmented, incompletely organized, and in some cases not readily available or accessible. Thus, an infrastructure is needed to improve the organization and coordination of the existing and evolving components for exposure science and ultimately to improve exposure assessment. Infrastructure development should include creating or expanding databases that contain information on chemical quantities in and chemical release rates from products and materials, on chemical properties and on processes, and analytical features that can be used in chemical identification.

- Aligning environmental and test-system exposures. Aligning information on environmental exposures with information obtained from experimental systems is a critical aspect of risk-based evaluation. Concentrations in test-system components need to be quantified by measurement, which is preferred, or by reliable estimation methods. Knowledge of physical processes, such as binding to plastic and volatilization, and of biological processes, such as metabolism, needs to be improved.

- Integrating exposure information. Integration and appropriate application of exposure data on environmental media, biomonitoring samples, conventional samples, and emerging matrices constitute a scientific, engineering, and big-data challenge. The committee emphasizes that integration of measured and modeled data is a key step in developing coherent exposure narratives, in evaluating data concordance, and ultimately in determining confidence in an exposure assessment. New multidisciplinary projects are needed to integrate exposure data and to gain experience that can be used to guide data collection and integration of conventional and emerging data streams.

Toxicology

The decade since publication of the Tox21 report has seen continued advances in an array of technologies that can be used to understand human biology and disease at the molecular level. Technologies are now available to profile the transcriptome, epigenome, proteome, and metabolome. There are large banks of immortalized cells collected from various populations to use for toxicological research; large compilations of publicly available biological data that can be mined to develop hypotheses about relationships between chemicals, genes, and diseases; and genetically diverse mouse strains and alternative species that can be used for toxicological research. Highlighted below are some assays, models, and approaches for predicting biological responses that have seen rapid advances over the last decade; they are arranged by increasing level of biological organization.

- Probing interactions with biological molecules. Chemical interactions with specific receptors, enzymes, or other discrete proteins and nucleic acids have long been known to have adverse effects on biological systems, and development of in vitro assays that probe chemical interactions with cellular components has been rapid, driven partly by the need to reduce high attrition rates in drug development. The assays can provide reliable and valid results with high agreement among laboratories and can be applied in low-, medium-, and high-throughput formats. Computational models have been developed to predict activity of chemical interactions with protein targets, and research to improve the prediction of protein–chemical interactions continues.

- Detecting cellular response. Cell cultures can be used to evaluate a number of cellular processes and responses, including receptor binding, gene activation, cell proliferation, mitochondrial dysfunction, morphological changes, cellular stress, genotoxicity, and cytotoxicity. Simultaneous measurements of multiple toxic responses are also possible with high-content imaging and other novel techniques. Furthermore, cell cultures can be scaled to a high-throughput format and can be derived from genetically different populations so that aspects of variability in response to chemical exposure that depend on genetic differences can be studied. In addition to cell-based assays, numerous mathematical models and systems-biology tools have been advanced to describe various aspects of cell function and response.

- Investigating effects at higher levels of biological organization. The last decade has seen advances in engineered three-dimensional (3-D) models of tissues. Organotypic or organ-on-a-chip models are types of 3-D models in which two or more cell types are combined in an arrangement intended to mimic an in vivo tissue and, therefore, recapitulate at least some of the physiological responses that the tissue or organ exhibits in vivo. NCATS, for example, has a number of efforts in this field. Although the models are promising, they are not yet ready for inclusion in risk assessment. In addition to cell cultures, computational systems-biology models have been developed to simulate tissue-level response. EPA, for example, has developed virtual-tissue models for the embryo and liver. Virtual-tissue models can potentially help in conceptualizing and integrating current knowledge about the factors that affect key pathways and the degree to which pathways must be perturbed to activate early and intermediate responses in human tissues and, when more fully developed, in supporting risk assessments.

- Predicting organism and population response. Animal studies remain an important tool in risk assessment, but scientific advances are providing opportunities to enhance the utility of whole-animal testing. Gene-editing technologies, for example, have led to the creation of transgenic rodents that can be used to investigate specific questions, such as those related to susceptibility or gene–environment interactions. Genetically diverse rodent strains have provided another approach for addressing questions related to interindividual sensitivity to toxicants. Combining transgenic or genetically diverse rodent strains with -omics and other emerging technologies can increase the information gained from whole-animal testing alone. Those targeted studies can help to address knowledge gaps in risk assessment and can link in vitro observations to molecular, cellular, or physiological effects in the whole animal. In addition to the mammalian species, scientific advances have made some alternative species, such as the nematode Caenorhabditis elegans, the fruit fly Drosophila melanogaster, and the zebrafish Danio rerio, useful animal models for hazard identification and investigation of biological mechanisms.

The assays, models, and tools noted above hold great promise in the evolution of toxicology, but there are important technical and research challenges, a few of which are highlighted below.

- Accounting for metabolic capacity in assays. Current in vitro assays generally have little or no metabolic capability, and this aspect potentially constrains their usefulness in evaluating chemical exposures that are representative of human exposures that could lead to toxicity. Research to address the metabolic-capacity issues needs to have high priority, and formalized approaches need to be developed to characterize the metabolic competence of assays, to determine for which assays it is not an essential consideration, and to account for the toxicity of metabolites appropriately.

- Understanding and addressing other limitations of cell systems. Cell cultures can be extremely sensitive to environmental conditions, responses can depend on the cell type used, and current assays can evaluate only chemicals that have particular properties. Research is needed to determine the breadth of cell types required to capture toxicity adequately; cell batches need to be characterized sufficiently before, during, and after experimentation; and practical guidance will need to be developed for cell systems regarding their range of applicability and for describing the uncertainty of test results.

- Addressing biological coverage. Developing a comprehensive battery of in vitro assays that covers the important biological responses to the chemical exposures that contribute to adverse health effects is a considerable challenge. In addition, most assays used in the federal government high-throughput testing programs were developed by the pharmaceutical industry and were not designed to cover the full array of biological response. As emphasized in the Tox21 report, research is needed to determine the extent of relevant mechanisms that lead to adverse responses in humans and to determine which experimental models are needed to cover these mechanisms adequately. Using -omics technologies and targeted testing approaches with transgenic and genetically diverse rodent species and alternative species will address knowledge gaps more comprehensively.

When one considers the progress in implementing the Tox21 vision and the current challenges, it is important to remember that many assays, models, and tools were not developed with risk-assessment applications as a primary objective. Thus, understanding of how best to apply them and interpret the data is evolving. The usefulness or applicability of various in vitro assays will need to be determined by continued data generation and critical analysis, and some assays that are highly effective for some purposes, such as pharmaceutical development, might not be as useful for risk assessment of commodity chemicals or environmental pollutants. It will most likely be necessary to adapt current assays or develop new assays specifically intended for risk-assessment purposes.

Epidemiology

The scientific advances that have propelled exposure science and toxicology onto new paths have also substantially influenced the direction of epidemiological studies and research. The factors reshaping epidemiology in the 21st century include expansion of the interdisciplinary nature of the field; the increasing complexity of scientific inquiry; emergence of new data sources and technologies for data generation, such as new medical and environmental data sources and -omics technologies; advances in exposure characterization; and increasing demands to integrate new knowledge from basic, clinical, and population sciences. There is also a movement to register past and present datasets so that on particular issues datasets can be identified and combined.

One of the most important developments has been the emergence of the -omics technologies and their incorporation into epidemiological research. -Omics technologies have substantially transformed epidemiological research and advanced the paradigm of molecular epidemiology, which focuses on underlying biology (pathogenesis) rather than on empirical observations alone. The utility of -omics technologies in epidemiological research is already clear and well exemplified by the many studies that have incorporated genomics. For example, the genetic basis of disease has been explored in genome-wide association studies in which the genomic markers in people who have and do not have a disease or condition of interest are compared. The -omics technologies that have been applied in epidemiological research, however, have now expanded beyond genomics to include epigenomics, proteomics, transcriptomics, and metabolomics. New studies are being designed with the intent of prospectively storing samples that can be used for existing and future -omics technologies. Thus, obtaining data from human population studies that are parallel to data obtained from in vitro and in vivo assays or studies is already possible and potentially can help in harmonizing comparisons of exposure and dose. Furthermore, -omics technologies have the potential for providing a suite of new biomarkers for hazard identification and risk assessment.

Like exposure science and toxicology, epidemiology faces challenges in incorporating 21st century science into its practice. -Omics assays can generate extremely large datasets that need to be managed and curated in ways that facilitate access and analysis. Databases that can accommodate the large datasets, support analyses for multiple purposes, and foster data-sharing need to be developed. Powerful and robust statistical techniques also are required to analyze all the data. And standard ways to describe the data are needed so that data can be harmonized among investigative groups and internationally.

The landscape of epidemiological research is changing rapidly as the focus shifts from fixed, specific cohorts, such as those in the Nurses’ Health Study,4 to large cohorts enrolled from health-care organizations or other resources that incorporate biospecimen banks and use health-care records to characterize participants and to track outcomes. Such studies offer large samples but will need new approaches to estimate exposures that will work in this context. Thus, there will be a need for close collaboration with exposure scientists to ensure that exposure data are generated in the best and most comprehensive way possible. Furthermore, various biospecimens are being collected and stored with the underlying assumption that they will be useful in future studies; researchers involved in such future-looking collections need to seek input from the scientists who are developing new assays so that the biospecimens can be collected and stored in a way that maximizes the potential for their future use. All those concerns emphasize the need to expand the multidisciplinary teams involved in epidemiological research.

APPLICATIONS OF 21st CENTURY SCIENCE

The scientific and technological advances described above and in further detail in this report offer opportunities to improve the assessment or characterization of risk for the purpose of environmental and public-health decision-making. The committee highlights below several activities—priority-setting, chemical assessment, site-specific assessment, and assessments of new chemistries—that could benefit from the incorporation of 21st century science. Case studies of practical applications are provided in Appendixes B–D.

Priority-setting has been seen as a principal initial application for 21st century science. High-throughput screening programs have produced toxicity data on thousands of chemicals, and high-throughput methods have provided quantitative exposure estimates. Several methods have been proposed for priority-setting, including risk-based approaches that use a combination of the high-throughput exposure and hazard information to calculate margins of exposure (differences between toxicity and exposure metrics). For that approach, chemicals that have a small margin of exposure would be seen as having high priority for further testing and assessment.
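The risk-based ranking described above can be illustrated with a short sketch. It assumes that each chemical has one toxicity metric (for example, a bioactive dose) and one exposure estimate in the same units, and it expresses the margin of exposure as the difference of the two on a log10 scale, which is the same as the log of their ratio. The chemical names, the numbers, and the function names are hypothetical placeholders, not values or methods from any screening program.

```python
import math

# Hypothetical inputs: (chemical, toxicity metric, exposure estimate),
# both in the same units (for example, mg/kg/day). Not real data.
CHEMICALS = [
    ("chem_A", 10.0, 0.001),
    ("chem_B", 0.5, 0.01),
    ("chem_C", 100.0, 0.0001),
]

def log_margin_of_exposure(toxicity_dose, exposure):
    """Return log10(toxicity_dose) - log10(exposure)."""
    if toxicity_dose <= 0 or exposure <= 0:
        raise ValueError("doses must be positive")
    return math.log10(toxicity_dose) - math.log10(exposure)

def rank_by_priority(chemicals):
    """Order chemicals so the smallest margin (highest priority) comes first."""
    scored = [
        (name, log_margin_of_exposure(tox, exp))
        for name, tox, exp in chemicals
    ]
    return sorted(scored, key=lambda pair: pair[1])

RANKED = rank_by_priority(CHEMICALS)
for name, margin in RANKED:
    print(f"{name}: log10 margin of exposure = {margin:.2f}")
```

In this toy ranking the chemical whose exposure estimate lies closest to its toxicity metric is listed first. A real application would also need to carry the uncertainty in both metrics through the ranking.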

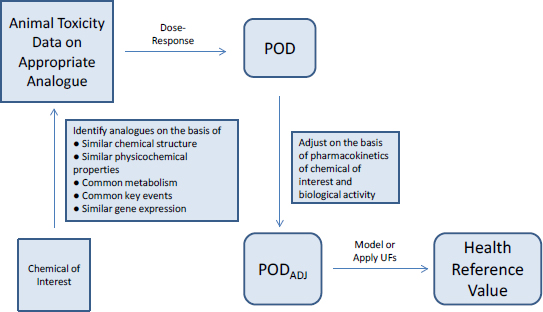

Chemical assessment is another activity in which the committee sees great potential for application of 21st century science. Chemical assessments encompass a broad array of analyses. Some cover chemicals that have a substantial database for decision-making, and for these assessments scientific and technical advances can be used to reduce uncertainties around key issues and to address unanswered questions. Many assessments, however, cover chemicals on which there are few data to use in decision-making, and for these assessments the committee finds an especially promising application for 21st century science. One approach for evaluating data-poor chemicals is to use toxicity data on well-tested chemicals (analogues) that are similar to the chemicals of interest in their structure, metabolism, or biological activity in a process known as read-across (see Figure S-1). The assumption is that a chemical of interest and its analogues are metabolized to common or biologically similar metabolites or that they are sufficiently similar in structure to have the same or similar biological activity. The method is facilitated by having a comprehensive database of toxicity data that is searchable by curated and annotated chemical structures and by using a consistent decision process for selecting suitable analogues. The approach illustrated in Figure S-1 can be combined with high-throughput in vitro assays, such as gene-expression analysis, or possibly with a targeted in vivo study to allow better selection of the analogues to ensure that the biological activities of a chemical of interest and its analogues are comparable. The committee notes that computational exposure assessment, which includes predictive fate and transport modeling, is an important complement to the approach described and can provide information on exposure potential, environmental persistence, and likelihood of bioaccumulation.
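One step of read-across, selecting analogues by structural similarity, can be sketched as follows. This is a simplified illustration under stated assumptions: each chemical is reduced to a set of structural feature labels, similarity is the Jaccard (Tanimoto) coefficient on those sets, and a fixed threshold decides which candidates are kept. The feature labels, the threshold of 0.5, and the candidate names are hypothetical; the decision process illustrated in Figure S-1 also considers metabolism and biological activity, which this sketch does not.

```python
def jaccard_similarity(features_a, features_b):
    """Return |A & B| / |A | B| for two collections of feature labels."""
    a, b = set(features_a), set(features_b)
    if not a and not b:
        return 0.0  # no features on either side: treat as not comparable
    return len(a & b) / len(a | b)

def select_analogues(target_features, candidates, threshold=0.5):
    """Return (name, similarity) pairs at or above threshold, best first."""
    scored = [
        (name, jaccard_similarity(target_features, features))
        for name, features in candidates.items()
    ]
    kept = [(name, score) for name, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)

# Hypothetical data-poor chemical of interest and well-tested candidates.
TARGET = {"aromatic_ring", "ester", "alkyl_chain", "hydroxyl"}
CANDIDATES = {
    "analogue_1": {"aromatic_ring", "ester", "alkyl_chain"},
    "analogue_2": {"aromatic_ring", "nitro"},
    "analogue_3": {"aromatic_ring", "ester", "alkyl_chain", "hydroxyl", "chloro"},
}
ANALOGUES = select_analogues(TARGET, CANDIDATES)
```

Toxicity data on the retained analogues would then be reviewed by an assessor; a similarity score only narrows the candidate list and does not establish that biological activities are comparable.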

Site-specific assessment represents another application for which 21st century science can play an important role. Understanding the risks associated with a chemical spill or the extent to which a hazardous-waste site needs to be remediated depends on understanding exposures to various chemicals and their toxicity. The assessment problem contains three elements (identifying and quantifying chemicals present at the site, characterizing their toxicity, and characterizing the toxicity of chemical mixtures), and the advances described in this report can address each element. First, targeted analytical-chemistry approaches can identify and quantify chemicals for which standards are available, and nontargeted analyses can help to assign provisional identities to previously unidentified chemicals. Second, analogue-based methods coupled with high-throughput or high-content screening methods have the potential to characterize the toxicity of data-poor chemicals. Third, high-throughput screening methods can provide information on mechanisms that can be useful in determining whether mixture components might act via a common mechanism, affect the same organ, or cause the same outcome and thus should be considered as posing a cumulative risk. High-throughput methods can also be used to assess the toxicity of mixtures that are present at specific sites empirically rather than assessing individual chemicals.

___________________

4 The Nurses’ Health Study is a prospective study that has followed a large cohort of women over many decades to identify risk factors for major chronic diseases.

Assessment of new chemistries is similar to the chemical assessment described above except that it typically involves new molecules on which there are no toxicity data and that might not have close analogues. Here, modern in vitro toxicology methods could have great utility by providing guidance on which molecular features are associated with greater or less toxicity and by identifying chemicals that do not affect biological pathways that are known to be relevant for toxicity. Modern exposure-science methods might also help to identify chemicals that have the highest potential for widespread environmental or human exposure and for bioaccumulation.

VALIDATION

Before new assays, models, or test systems can be used in regulatory-decision contexts, it is expected and for some purposes legally required that their relevance, reliability, and fitness for purpose be established and documented. That activity has evolved into elaborate processes that are commonly referred to as validation of alternative methods. One critical issue is that current processes for validation cannot match the pace of development of new assays, models, and test systems, and many have argued that validation processes need to evolve. Important elements of the validation process that need to be addressed include finding appropriate comparators for enabling fit-for-purpose validation of new test methods, clearly defining assay utility and how assay data should be interpreted, establishing performance standards for assays and clear reporting standards for testing methods, and determining how to validate batteries of assays that might be used to replace toxicity tests. The committee discusses those challenges further and offers some recommendations in Chapter 6.

A NEW DIRECTION FOR RISK ASSESSMENT AND THE CHALLENGES IT POSES

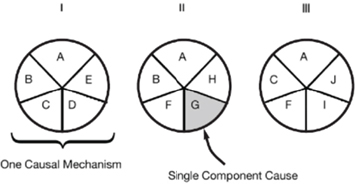

The advances in exposure science, toxicology, and epidemiology described in this report support a new direction for risk assessment, one based on biological pathways and processes rather than on observation of apical responses and one incorporating the more comprehensive exposure information emerging from new tools and approaches in exposure science. The exposure aspect of the new direction focuses on estimating or predicting internal and external exposures to multiple chemicals and stressors, characterizing human variability in those exposures, providing exposure data that can inform toxicity testing, and translating exposures between test systems and humans. The toxicology and epidemiology elements of the new direction focus on the multifactorial and nonspecific nature of disease causation; that is, stressors from multiple sources can contribute to a single disease, and a single stressor can lead to multiple adverse outcomes. The question shifts from whether A causes B to whether A increases the risk of B. The committee found that the sufficient-component-cause model, which is illustrated in Figure S-2, is a useful tool for conceptualizing the new direction. The same outcome can result from more than one causal complex or mechanism; each mechanism generally involves joint action of multiple components.
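The logic of the sufficient-component-cause model can be made concrete with a small sketch. It assumes that each causal complex is a set of component causes that must all be present, and that the outcome occurs when at least one complex is complete. The component names are hypothetical and are not taken from Figure S-2.

```python
# Each sufficient cause (causal complex) is a set of component causes
# that must all be present. Component names are illustrative only.
SUFFICIENT_CAUSES = [
    frozenset({"stressor_A", "genetic_variant", "background_condition"}),
    frozenset({"stressor_A", "co_exposure_B"}),
    frozenset({"endogenous_process", "nutritional_deficit"}),
]

def completed_causes(present_components, sufficient_causes=SUFFICIENT_CAUSES):
    """Return the sufficient causes whose components are all present."""
    present = set(present_components)
    return [cause for cause in sufficient_causes if cause <= present]

def outcome_occurs(present_components, sufficient_causes=SUFFICIENT_CAUSES):
    """The outcome occurs if at least one sufficient cause is complete."""
    return bool(completed_causes(present_components, sufficient_causes))

# stressor_A alone completes no complex, so it is not sufficient.
print(outcome_occurs({"stressor_A"}))
# stressor_A together with co_exposure_B completes the second complex.
print(outcome_occurs({"stressor_A", "co_exposure_B"}))
# The third complex produces the same outcome without stressor_A.
print(outcome_occurs({"endogenous_process", "nutritional_deficit"}))
```

The three calls mirror the points made in the text: a stressor can contribute to an outcome without being sufficient on its own, and the same outcome can arise through a mechanism that does not involve that stressor at all.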

Most diseases that are the focus of risk assessment have a multifactorial etiology; some disease components arise from endogenous processes, and some result from the human experience, such as background health conditions, co-occurring chemical exposures, food and nutrition, and psychosocial stressors. Those additional components might be independent of the environmental stressor under study but nonetheless influence and contribute to the overall risk and incidence of disease. As shown in the case studies in this report, one does not need to know all the pathways or components involved in a particular disease to begin to apply the new tools to risk assessment. The 21st century tools provide the mechanistic and exposure data to support dose–response characterizations and human-variability derivations described in the NRC report Science and Decisions: Advancing Risk Assessment. They also support the understanding of relationships between disease and components and can be used to probe specific chemicals for their potential to perturb pathways or activate mechanisms and increase risk.

The 21st century science with its diverse, complex, and very large datasets, however, poses challenges related to analysis, interpretation, and integration of data and evidence for risk assessment. In fact, the technology has evolved far faster than the approaches for those activities. The committee found that Bradford-Hill causal guidelines could be extended to help to answer such questions as whether specific pathways, components, or mechanisms contribute to a disease or outcome and whether a particular agent is linked to pathway perturbation or mechanism activation. Although the committee considered various methods for data integration, it concluded that guided expert judgment should be used in the near term for integrating diverse data streams for drawing causal conclusions. In the future, pathway-modeling approaches that incorporate uncertainties and integrate multiple data streams might become an adjunct to or perhaps a replacement for guided expert judgment, but research will be needed to advance those approaches. The committee emphasizes that insufficient attention has been given to analysis, interpretation, and integration of various data streams from exposure science, toxicology, and epidemiology. It proposes a research agenda that includes developing case studies that reflect various scenarios of decision-making and data availability; testing case studies with multidisciplinary panels; cataloguing evidence evaluations and decisions that have been made on various agents so that expert judgments can be tracked and evaluated, and expert processes calibrated; and determining how statistically based tools for combining and integrating evidence, such as Bayesian approaches, can be used for incorporating 21st century science into all elements of risk assessment.
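As one illustration of what a statistically based tool for combining evidence might look like, the sketch below applies Bayes' rule in odds form. It is a deliberately simple example and not a method proposed by the committee: it assumes that each data stream can be summarized by a likelihood ratio for the hypothesis of interest and that the streams are conditionally independent, which is a strong assumption in practice. The prior and the likelihood ratios are hypothetical numbers.

```python
def posterior_probability(prior_probability, likelihood_ratios):
    """Update a prior probability with independent likelihood ratios.

    Uses the odds form of Bayes' rule:
    posterior odds = prior odds * product of likelihood ratios.
    """
    if not 0.0 < prior_probability < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    odds = prior_probability / (1.0 - prior_probability)
    for ratio in likelihood_ratios:
        if ratio <= 0.0:
            raise ValueError("likelihood ratios must be positive")
        odds *= ratio
    return odds / (1.0 + odds)

# Hypothetical summary of three data streams: a ratio above 1 supports
# the hypothesis and a ratio below 1 counts against it.
PRIOR = 0.2
LIKELIHOOD_RATIOS = [4.0, 2.0, 0.5]
POSTERIOR = posterior_probability(PRIOR, LIKELIHOOD_RATIOS)
```

Assigning defensible likelihood ratios to in vitro, in vivo, and epidemiological findings is itself a matter of expert judgment, so a calculation like this would complement guided expert judgment rather than replace it.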

CONCLUDING REMARKS

As highlighted here and detailed in the committee’s report, many scientific and technical advances have followed publication of the Tox21 and ES21 reports. The committee concludes that the data that are being generated today can be used to address many of the risk-related tasks that the agencies face, and it provides several case studies in its report to illustrate the potential applications. Although the challenges to achieving the visions of the earlier reports often seem daunting, 21st century science holds great promise for advancing risk assessment and ultimately for improving public health and the environment. The committee emphasizes, however, that communicating the strengths and limitations of the approaches in a transparent and understandable way will be necessary if the results are to be applied appropriately and will be critical for the ultimate acceptance of the approaches.