Promising Practices and Innovative Programs in the Responsible Conduct of Research: Proceedings of a Workshop (2023)

Chapter: 3 Reproducibility and Data Reuse

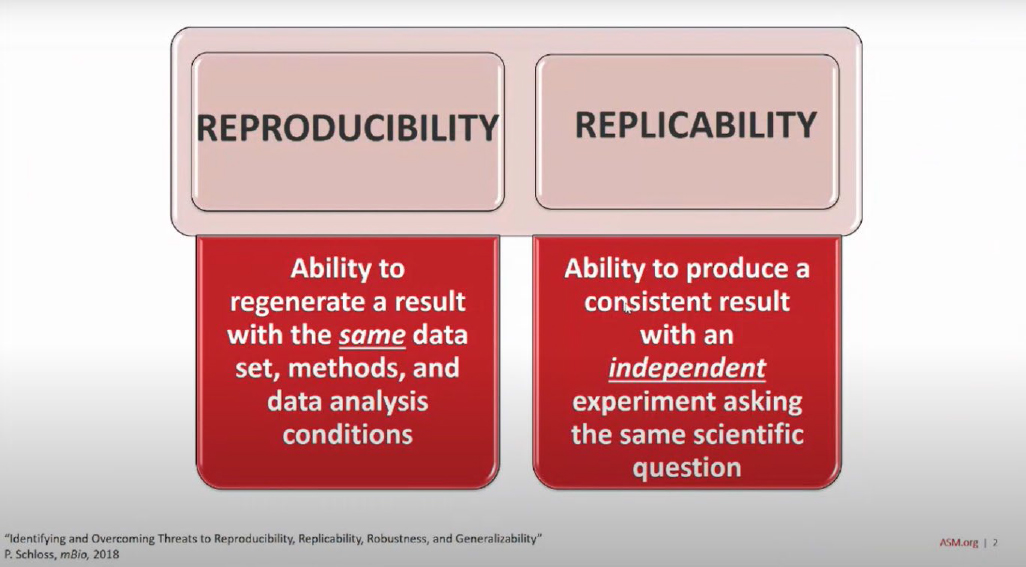

Amy Kullas (American Society for Microbiology) began her presentation on reproducibility and data reuse by distinguishing two terms that many people use interchangeably but that refer to distinct concepts: reproducibility and replicability (see Figure 3-1). While both are hallmarks of good science, a successful replication does not guarantee that the original results of a study were correct, nor does a single failed replication refute the original claims.

SOURCE: Presented by Amy Kullas on October 21, 2021; definitions adopted from Schloss, 2018.

Kullas noted that in 2015, the American Society for Microbiology (ASM) hosted a colloquium that considered issues related to reproducibility, the ethical conduct of scientific research, and good research practices (Davies and Edwards 2016). There was consensus among the attendees that systemic problems in the conduct of science are likely responsible for most instances of irreproducible results. Irreproducible results, said Kullas, make it difficult to have confidence that a finding can be replicated or generalized, and they also erode public confidence in science. Poorly designed experiments that lack sufficient controls are a common threat to reproducibility, particularly when investigators fail to account for confounding variables that influence the variables under study and create the appearance of a relationship between them that does not exist. Subsequent studies may then fail to generate the same results because the same confounding variables are not at work in those studies. Other contributors to irreproducibility include “documented and honest laboratory errors not due to misconduct, lack of or inappropriate controls, faulty statistical analysis, invalid reagents such as contaminated cell lines, favoring certain experimental outcomes over others, disregarding contradictory results, and bias in data selection and use,” said Kullas. She added that it is the responsibility of publishers such as ASM to maintain the integrity of the scientific record.

Regarding what researchers could consider when deciding where to publish their results, Kullas suggested choosing a reputable or well-established publisher, checking whether PubMed or Scopus indexes the journal’s articles, and watching for non-scientific or non-academic advertisements on the journal’s website. She noted that predatory publishers and journals have proliferated over the past decade. These journals publish papers with little or no review, actively solicit papers from early-career or inexperienced researchers, and may even mimic the title of an established journal.

The current preoccupation many scientists have with publishing their work in the journals with the highest journal impact factor (JIF) is having a detrimental effect on the biological sciences, said Kullas. Despite near-universal condemnation of using the JIF to assess the significance of scientific work, it remains widely used to rate publications and scientists in hiring, funding, and promotion decisions. “JIF mania bears on the reproducibility problem,” said Kullas, “because it produces intense pressure on scientists to publish in journals with the highest impact factor,” many of which have publication criteria “that could lead some authors to sanitize or overstate their conclusions to make their paper more attractive in a process that runs the gamut from bad science to outright misconduct.” The bottom line, she added, is to remember that “impact does not equate with importance.”

In spring 2021, one of ASM’s journals was relaunched as an open-access journal that puts this principle into practice. Rather than making subjective evaluations of potential impact, Microbiology Spectrum13 will publish results that are of high technical quality and useful to the community, said Kullas. In addition to studies that advance the understanding of microbial life, the journal will consider replication studies, technically robust datasets that may contradict published findings, negative results, descriptive datasets that would serve as a community resource, methodological advances, and detailed experimental protocols.

RESEARCH MISCONDUCT

Turning to the issue of research misconduct, Kullas defined it as “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research or in reporting research results.” Fabrication refers to making up data or results and recording or reporting them, while falsification is manipulating research materials, equipment, or processes, or changing or omitting data. Plagiarism is the appropriation of another person’s ideas, processes, results, or words without giving appropriate credit. Importantly, said Kullas, research misconduct does not include honest errors or differences of opinion.

___________________

13 Available at https://journals.asm.org/journal/spectrum

Kullas then described three examples of scientific misconduct. In 1974, William Summerlin published results suggesting that he had transplanted skin from mice with black fur onto mice with white fur, but the black fur on the white mice had been colored with a Sharpie marker. In 1998, Andrew Wakefield claimed there was a link between the measles, mumps, and rubella vaccine and autism. Although the paper was retracted in 2010 when it became clear that he had falsified data, it continues to have a negative impact that ripples through science and public health. More recently, Haruko Obokata was lead author on two papers claiming that a simple acid wash was a safe method for turning ordinary cells into stem cells. Within five months, both papers were retracted after an investigation found that she had manipulated images and concluded that she had committed scientific misconduct.

Some duplications within a paper are deceptive, such as rotating or cropping an image to present it as a different image representing a different dataset. Control plots, she noted, can look surprisingly alike, but if controls from the same experiment are reused in different panels of a paper, the authors can state this in the figure legends. Copy-and-paste errors may not be intentional but rather the result of sloppy science, which points to the importance of carefully reviewing both the original data and the manuscript before submission, said Kullas. Ways to prevent sloppy science include labeling files appropriately, depositing the original images in a repository, or providing the original images at the time of submission. Most importantly, Kullas said, investigators should repeat their experiments to verify that their results are reproducible.
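The rotated-duplicate problem can be illustrated with a toy check. This is a minimal sketch, not a tool that Kullas, ASM, or Bik et al. described: it assumes each figure panel has already been reduced to a small grid of grayscale pixel values, and it flags only exact copies that have been rotated and/or mirrored (including unrotated exact copies). Cropping, rescaling, and compression artifacts, which real screening must handle, are ignored. All function and panel names are hypothetical.

```python
# Toy illustration only: each "panel" is a small grid of grayscale pixel
# values, not a real image file, and only exact reoriented copies are found.
Grid = list[list[int]]


def rotate90(grid: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def flip_horizontal(grid: Grid) -> Grid:
    """Mirror a grid left to right."""
    return [row[::-1] for row in grid]


def orientations(grid: Grid) -> list[Grid]:
    """Return the 4 rotations of a grid plus the mirror image of each (8 total)."""
    result = []
    current = grid
    for _ in range(4):
        result.append(current)
        result.append(flip_horizontal(current))
        current = rotate90(current)
    return result


def is_reoriented_copy(a: Grid, b: Grid) -> bool:
    """True if b is an exact copy of a after some rotation and/or mirroring."""
    return any(candidate == b for candidate in orientations(a))


def flag_duplicate_panels(panels: dict[str, Grid]) -> list[tuple[str, str]]:
    """Compare every pair of panels and list the pairs that are reoriented copies."""
    names = sorted(panels)
    flagged = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if is_reoriented_copy(panels[first], panels[second]):
                flagged.append((first, second))
    return flagged


panels = {
    "Fig2A": [[1, 2, 3], [4, 5, 6]],
    "Fig4C": [[4, 1], [5, 2], [6, 3]],  # Fig2A rotated 90 degrees clockwise
    "Fig5B": [[9, 9, 9], [0, 0, 0]],
}
print(flag_duplicate_panels(panels))  # [('Fig2A', 'Fig4C')]
```

A flagged pair is only a prompt for a human to compare the original images; as Kullas noted, control panels can legitimately look alike, and an honest reuse should simply be disclosed in the figure legend.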

Kullas went on to describe a post-publication review of 960 papers published between 2009 and 2016 in the journal Molecular and Cellular Biology, which found that 59 papers, or 6.1 percent, contained inappropriately duplicated images (Bik et al. 2018). The problematic images in 41 of those papers resulted from human error and were corrected by the authors, but the journal retracted five papers because of scientific misconduct.

Following publication of this review, ASM published an editorial noting that the society promotes a culture of rigor, transparency, and data sharing (Kullas and Davis 2017). Kullas explained that ASM analyzes accepted manuscripts, performs post-publication evaluation, and develops training modules and tools for the microbial sciences and the larger scientific community. She suggested that journals establish a standard set of approaches for dealing with both honest and dishonest errors and noted that correcting errors identified in the above study took six hours of journal staff time per problem. “The good news is that increased screening of manuscripts at our journals has led to a reduction in the number of problems,” said Kullas.
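The percentage from the Bik et al. (2018) review follows from simple arithmetic, shown below. The staff-time total is only a rough illustration and rests on an assumption the presentation did not make explicit: that each of the 59 papers with duplicated images counted as one problem and took the full six hours of staff time to resolve.

```latex
\[
\frac{59\ \text{papers}}{960\ \text{papers}} \approx 0.0615 \approx 6.1\%,
\qquad
59\ \text{problems} \times 6\ \tfrac{\text{h}}{\text{problem}} = 354\ \text{h}
\]
```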

To correct the scientific record, journals and authors can issue corrections, expressions of concern, or outright retractions. Kullas said that an author correction or retraction can be published when honest mistakes happen because it is important to correct and safeguard the scientific record, particularly in an era of fake news and alternative facts. She also noted that there are some emerging solutions to assist with this important task. Preprints, draft research articles that researchers post publicly before or during the peer review process, can help by allowing the scientific community to provide feedback, including pointing out errors, prior to publication. Increased education and vigilance can also lessen the need for corrections. In the review and publication process, reviewer education is important, as are enhanced editorial scrutiny and the deposition of primary data. Beyond retractions and corrections, post-publication mechanisms that help protect the scientific record include PubPeer, an online journal club; Retraction Watch,14 a blog that reports on retractions of scientific papers; and reader comments published in journals.

INCREASING TRANSPARENCY AND REPRODUCIBILITY

Like most scientific publishers, ASM has an open data policy that asks researchers to make the data and code used in their studies publicly available in data repositories upon publication. Authors must provide a data availability paragraph that describes where they have deposited their data, including the name of the repository and the DOI or accession numbers needed to access the data. Journal editors then certify compliance with this policy. ASM defines scientific data as the recorded factual material that the scientific community would commonly accept as necessary to validate and replicate the research findings. This includes nucleotide and amino acid sequences, microarray and next-generation sequencing data, high-throughput functional genomics data, structural and X-ray crystallography data, software and code, proteomics and metabolomics data, mass spectrometry images, and video imaging analyses.
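Because the policy asks for specific, machine-findable items (a repository name and a DOI or accession number), part of an editor’s compliance check lends itself to automation. The sketch below is hypothetical and is not ASM’s process: it looks only for repository names from a hard-coded list (the general-purpose repositories named in this chapter) and for strings shaped like DOIs, using a pattern adapted from one Crossref has published for matching most modern DOIs. It does not recognize accession numbers, whose formats differ by database, and it does not confirm that a DOI actually resolves, so editorial judgment is still required. The example statement is made up.

```python
import re

# Adapted from the pattern Crossref has published for matching most modern
# DOIs; some older, unusually formatted DOIs will not match.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[-._;()/:A-Za-z0-9]+")

# Hypothetical list: the general-purpose repositories named in this chapter.
KNOWN_REPOSITORIES = ["Figshare", "Dryad", "Zenodo", "protocols.io", "Code Ocean", "GitHub"]


def _trim(doi: str) -> str:
    """Drop sentence punctuation (and an unmatched ')') caught at the end of a match."""
    doi = doi.rstrip(".,;")
    while doi.endswith(")") and doi.count(")") > doi.count("("):
        doi = doi[:-1].rstrip(".,;")
    return doi


def check_data_availability(statement: str) -> dict:
    """Report which known repositories and DOI-like strings a statement names."""
    dois = [_trim(match) for match in DOI_PATTERN.findall(statement)]
    lowered = statement.lower()
    repositories = [name for name in KNOWN_REPOSITORIES if name.lower() in lowered]
    return {
        "repositories": repositories,
        "dois": dois,
        # A coarse screen: at least one repository name AND one DOI must appear.
        "looks_complete": bool(repositories) and bool(dois),
    }


example = "Reads and code are deposited in Zenodo (https://doi.org/10.5281/zenodo.1234567)."
print(check_data_availability(example))
```

A statement such as “Data are available from the authors on request” would fail this screen, which is the kind of paragraph an editor would then follow up on by hand.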

Kullas noted that link rot, in which hyperlinks no longer point to their originally targeted files, has become a significant problem for those attempting to access the data and methods they need to reproduce results. In addition, rapid advances in sequencing technology, data curation, database structures, and statistical techniques present a further threat to reproducibility because what the scientific community considers best practice is constantly evolving.

The data life cycle, Kullas explained, begins in the experimental planning and design phase. It then proceeds through data collection, data processing and analysis, data sharing and publication, and data preservation, curation, and stewardship, which in turn promotes data reuse and reanalysis of datasets and leads to planning and design of the next set of experiments. She noted that sharing data has many benefits that extend beyond the scientific enterprise. For the individual researcher, data sharing promotes reliability, leads to citations, and makes the data easier for others to discover, which can lead to fruitful collaborations and research resources, including funding. For the research field or discipline, data sharing increases collaborations, benefits teaching by making new experimental methods available to students, and improves the replication and reliability of the discipline as a whole, which leads to advancements in the field. For the larger community, data sharing increases public trust in and understanding of science, triggers innovation, informs policy decisions, and strengthens the economy.

Two more tools for increasing transparency and reproducibility are the Contributor Roles Taxonomy (CRediT),15 which identifies the roles played by contributors to scholarly output, and ORCiD,16 a persistent digital identifier that an individual owns and controls. Including an ORCiD identifier in a manuscript, a grant submission, or a dataset deposited in a repository supports automatic linkage between an investigator and their professional activities and ensures that the individual is recognized for their work. ORCiD has emerged as a technology for solving the link rot problem, and Kullas said that many journals now use it as a persistent link to an individual’s work over their entire career, from graduate student to postdoctoral fellow to faculty member.
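A persistent identifier helps only if it is recorded correctly, and ORCiD iDs carry a built-in safeguard for this. According to ORCID’s public documentation, the last character of an iD is a check character computed with the ISO/IEC 7064 MOD 11-2 algorithm, so any single mistyped digit is detectable before a record is linked to the wrong person. The sketch below illustrates that published scheme; it is not something Kullas presented, and the function names are hypothetical. The sample iD 0000-0002-1825-0097 is the fictitious one ORCID uses in its own documentation.

```python
import re

# Format of an ORCiD iD: four hyphen-separated blocks of four characters,
# where only the final character may be "X".
ORCID_FORMAT = re.compile(r"[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]")


def orcid_check_character(base_digits: str) -> str:
    """ISO/IEC 7064 MOD 11-2 check character for the first 15 digits of an iD."""
    total = 0
    for digit in base_digits:
        total = (total + int(digit)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """True if the iD is well formed and its last character matches the checksum."""
    if not ORCID_FORMAT.fullmatch(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_character(digits[:15]) == digits[15]


print(is_valid_orcid("0000-0002-1825-0097"))  # True (ORCID's fictitious sample iD)
print(is_valid_orcid("0000-0002-1825-0079"))  # False (last two digits swapped)
```

A valid checksum shows only that the iD is well formed; confirming that it belongs to the right person still requires looking up the record.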

Too often, she said, the underlying raw sequencing data and the associated metadata that contextualize them are not available, making it impossible to reproduce prior results or analyses. Kullas suggested that investigators use well-established databases to store the wide range of microbiological data, and archive other types of data, research protocols and methods, and software and code in open-access, third-party repositories such as Figshare, Dryad, Zenodo, protocols.io, Code Ocean, and GitHub.17 Some universities have begun offering their own institutional repositories that comply with both federal and state guidelines.

___________________

15 Available at https://casrai.org/credit/

16 Available at https://orcid.org/

“It is important to assess and learn about the different repository options and determine which is best for the type of data generated,” said Kullas. Key features to look for in a repository include sustainability, accessibility, preservation, and system integrity. Her suggestions for increasing data usage, sustainability, and curation included “improvements to manuscript tables, texts, or figures to aid in understanding and reuse of the work; data access or license conditions updated at repository or in manuscripts to aid accessibility; repository metadata for improved aid of dataset discoverability; and improvements for the file names or structure at the repository to aid in the understanding and subsequent reuse of the work.”

On a final note, Kullas pointed out that many stakeholders share responsibility for upholding research and data integrity. While researchers and institutions play a significant role, so too do funders, government, and publishers, as well as database and analytics providers. “It is a shared responsibility that requires each participant to seek out information on how to identify and address data concerns,” she said.

DISCUSSION

To start the discussion, Michael Kalichman (University of California, San Diego) asked Kullas whether she had seen any promising practices that could strengthen the responsible conduct of research (RCR). Kullas replied that one of the most promising practices is making the underlying data available and archived for others to review and use. She noted that because data storage has become so inexpensive, there is no reason not to archive original data, and she pointed out that some publishers are asking for original blots and microscopy images to accompany submitted manuscripts.

Kalichman credited ASM with being a leader in fostering scientific integrity, and asked Kullas what other fields could do to move the needle in RCR. Kullas said that ASM conducted a robust, comprehensive, organization-wide ethics review of all of its processes and procedures and has begun centralizing ethics resources. What other organizations can do, she said, is establish a committee to take a 360-degree view of ethical issues in the organization and identify areas where it could improve its processes and procedures.

An attendee18 commented on the link Kullas made between research integrity and public trust and asked her what she thought of community peer review of research pertaining to specific communities, such as patient or environmental justice communities. Kullas replied that community peer review using preprints would work for some communities. One approach would be for a researcher to discuss their preprint at a journal club involving the community and post the comments on a data archive site. This could start a conversation even before the publication stage that might raise potential concerns.

Asked for advice on how researchers could advocate for the value of publishing negative results and replication studies, Kullas suggested using preprints and choosing journals that publish negative results and replication studies, but she acknowledged that this is one of the bigger challenges to increasing research integrity. Kullas also called on scientific societies that publish journals to recognize the value of publishing negative results and replication studies.

___________________

17 Available at https://figshare.com/, https://datadryad.org/stash, https://zenodo.org/, https://www.protocols.io/, https://codeocean.com/, and https://github.com, respectively.

18 The ePosterBoards platform supports text-based questions and also allows questions to be asked anonymously. For consistency, individuals who asked questions, other than session moderators and planning committee members, are not named.

Responding to Kalichman’s question about the responsibilities of reviewers to watch for misconduct, Kullas said that ASM is working on educating reviewers and is starting to see reviewers note potential concerns in the papers they are reviewing. ASM then investigates these concerns by asking the researchers to provide their original data. She said she knew of several instances in which the authors could not provide the original data, and the ASM journal therefore did not proceed with publication.

BREAKOUT DISCUSSION

Following Kullas’s presentation on reproducibility and data reuse, attendees discussed actions they planned to take based on the information learned at the workshop. The following section presents the highlights of that discussion as reported by Sonia Chawla (Eastern Michigan University), the designated rapporteur for the group.

One topic the group discussed was the importance of researchers evaluating themselves and their research, particularly around ethical decision-making. They observed that offering RCR courses for credit might incentivize students to take such a course, and wondered how to implement team-based learning in such courses. The group discussed publishers’ requirement to provide original data and suggested that publishers could create a publication ethics checklist that authors would complete before submitting their paper.

The group commented on the tremendous effort and resources needed to correct mistakes and discussed ways of preventing mistakes before publication, including asking investigators to turn over their data to an artist or another researcher not involved in data collection, who could create graphics from those data; extending the practice of preregistering clinical trials to other areas of research, with allowances made for exploratory research; collaborating with the Committee on Publication Ethics19 to bridge the gap between researchers, editors, and publishers; teaching ethical decision making; requiring every researcher to take statistics; and using blinded peer review.

There was also some discussion about changing the focus from ethics, which can be a loaded term, to promoting good research practices that would continuously move the needle in the direction of good science and engineering practice. The group suggested that concentrating RCR training on graduate students could have a trickle-up effect that might influence faculty members who may be resistant to change. On a final note, some members of the group noted that equity and inclusion might be considered a part of research ethics, particularly regarding how research results might affect certain populations.

___________________

19 More information is at https://publicationethics.org/.