Incorporating Integrated Diagnostics into Precision Oncology Care: Proceedings of a Workshop (2024)

Proceedings of a Workshop

WORKSHOP OVERVIEW1

Advances in informatics and the diagnostic medical specialties (radiology, pathology, and laboratory medicine) could potentially reshape cancer diagnosis and enhance precision oncology care. Integrating multiple types of diagnostic data and analyzing them with artificial intelligence (AI) algorithms could help guide personalized treatment and improve patient outcomes. No uniform strategy currently exists, however, to develop, validate, implement, and use integrated diagnostics in cancer care.

___________________

1 This workshop was organized by an independent planning committee whose role was limited to identification of topics and speakers. This Proceedings of a Workshop was prepared by the rapporteurs as a factual summary of the presentations and discussions that took place at the workshop. Statements, recommendations, and opinions expressed are those of individual presenters and participants and are not endorsed or verified by the National Academies of Sciences, Engineering, and Medicine, and they should not be construed as reflecting any group consensus.

The National Cancer Policy Forum, in collaboration with the Computer Science and Telecommunications Board and the Board on Human–Systems Integration of the National Academies of Sciences, Engineering, and Medicine, hosted a public workshop on incorporating integrated diagnostics into precision oncology care on March 6 and 7, 2023. Hedvig Hricak, the Carroll and Milton Petrie endowed chair in the Department of Radiology at the Memorial Sloan Kettering Cancer Center (MSK), described the workshop as “an opportunity for the cancer community to discuss the current state of the field of integrated diagnostics, including the purpose, goals, and components of integrated diagnostics.” It featured presentations and panel discussions on a range of topics, including:

- current efforts to develop and implement integrated diagnostics to inform treatment decision making in cancer care;

- implications of integrated diagnostics for clinician training, care workflows, and the organization of care teams;

- opportunities for evidence generation to inform validation, clinical utility, regulatory oversight, and insurance coverage for integrated diagnostics;

- strategies for ongoing quality assurance, evaluation, and refinement of integrated cancer diagnostics based on new evidence; and

- mechanisms to enable broad patient access to integrated diagnostics, particularly in community-based settings of cancer care.

This Proceedings of a Workshop summarizes the presentations and discussions from the workshop. Observations and suggestions from individual participants are discussed throughout the proceedings and highlighted in Boxes 1 and 2. Appendixes A and B provide the Statement of Task and agenda, respectively. Speaker presentations and the workshop webcast are archived online.2

OVERVIEW OF THE CURRENT STATUS OF AND VISION FOR INTEGRATED DIAGNOSTICS

An Evolving Integrated Data Science Approach to Personalized Oncology Care

There is currently no consensus definition of integrated diagnostics, said Kojo Elenitoba-Johnson, inaugural chair of the department of pathology and laboratory medicine and James Ewing Alumni Chair of Pathology at MSK. He referred to Hricak’s working definition as “the convergence of imaging, pathology, and laboratory testing, supplemented by advanced information technology, which has enormous potential for revolutionizing the diagnosis and therapeutic management of many diseases, including cancer.”

___________________

2 See https://www.nationalacademies.org/event/03-06-2023/incorporating-integrated-diagnostics-into-precision-oncology-care-a-workshop (accessed May 26, 2023).

BOX 1

Observations on the Development and Use of Integrated Diagnostics in Cancer Care Made by Individual Workshop Participants

Describing Integrated Diagnostics

- The concept of integrated diagnostics refers to the convergence of imaging, pathology, and laboratory testing data, augmented with information technology. (Elenitoba-Johnson, Hricak)

- Integrated diagnostics are not a clinical decision support system (although they support clinical decision making), and they are much more than a data aggregation dashboard. (Hricak)

- Integrated diagnostics are intended to be patient centered. (Elenitoba-Johnson, Hirsch, Hricak, Lennerz, Osterman)

Diagnostics Research and Practice

- Diagnostic testing is siloed by discipline, making it challenging for oncology clinicians to assimilate patient data to inform a patient’s care plan. (Hricak)

- Multidisciplinary teams are essential to develop, implement, and use integrated diagnostics. (Hricak, Osterman, Shah, Thai)

- The goal of integrated diagnostics is to create clinical partnerships and align workflows to facilitate the integration of diagnostic data from across disciplines. (Lennerz, Salto-Tellez, Schnall)

- Cultural barriers to implementing integrated diagnostics persist; despite growing evidence supporting the use of artificial intelligence (AI) in precision oncology, many clinicians still hesitate to do so in practice. (Choudhury, Elenitoba-Johnson, Krestin, Oyer)

- Key factors that affect clinician adoption of AI-based integrated diagnostics are trust in the technology and accountability. (Choudhury, Dorr)

- Incentives are needed to promote the adoption of integrated diagnostics into practice. (Elenitoba-Johnson)

Evidence Generation

- Developing a learning health care system is one option to generate the evidence needed to evaluate integrated diagnostics. (Levy)

- Electronic health record (EHR) data and capabilities can be leveraged to support the evaluation of integrated diagnostics. (Levy, Meropol)

-

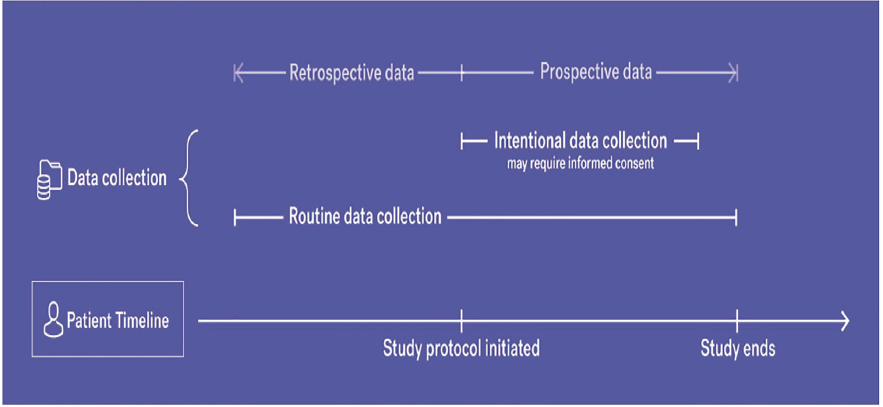

- EHR data collected during routine care can be used for pragmatic studies. (Sawyers)

- Intentional EHR-based data collection can support prospective observational studies (i.e., data that may not be needed for a patient’s routine care but are collected as part of routine workflows). (Meropol, Sawyers)

Design and Use in Clinical Practice

AI Models

- AI models in diagnostics are becoming increasingly computationally complex but also less transparent. (Thai)

- AI algorithms can perpetuate and amplify biases in training datasets, leading to biased interpretations when applied to broader populations. (Dorr, Thai)

- An AI algorithm developed at one institution often does not perform as expected or required at different institutions. (Barzilay, Levy, Salto-Tellez)

- Machine learning algorithms that are incorporated into clinical workflow can nudge clinicians toward testing or treatment options based on patient results, new research, or other information. (Robison)

- Self-supervised learning by AI is one approach to handling the massive volumes of health data being generated by medical technologies and devices. (Comaniciu)

Workflow and Workforce

- The use of unstructured, text-based clinical notes in the EHR persists, and the lack of uniform, structured, and synoptic reporting contributes to diagnostic uncertainty. (Hricak, Osterman)

- Data standards exist, but entry of structured data does not always fit well within clinical workflow. (Osterman)

- Patient-centric user interfaces for integrated diagnostics are needed, using design principles to prioritize the most important information for patient care and actions to consider. (Dorr, Robison, Shah)

- A diagnostic management team approach can improve care and reduce medical errors by providing guidance on appropriate diagnostic test ordering and use and interpretation of test results. (Laposata)

- Investments in data engineering are needed to scale integrated diagnostics. (Shah)

Regulatory and Coverage Considerations

- The U.S. Food and Drug Administration evaluates integrated diagnostics and AI-based digital technologies according to the traditional risk-based review framework for medical devices. (Philip)

- Clinical coverage for a service generally requires a high level of evidence of clinical utility, with clearly defined improvement in outcome (which might be an intermediate outcome for diagnostics). (Malin)

- Billing codes are now available for the time laboratory medicine experts spend advising clinicians on diagnostic test selection and result interpretation. (Laposata)

Access and Equity

- Equity is central to all efforts in developing and using integrated diagnostics. (Nilsen)

- Validating AI-based risk prediction algorithms across institutions and populations is a challenge. (Barzilay)

- One algorithmic approach to improve equity in AI model design is distributional shift detection, in which the algorithm learns to detect bias automatically. (Barzilay)

- Because it may not be possible to explain how an algorithm makes a prediction, an alternative approach is to design the model to learn and report when its prediction cannot be trusted, based on calibrated uncertainty. (Barzilay)

- Most patients with cancer receive their care in community settings, and disparities in access to high-quality care and new technologies persist in many communities outside the catchment area of National Cancer Institute–designated cancer centers or other major academic centers. (Brawley, Oyer, Shulman)

- Introducing new technologies into clinical practice can create disparities in care (e.g., due to lack of access, uneven implementation, and the use of limited resources for unnecessary care). (Brawley)

NOTE: This list is the rapporteurs’ synopsis of observations made by one or more individual speakers as identified. These statements have not been endorsed or verified by the National Academies of Sciences, Engineering, and Medicine. They are not intended to reflect a consensus among workshop participants.

BOX 2

Opportunities to Advance the Development, Implementation, and Use of Integrated Diagnostics in Precision Cancer Care Suggested by Individual Workshop Participants

Implementing Integrated Diagnostics

- Ensure interoperability of data systems across clinical disciplines. (Osterman, Trentadue)

- Establish, adopt, and integrate data standards into clinical workflows. (Osterman)

- Deploy structured electronic health record fields for standardized data elements. (Osterman)

- Adopt synoptic operative reports. (Hricak, Osterman)

- Develop a lexicon to convey degree of diagnostic certainty. (Hricak)

- Incorporate patient-level longitudinal data. (Levy, Robison)

- Collaborate to advance the automation of tumor imaging annotation and segmentation. (Hricak)

- Develop institutional practices for data governance and facilitating a culture of data sharing. (Butte, Hricak)

- Ensure integrated diagnostics have feedback loops to support a learning health care system. (Kronander, Levy)

- Recruit more data scientists to cancer research and care to enhance efficient translation of scientific and technological advances in patient care. (Shah)

Improving Design and Use

- Use training and testing datasets that are representative of the population for which the technology is intended. (Barzilay, Levy, Thai)

- Develop a code of conduct for implementing AI-based integrated diagnostics. (Dorr)

- Establish shared or distributed accountability based on risk and intended use. (Choudhury)

- Promote open data policies, requiring public release of data used in algorithm training and testing. (Shah)

- Use principles of risk communication to support shared decision making (e.g., use numbers; avoid imprecise terms; use data visualizations to convey risk; keep denominators consistent; discuss absolute and not relative risk). (Politi)

- Apply implementation science principles to promote the uptake of integrated diagnostics into clinical workflows and prevent unintended consequences. (Dorr)

- Train the next generation of scientists and clinicians to be able to communicate across disciplines and apply an integrated approach to diagnostics. (Gold, Schnall)

- Embrace regulatory science, and actively engage with regulators on issues related to the evaluation and implementation of integrated diagnostics. (Lennerz)

- Provide insurance reimbursement for patient diagnosis and care rather than for specific diagnostic tests and procedures. (Kronander)

Facilitating Access and Equity

- Build a national hub-and-spoke system to address gaps in access to high-quality cancer care to facilitate the equitable deployment of integrated diagnostics. (Oyer)

- Apply implementation science approaches to promote equitable uptake and diffusion of new tools and technologies and avoid worsening disparities in resource-poor settings. (Brawley)

- Leverage new digital technologies to increase the capacity and effectiveness of the cancer care workforce and improve patient experience and outcomes. (Oyer)

- Provide training to increase the ability and skills of the current oncology workforce to integrate diagnostic tools into practice. (Oyer)

NOTE: This list is the rapporteurs’ synopsis of suggestions made by one or more individual speakers as identified. These statements have not been endorsed or verified by the National Academies of Sciences, Engineering, and Medicine. They are not intended to reflect a consensus among workshop participants.

Elenitoba-Johnson said the core patient-centered goals for integrated diagnostics include:

- Diagnosis: “What disease does the patient have?”

- Residual disease: “How much disease is there?”

- Prognosis: “Who needs treatment?”

- Therapy: “What is the best treatment for this patient?”

- Pharmacodynamics/pharmacogenomics: “What dose?”

He characterized the overall goal of integrated diagnostics as “precision diagnostics scaled at a population level for impact at an individual level.”

Integrated diagnostics present a range of opportunities to reduce medical errors and improve patient outcomes, noted Hricak. From a clinical perspective, she said integrated diagnostics can function as AI-facilitated tumor boards.3 For research, large annotated and curated databases can provide opportunities for new discoveries. Moreover, continuous feedback from integrated diagnostics supports education. Access to data from integrated diagnostics can facilitate shared decision making among patients and their clinicians and promote health equity by enabling personalized precision cancer care, she said.

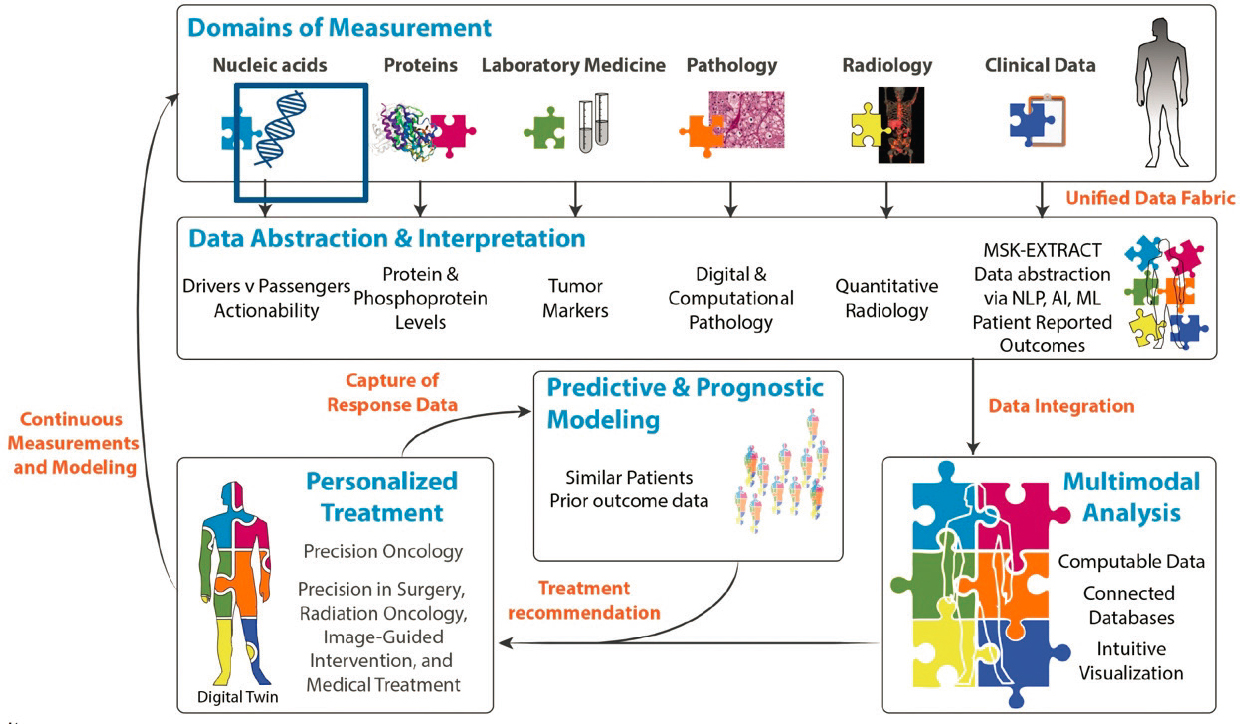

For personalized treatment, Elenitoba-Johnson said that no single entity or clinical domain has all the tools or expertise necessary to measure, abstract, and interpret all the necessary data. To address this gap, integrated data science is “leveraging platforms that horizontally integrate information from disparate sources in a standardized fashion,” he noted (see Figure 1).

As an example, Elenitoba-Johnson described the interconnected Honest Broker for BioInformatics Technology platform to integrate digital pathology data from multiple sources and support its use for clinical, research, and educational purposes at MSK. He noted that MSK has digitally archived more than 5 million pathology slides to enable reliable retrieval of imaging data and the development of enhanced reporting through detailed digital annotation (Roth et al., 2021).

Another example of an integrated information system he described is Integrated Mutation Profiling of Actionable Cancer Targets (MSK-IMPACT®),4 a tumor gene sequencing system targeting 505 genes to create a molecular tumor tissue profile (Zehir et al., 2017). To support the standardization, interpretation, and dissemination of the clinical and genomic tumor data generated,5 a precision oncology knowledge base called “OncoKB” was developed (Chakravarty et al., 2017). Elenitoba-Johnson explained that MSK provides every patient with a personalized, comprehensive diagnostic assessment based on their MSK-IMPACT tumor testing, which includes “information about actionable mutations that are prognostically relevant.”

___________________

3 A tumor board is “a treatment planning process in which a group of cancer doctors and other health care specialists meet regularly to review and discuss new and complex cancer cases. The goal of a tumor board review is to decide as a group on the best treatment plan for a patient. These meetings can involve specialists from many areas of health care, including medical oncologists, radiation oncologists, surgeons, pathologists, radiologists, genetics experts, nurses, physical therapists, and social workers.” See https://www.cancer.gov/publications/dictionaries/cancer-terms/def/tumor-board-review (accessed December 27, 2023).

4 See https://www.mskcc.org/msk-impact (accessed August 30, 2023).

SOURCES: Kojo Elenitoba-Johnson and Hedvig Hricak presentations, March 6, 2023, and N. Schulz, L. Mellinghoff, and H. Hricak. 2017. Physical Sciences into Medicine Memorial Sloan Kettering Strategic Workshop.

An Expanding Role for AI

Elenitoba-Johnson shared several examples of how AI is being leveraged to enhance the capabilities of integrated diagnostics in precision oncology care. As noted, MSK has digitized more than 5 million pathology slides, which can be used to train machine learning (ML) algorithms to identify cancer in tissue samples, often with improved accuracy compared to human review. He shared other examples of how AI is being used to improve data integration, such as studies using ML to improve risk stratification of patients with high-grade serous ovarian cancer (Boehm et al., 2022a) and prediction of response to immunotherapy in patients with non-small-cell lung cancer (Vanguri et al., 2022). He noted that digital pathology platforms also enable remote diagnostics, which can increase capacity, promote health equity, and, when implemented at scale, could potentially be used to improve cancer care and outcomes at a population level.

But Elenitoba-Johnson also cautioned that “AI is not infallible intelligence.” He explained that AI’s potential is affected by the quality of the datasets used to train the algorithms, automated discrimination associated with training datasets, human error, and the inability of algorithms to understand the context of the data (Chakravorti, 2022). He quoted four lessons learned from an analysis of the use of AI in the response to the COVID-19 pandemic (Chakravorti, 2022):

- Better ways to assemble comprehensive datasets and merge data from multiple sources are needed.

- A diversity of data sources is necessary.

- Aligned incentives can help ensure greater cooperation across teams and systems.

- International rules for data sharing are lacking.

___________________

5 See https://www.oncokb.org/ (accessed August 30, 2023).

To address these issues and advance the use of AI in biomedical research, the National Institutes of Health (NIH) Common Fund has launched the Bridge to Artificial Intelligence (Bridge2AI) program.6 Areas of focus include creating “flagship datasets” that are ethically sourced and adhere to the FAIR (findable, accessible, interoperable, and reusable) principles.7

Implementation Challenges

Hricak mentioned the exponential growth in diagnostic testing, but noted that testing tends to be siloed, which presents significant challenges for oncology clinicians in assimilating and interpreting the disparate diagnostic data for patient care. She underscored that the overall goal of an integrated data science approach to personalized treatment is to integrate all domains of measurement. However, challenges to achieving this goal range from data extraction and aggregation to integration and analysis.

Hricak said that data extraction challenges are substantial for pathology, radiology, and clinical data domains. Taking radiology data as an example, she noted that results reporting has remained essentially unchanged for well over a century and is still largely free text. Hricak explained that the lack of uniform, structured, and synoptic reporting8 leads to diagnostic uncertainty. Despite great interest in AI for tumor segmentation, feature extraction, and classification, this process remains largely manual. Manual segmentation, which identifies the spatial location of a tumor, is “the greatest roadblock to integrated diagnostics in both clinical and research workflows,” according to Hricak. She emphasized the need for “automated, algorithmic, validated tumor segmentation for every [body] site and every [imaging] modality.”

Hricak pointed to data governance and the culture around data sharing as additional challenges affecting the fields of radiology and pathology. Protections are needed to “prevent misuse and misinterpretation of data; protect interests of patients, faculty, multidisciplinary tumor boards, departments, and institutions; and ensure proper recognition of contributions,” noted Hricak, adding that it is also important to “be mindful of both scientific and business interests for all parties.” Hricak said that challenges for data integration and multimodal analysis include the need to develop new algorithms and identify new biomarkers for predictive and prognostic modeling.

___________________

6 See https://commonfund.nih.gov/bridge2ai (accessed May 26, 2023).

7 See https://www.go-fair.org/fair-principles/ (accessed December 27, 2023).

8 Synoptic reporting “is a method of clinical documentation that captures and displays specific data elements in a specific format.” See https://radiopaedia.org/articles/structured-reporting (accessed December 27, 2023).

Hricak stressed that the concept of integrated diagnostics is much more than a data aggregation dashboard; integrated diagnostics are an essential element of clinical decision support (NAM, 2017). Although both integrated diagnostics and clinical decision support tools are used with the goal of reducing medical errors, integrated diagnostics specifically focus on diagnostic errors (NASEM, 2015).

Until the goal of integrating all domains of measurement can be achieved, “every integration helps,” Hricak said. For example, when ruling out bone metastasis in patients with multiple myeloma, 18F-Fluorodeoxyglucose (FDG)–positron emission tomography (PET) results were negative in 11 percent of patients who had magnetic resonance imaging (MRI) findings of bony lesions in the spine (Rasche et al., 2017). Thus, integrating MRI and FDG-PET results can help ensure that patients who have metastatic tumors are not missed. Referencing another study that examined the use of integrated multiplatform omics tests and imaging to identify potential mechanisms of therapeutic response and resistance in metastatic cancers (Johnson et al., 2022), Hricak pointed out that these data were manually curated by 20 people over nearly a year. The study authors noted that further development of methodology for integrative analysis would support broad implementation in both research and clinical practice. She concluded that making integrated diagnostics a reality requires vision, courage, agility, collaboration, and perseverance.
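The MRI and FDG-PET example above amounts to an any-positive rule across modalities: a lesion seen on either study is carried forward, and a negative result on one modality alone is not treated as conclusive. The following minimal Python sketch illustrates that logic only. The class, field names, and output labels are hypothetical assumptions introduced for illustration; they are not drawn from the cited study or from any clinical system, and the sketch is not a clinical decision rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ImagingFindings:
    """Hypothetical per-patient findings; None means the study was not performed."""
    mri_lesion: Optional[bool] = None
    fdg_pet_lesion: Optional[bool] = None


def integrated_lesion_call(findings: ImagingFindings) -> str:
    """Combine the available modalities with an any-positive rule."""
    results = [r for r in (findings.mri_lesion, findings.fdg_pet_lesion)
               if r is not None]
    if not results:
        return "no imaging available"
    if any(results):
        # A positive result on either modality is kept, even if the other is negative.
        return "lesion suspected"
    if len(results) < 2:
        # A single negative study is reported as such rather than as a full rule-out.
        return "negative on one modality only"
    return "negative on both modalities"
```

Under these assumptions, a patient with a negative FDG-PET but a positive MRI is still flagged, which is the situation the example describes.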

Efforts to Improve Interoperability

Travis Osterman, director of cancer clinical informatics at Vanderbilt-Ingram Cancer Center, likened integrated diagnostics to a pipeline being filled with information from different clinical disciplines. Interoperability, which connects all of these multidisciplinary segments of pipe together, requires not only establishing data standards but adopting and integrating them into clinical workflows, he said. Integrated diagnostics falter when gaps in interoperability result in suboptimal data handoffs. Osterman likened this to the pipeline ending and pouring data into a bucket that must then be hand carried to another pipeline. Text-based clinical notes, explained Osterman, are one example of data that end up in a “bucket” for manual transport.

Examples of Progress Toward Interoperability

Development of data standards

Osterman explained that some data in operative reports are entered as unstructured notes, which creates a gap in the integrated diagnostics pipeline. To address this, the American College of Surgeons Commission on Cancer requires that operative reports for select oncologic surgeries meet technical and synoptic formatting standards.9 The incorporation of standardized data elements in structured fields will better enable the collection, retrieval, and sharing of surgical information, which Osterman called “a breakthrough for the field.” He added that standards for synoptic operative reports for additional oncologic surgeries are expected to be forthcoming. He explained that academic cancer centers accredited by the Commission on Cancer are now in the process of implementing the formatting standards, and such requirements by accreditors are one approach to facilitate adoption. Osterman pointed out that it remains to be determined how well these standards can be embedded into clinical workflows where surgeons often type or dictate their notes.

Alignment with clinical workflow

Osterman pointed out that standards for capturing cancer staging as structured data exist, although implementation has been generally poor. One study found that before launching an implementation initiative, only 20 percent of patient records contained such information (Emamekhoo et al., 2022). Osterman suggested that rates of structured staging at many cancer centers are even lower. Clinical staging is often recorded as text in the clinical note or assessment plan, even though most electronic health records (EHRs) have structured fields for it. The problem is that making the structured entry requires the oncologist to take extra time to launch a separate form, and Osterman said, “many don’t see the direct benefit to either them or their patients.” Thus, the standards exist, but the process to enter structured information does not fit into the workflow. He pointed out that the lack of such structured data negatively affects research, as it can be extremely challenging to identify patients with a particular stage of cancer for clinical studies. In the study cited, the solution deployed was to “force” oncologists to complete the structured staging fields before they can close out the clinical encounter, Osterman said. Although this approach increased entry of structured staging information to 90 percent, he called for better solutions that align with clinical workflows so clinicians can spend their time on patient care.
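The “forced” completion approach described above can be pictured as a validation gate on closing the encounter, paired with a simple rate of structured staging across records. The Python sketch below is a minimal illustration under stated assumptions: the `Encounter` class, its fields, and both functions are hypothetical and do not represent any EHR vendor's interface or the implementation used in the cited study.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Encounter:
    """Hypothetical encounter record (not a real EHR schema)."""
    patient_id: str
    note_text: str                          # free-text note; may mention stage
    structured_stage: Optional[str] = None  # e.g., "IIA"; None if not entered
    closed: bool = False


def close_encounter(enc: Encounter) -> Encounter:
    """Refuse to close the encounter until the structured stage field is filled."""
    if not enc.structured_stage:
        raise ValueError("structured staging is required before closing")
    enc.closed = True
    return enc


def structured_staging_rate(encounters: Iterable[Encounter]) -> float:
    """Fraction of encounters that carry a structured stage value."""
    records = list(encounters)
    if not records:
        return 0.0
    return sum(1 for e in records if e.structured_stage) / len(records)
```

A gate of this kind raises the capture rate but adds a step to the workflow, which is the tradeoff Osterman raised in calling for solutions that fit how clinicians already work.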

Adoption of standards

Recalling the early days of genomic testing, Osterman explained that results from outside laboratories were provided by fax and not only unstructured but often difficult to interpret. Reports were later provided in PDF, and many oncologists now access testing results from an outside vendor via an online portal. The challenge, he said, is that the results often stay in that portal and are not uploaded to the patient’s EHR. Using the pipeline imagery, Osterman pointed out that in these situations, the integrated diagnostics pipeline ends, and it is not clear where to even find the bucket of data to carry to the next section of pipe.

___________________

9 See https://www.facs.org/media/avukq4nc/coc_standards_5_3_5_6_synoptic_operative_report_requirements.pdf (accessed May 26, 2023).

To address this gap, the Health Level Seven (HL7) standards for reporting structured clinical genomics data (including cancer-based testing, as well as somatic, germline, and pharmacogenomic testing) were introduced in 2019.10 These standards were designed to align with the oncology workflow by making it easy to order genomic testing and receive the results. The HL7 reporting standards have been widely adopted by genomic testing laboratories, EHR vendors, and health care systems, Osterman said, and the number of institutions receiving genomic data from vendors in structured format continues to increase. Achieving interoperability means these data also are available for other diagnostic uses (e.g., clinical decision support, recommending clinical trials, population health).

Gaps and Opportunities for Improvement

Despite progress toward interoperability, Osterman said that ongoing work is needed to identify and fill gaps in data standards. One area for attention is disease status. Osterman pointed to the “minimal Common Oncology Data Elements” (mCODE) interoperable data standard. Built on Fast Healthcare Interoperability Resources (FHIR) standards for exchanging health care data, mCODE leverages existing data standards.11 Osterman said that it enables clinicians and researchers to understand and describe a patient’s trajectory across the cancer care continuum. He noted that the concept of disease status in mCODE is defined as a clinician’s qualitative judgment on the current trend of a patient’s cancer—whether it is stable, worsening (progressing), or improving (responding), based on one or more sources of clinical evidence. While criteria exist for assessing disease status, such as the Response Evaluation Criteria in Solid Tumors (RECIST),12 it was decided during the development of mCODE that no existing data standard for disease status fit all cases, and this definition was proposed. Osterman explained that adoption of data standards has been a persistent challenge, and it remains difficult to extract data on disease status from the EHR. He added that this is partly a workflow issue, and mCODE is working with EHR vendors to improve the structured capture of disease status information.
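As described above, disease status is a clinician's qualitative judgment with three possible trends, supported by one or more sources of clinical evidence. A structured representation of that definition might look like the following Python sketch. It is an assumption-laden illustration: the class and field names are hypothetical and do not reproduce the actual mCODE FHIR profile or its value sets.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class DiseaseTrend(Enum):
    """The three trends named in the definition summarized above."""
    STABLE = "stable"
    WORSENING = "worsening (progressing)"
    IMPROVING = "improving (responding)"


@dataclass
class DiseaseStatus:
    """Hypothetical structured record of a clinician's disease status judgment."""
    patient_id: str
    trend: DiseaseTrend
    evidence_sources: List[str]  # e.g., ["imaging", "pathology"]

    def __post_init__(self) -> None:
        # The definition calls for one or more sources of clinical evidence.
        if not self.evidence_sources:
            raise ValueError("at least one source of clinical evidence is required")
```

Restricting the trend to a small enumerated set is what makes the field queryable, in contrast to a judgment recorded only in free text.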

___________________

10 See https://build.fhir.org/ig/HL7/genomics-reporting/ (accessed May 26, 2023).

11 See http://hl7.org/fhir/us/mcode/ (accessed May 26, 2023).

12 See https://recist.eortc.org/ (accessed August 31, 2023).

Osterman said that clinical trials are another area that requires attention. He identified a need to structure the inclusion and exclusion criteria in ClinicalTrials.gov such that they are interoperable with the patient data in the integrated diagnostics pipeline for clinical trial matching.13 Osterman also noted the opportunity to improve the interoperability of clinical protocols so they can readily be launched across multiple institutions, and the Clinical Trials Rapid Activation Consortium is working to make this a reality (Osterman et al., 2023).
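To illustrate why structured criteria matter for trial matching, the Python sketch below treats each inclusion or exclusion criterion as a test that can be evaluated directly against structured patient data. Everything in it is a hypothetical assumption made for illustration, including the trial identifier, the patient fields, and the criteria themselves; it does not reflect how ClinicalTrials.gov stores eligibility criteria or any existing matching service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Patient = Dict[str, object]  # hypothetical structured patient record


@dataclass
class Criterion:
    description: str
    test: Callable[[Patient], bool]  # True when the patient meets the criterion


@dataclass
class Trial:
    trial_id: str
    inclusion: List[Criterion]
    exclusion: List[Criterion]


def is_eligible(patient: Patient, trial: Trial) -> bool:
    """Eligible when every inclusion criterion holds and no exclusion criterion does."""
    meets_all = all(c.test(patient) for c in trial.inclusion)
    hits_any = any(c.test(patient) for c in trial.exclusion)
    return meets_all and not hits_any


# Hypothetical trial with made-up criteria.
example_trial = Trial(
    trial_id="EXAMPLE-001",
    inclusion=[Criterion("stage III or IV disease",
                         lambda p: p.get("stage") in {"III", "IV"})],
    exclusion=[Criterion("prior immunotherapy",
                         lambda p: bool(p.get("prior_immunotherapy")))],
)
```

When stage is recorded only as text in a clinical note, a test like the first criterion cannot be evaluated without manual review, which is the gap structured capture is meant to close.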

Lawrence Shulman, professor of medicine and director of the Center for Global Cancer Medicine at the University of Pennsylvania Abramson Cancer Center, emphasized the importance of structuring patient-reported outcomes and the clinician’s overall assessment of a patient for inclusion in integrated diagnostics. Mia Levy, chief medical officer of Foundation Medicine, pointed out that it can take many years for consensus standards to be developed and then implemented by vendors, and standards-setting bodies are generally volunteer organizations. She inquired how the development of standards might be accelerated. Osterman highlighted the differences between HL7’s approach in proposing standards for structured clinical genomics data and the approach taken by mCODE. The HL7 process was much slower because it was intended to be full scope at implementation and therefore necessarily considered all use cases during development, Osterman explained, whereas mCODE was developed based on two use cases, and it was understood from the start that it was a minimum set of common data elements that would not fully cover all use cases. He pointed out that the mCODE governance structure allows for rapid feedback from users and rapid addition of new data elements. Osterman added that mCODE follows the FHIR maturity model,14 and a committee of mCODE implementers works to ensure that new additions are compatible with current installations.

___________________

13 See https://clinicaltrials.gov/about-site/about-ctg (accessed October 24, 2023).

14 See https://confluence.hl7.org/display/FHIR/FHIR+Maturity+Model (accessed January 25, 2024).

Several speakers discussed the transition from manual to automated annotation and segmentation of image-based diagnostics. Hricak described the extensive and time-consuming manual work needed to annotate images and the need to assemble very large datasets of annotated images for training AI algorithms. She emphasized the importance of collaboration among radiology, pathology, and AI experts in developing and validating these algorithms. Hricak also called for a standard lexicon to convey the degree of diagnostic certainty. Elenitoba-Johnson highlighted infrastructure challenges to achieving automated annotation, such as the U.S. health care system’s lack of any standardized mechanism to pay for the expertise and effort spent on manually annotating images at the scale required to train AI algorithms. “Somewhere in our health care delivery system, we have to account for the individuals who are going to do that work,” emphasized Elenitoba-Johnson, rather than relying on the current patchwork funding.

Levy agreed that the lack of annotated images is a workflow problem, with no incentive for annotation to be completed. However, a drawback to incentivizing time spent on annotation could be that clinicians and health systems may be reluctant to lose that reimbursement in favor of automated annotation. Sohrab Shah, chief of computational oncology and Nicholls-Biondi Chair of the department of epidemiology and biostatistics at MSK, noted that “manual, precise … and thorough segmentation is completely unscalable to the volume that is needed to actually train the models.” He raised the possibility that semiautomated, large-volume datasets, although potentially less accurate, could be an option for training algorithms. He wondered whether the decisions of radiologists and pathologists could be captured as they are reviewing images in their routine workflow and whether that information could be used for training. Hricak pointed to self-supervised algorithm training (discussed further below).

EFFORTS TO DEVELOP, IMPLEMENT, AND USE INTEGRATED DIAGNOSTICS

Representatives from academic medical centers, industry, and large health care organizations shared their perspectives and lessons learned from efforts to develop, implement, and use integrated diagnostics in clinical practice.

Perspectives from Academic Medical Centers

Overcoming Cultural Barriers to Change

Gabriel Krestin, emeritus professor of radiology at Erasmus Medical Center, University Medical Center (MC), Rotterdam, shared lessons learned from implementing integrated diagnostics at Erasmus MC. To provide context, Krestin explained that the Netherlands has a highly regulated national health care system. Erasmus MC is the largest academic medical center in the Netherlands, with patient care organized into seven divisions, one of which is Diagnostics and Advice, incorporating the departments of pathology, laboratory medicine, medical imaging, and pharmacy.

Krestin said that the vision for Diagnostics and Advice is to implement an integrated approach that encompasses three key steps: requests for diagnostic testing are sent to a front office; tests are performed in the appropriate department; and a comprehensive, integrated, final report is provided to the multidisciplinary care team. He said a survey found that hospital leadership and information technology (IT) vendors supported this approach, but there was unexpected resistance from physicians. Krestin explained that pathologists expressed concerns about digitization turning pathology into a commodity and radiologists expressed concerns about additional workload and responsibilities. Referring clinicians expressed concerns about ceding control of diagnostic decision making for their patients.

Krestin explained that Erasmus MC began by implementing integrated diagnostics for several complex diseases for which the institution expected particular benefit for both patients and the health care system. However, referring physicians responded that integrated diagnostics were unnecessary, because they could consult clinical practice guidelines for complex diseases. Erasmus MC sought to understand how well guidelines were being followed for these types of diseases. Krestin cited a retrospective review of 604 cases in which patients had incidental adrenal findings; fewer than 15 percent of these cases followed Erasmus MC guidelines that called for both imaging and biochemical workups (de Haan et al., 2019). Retrospective reviews of guideline adherence for other scenarios yielded similar results. Even with this evidence of limited guideline adherence, “we couldn’t convince the clinicians that integrated diagnostics would be the way to go forward,” said Krestin.

A second attempt at implementing integrated diagnostics was to launch a pilot study to demonstrate how integrated diagnostics could improve workflow by addressing discrepancies between radiology and pathology findings before presenting a patient’s case to the multidisciplinary tumor board. Krestin provided an example showing agreement in diagnosis between radiology and pathology in approximately 75 percent of 89 patients with suspected lung cancer. An integrated approach led to reconciliation of an additional 20 percent of patients (unpublished data). Krestin pointed out that despite these results, hesitancy about integrated diagnostics among referring physicians persisted.

Krestin underscored that the main barrier to implementing integrated diagnostics was cultural (i.e., resistance from physicians). He said the necessary elements for successful implementation include transitioning from a process of multiple sequential orders to order sets (combinations of tests that support the determination of a comprehensive final diagnosis); development of an order management system that generates the order sets and defines the materials needed for testing; and structured, integrated reports delivering the combined results. Krestin added that Erasmus MC is now looking to integrate clinical and omics data for use in predictive analytics.
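The order-set concept Krestin described can be pictured as a small data structure. The following is a minimal sketch only, not a description of the Erasmus MC system: the class names, the lookup table, and the material labels are hypothetical, and the single example entry borrows the incidental adrenal finding scenario, for which the guidelines called for both imaging and biochemical workups.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TestOrder:
    """One diagnostic test within an order set (all fields are illustrative)."""
    name: str
    department: str
    material: str  # hypothetical label for what the test requires


@dataclass
class OrderSet:
    """A combination of tests ordered together to support one final diagnosis."""
    indication: str
    tests: list = field(default_factory=list)

    def departments(self):
        # Departments that must contribute to the integrated report.
        return sorted({t.department for t in self.tests})

    def materials(self):
        # Materials the order management system would need to arrange.
        return sorted({t.material for t in self.tests})


# Hypothetical catalog; a real system would hold many indications.
ORDER_SETS = {
    "incidental adrenal finding": OrderSet(
        indication="incidental adrenal finding",
        tests=[
            TestOrder("imaging workup", "medical imaging", "imaging slot"),
            TestOrder("biochemical workup", "laboratory medicine", "blood sample"),
        ],
    ),
}


def generate_order_set(indication):
    """Return the whole order set at once instead of sequential single orders."""
    return ORDER_SETS[indication]


order_set = generate_order_set("incidental adrenal finding")
print(order_set.departments())
print(order_set.materials())
```

In this sketch a single request yields every test and material needed, which is the contrast with multiple sequential orders that Krestin drew.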

Patient-Centric, Functional Alignment of Diagnostic and Administrative Components

“Integrated diagnostics is a functional alignment of the meaningful diagnostic and relevant administrative components for a specific patient,” said Jochen Lennerz, medical director of the Center for Integrated Diagnostics (CID) and associate chief of pathology at Massachusetts General Hospital. “It is not just the integration of multiple modalities.”

Lennerz described the work of CID, which aims to bridge the gap between clinical research and clinical practice. CID integrates a research laboratory, a clinical laboratory certified under the Clinical Laboratory Improvement Amendments15 and approved by New York State, faculty in pathology, and faculty in pathology informatics. CID runs approximately 14,000 clinical tests per year, with a baseline battery of tests and an agile infrastructure that facilitates both bringing new tests on board (including adopting payer policies) and removing tests.

Achieving diagnostic quality requires aligning the diagnostic components within the health care ecosystem, Lennerz explained. He described diagnostic quality as the sum of three elements: the quality of the diagnostic test itself, the quality of the diagnostic procedure (i.e., the execution of the test), and the quality of the diagnostic service (Lennerz et al., 2023). The integration process for a quality diagnostic test involves assessing the technology, determining financial sustainability (i.e., reimbursement by payers), and integrating it at the relevant laboratory.

As an example of implementing integrated diagnostics in practice, Lennerz described the testing approach to determine eligibility for targeted therapy among patients with lung cancer. The process involves collection of a biopsy sample, diagnosis by frozen section and fine needle aspiration, next-generation sequencing of extracted DNA, reporting of the genotyping results to the specialty pharmacy for dispensing of medication, and initiation of targeted therapy, which he said would ideally occur within 48 hours. In the example of initiating osimertinib16 therapy for patients who have lung cancer with EGFR mutations,17 the median time from the biopsy order to initiating therapy in a rapid specialty pharmacy cohort was 5 days compared to around 40 days in a nonrapid cohort (Dagogo-Jack et al., 2023). The potential of integrated diagnostics, he said, is “to work as seamlessly as possible to achieve your intended outcome.”

___________________

15 See https://www.cms.gov/medicare/quality/clinical-laboratory-improvement-amendments (accessed January 30, 2024).

16 Osimertinib is a kinase inhibitor used to treat or inhibit the formation of non-small-cell lung cancer. See https://medlineplus.gov/druginfo/meds/a616005.html (accessed September 1, 2023).

17 EGFR stands for epidermal growth factor receptor, and a mutation in this protein can cause uncontrolled growth, leading to cancer. See https://www.lung.org/lung-health-diseases/lung-disease-lookup/lung-cancer/symptoms-diagnosis/biomarker-testing/egfr (accessed September 1, 2023).

Despite frequent discussion about eliminating silos within the data infrastructure, Lennerz said “in our experience, integrated diagnostics works best if you have the siloed infrastructure but align all the components, including the administrative components, in a way that [is] harmonized for a specific workflow.”

Lennerz highlighted a variety of integrated diagnostics initiatives at Massachusetts General Hospital, including a cross-discipline and multimodality AI initiative in which diagnostic computed tomography (CT) scans are used to predict the molecular findings after biopsy and then, in a continuous feedback loop, those findings inform a continuously learning radiology model (Lennerz et al., 2023). He noted that a primary aim is to expedite authorization procedures.

Lennerz summarized that integrated diagnostics at CID value “individuals and interactions over processes and tools, sustainability over quick wins, specific journeys rather than general application, payer operations in addition to innovation-driven funding streams, [and] patient centricity rather than solely a scholarly exercise.”

Integrated Diagnostics as the Fourth Revolution in Pathology

Manuel Salto-Tellez, professor of integrative pathology at the Institute for Cancer Research, London (ICR) and director of the Integrated Pathology Unit at ICR and the Royal Marsden Hospital, posited that integrated diagnostics are the “fourth revolution in pathology,” following the introduction of immunohistochemistry in the 1980s, molecular diagnostics in the 2000s, and AI pathology solutions in the late 2010s (Salto-Tellez et al., 2019). He observed that, even with ideal application of genomic analysis, the number of patients with cancer who benefit from genome-targeted therapy remains low (Marquart et al., 2018), and although the performance of AI for digital pathology has been shown to improve with algorithm training, it appears to have plateaued (Echle et al., 2020). In his view, the next leap in pathology will likely not be another disruptive technology but an approach to integrating information for diagnostics and discovery of complex biomarkers.

Salto-Tellez defined integrated diagnostics as an “amalgamation of multiple analytical modalities, with evolved information technology, applied to a defined patient cohort, and resulting in a synergistic effect in the clinical value of the diagnostic tools” (Messiou et al., 2023). Current models of multimodal integration incorporate three components aligned with this definition: data modalities (the “what”), ML and integration analysis (the “how”), and opportunities for precision health (the “why”), and he cited five examples (Acosta et al., 2022; Cui et al., 2023; He et al., 2023; Lipkova et al., 2022; Lippi and Plebani, 2020). He suggested that a hierarchy for integration exists, considering the relative importance and timing of the information. In addition to providing opportunities for precision health, these models show that multimodal data integration can also support research and development, Salto-Tellez said, citing two studies of integrated diagnostic approaches to predicting response to therapy (Sammut et al., 2022; Vanguri et al., 2022). Salto-Tellez shared that the Royal Marsden Hospital and ICR have jointly launched the Integrated Discovery and Diagnostics initiative, which merges genomic, histologic, radiologic, and clinical data, health care economics data, and research and development data from clinical trials.

One key challenge for multimodal integration of data across clinical silos is the extent to which data need to be curated versus obtained in an automated fashion directly from the source, said Salto-Tellez. He noted that Royal Marsden Hospital and ICR approached 20 vendors to help address this challenge. Of the nine that responded, six said they could offer a holistic approach; they had higher technical capability and cost. Three vendors said they could not integrate everything but could offer a piecemeal process that could help (they had lower technical capability and cost).

Salto-Tellez also described work by a consortium of the Precision Medicine Centre of Excellence at Queen’s University Belfast, Roche, and Sonrai to improve early detection of colorectal cancer. The consortium has developed an integrated diagnostics workflow connecting the pathology and genomics workflows to support the research and development process. The informatics partner, Sonrai, has developed the data analytics algorithms that link the two workflows together at multiple junctures, such as one designed to optimize the selection of tumor material for molecular testing based on histologic staining and digital pathology and to automatically macrodissect the tumor material. Salto-Tellez noted that validation studies of the integrated algorithms are underway.

Improving Diagnostics Through Close Partnership of Radiology and Pathology

Mitchell Schnall, Eugene P. Pendergrass professor of radiology and chair of the department of radiology at the University of Pennsylvania Perelman School of Medicine, observed that diagnostics has long been part of the art of medicine, often requiring the clinician to gather information from multiple sources to reach a diagnosis. However, the exponentially expanding volume of diagnostic data makes it impractical for one individual to integrate all of these inputs. Schnall said that data science can be leveraged to integrate a patient’s diagnostic information and present it to the clinician to review and act on, similar to how the cockpit of a plane presents all the necessary information to a pilot (Schnall et al., 2023).

Schnall discussed using partnerships to address silos, emphasizing that a closer partnership is needed between the two largest diagnostic disciplines: radiology and pathology. He described this as a “natural partnership” because they have complementary expertise and approaches and face similar clinical and operational challenges. For example, pathology brings expertise in biology, informatics, and quality systems to the partnership, Schnall explained, and radiology brings expertise in anatomy and physiology, data science and IT, and workflow.

Schnall outlined the University of Pennsylvania’s approach to establishing a closer partnership between radiology and pathology, highlighting strategies in five tactical areas:

- Joint research includes building a joint data science program focusing on image analytics and overall diagnostic analytics to improve efficiency, defining the value of diagnostic outcomes, and developing novel combined modality diagnostics.

- Harmonization of systems includes using a single medical record system, the same picture archiving and communication system, and developing diagnostic decision support.

- Education includes cross-department house staff rotations, combined modality fellowship training, and a joint informatics/innovation fellowship.

- Joint clinical activities include codevelopment of diagnostic pathways, joint diagnostic consultation services, and promoting interaction through colocation of select pathology and radiology services.

- Integrated activities include the management and communication of results; decision support; and IT, billing, and marketing activities.

Schnall also said that the University of Pennsylvania recently launched the Center for AI and Data Science for Integrated Diagnostics (AI2D). Codirected by faculty in radiology and pathology, AI2D focuses on addressing the challenges of integrated diagnostics. “Diagnostics is critical to precision medicine,” Schnall concluded, and “closer integration of radiology and pathology will improve the diagnostic process.”

Integrated Diagnostics for Earlier Detection of Cancer

Garry Gold, Stanford Medicine Professor of Radiology and Biomedical Imaging and chair of the Department of Radiology at Stanford University, discussed the wide-ranging applications of integrated diagnostic approaches to early detection of cancer, when treatment is more likely to be successful.

Gold first described several studies by the late Sam Gambhir, a founder of the Canary Center at Stanford for Cancer Early Detection and pioneer in the integration of radiology and pathology. In one example, Gambhir assessed whether integrating FDG-PET imaging with assessment of circulating tumor cells (Nair et al., 2013) or circulating tumor microemboli (Carlsson et al., 2014)18 in patients with non-small-cell lung cancer could improve diagnostic accuracy over using radiology or pathology markers alone. Gambhir also initiated the Baseline Study,19 a longitudinal study collecting baseline clinical, imaging, molecular, and other data from 10,000 participants to characterize what “normal” values are and changes that occur in association with disease.

Gold highlighted a novel approach known as “theragnostics,” which combines diagnostic testing with therapy, such as the integration of diagnostic radiology and targeted molecular radiotherapy. The Canary Center is also developing new in vitro diagnostic (IVD) tests for early cancer detection, including the Exosome Total Isolation Chip, which can detect extracellular vesicles in clinical fluid samples (Liu et al., 2017); a magnetic wire inserted into a vein to capture circulating tumor cells that have been immunolabeled with magnetic particles (Vermesh et al., 2018); and an approach that correlates detection of volatile organic compounds in breath with PET-CT (Vermesh et al., 2022).

Gold highlighted wearable and in-home monitoring technologies as another opportunity for integrated diagnostics. One example of a wearable technology is a microneedle patch on the arm that integrates sampling and molecular testing to continuously monitor for proteins in interstitial fluid. Gold also noted that integrated diagnostics are being developed in other fields, such as combining neuroimaging and histopathology data to assess traumatic brain injury.

Achieving the Vision of Integrated Diagnostics

Several panelists discussed potential actions that could help realize the vision of integrated diagnostics in precision cancer care. “It’s early days in integrated diagnostics,” Salto-Tellez said, with a need for “significant and specific investment in this area.” Lennerz encouraged an increased focus on regulatory science and actively engaging with regulators on issues related to practical implementation (e.g., the data and performance metrics needed for radiologists to fully integrate AI) (Lennerz et al., 2022). Schnall stressed that broad collaboration among academia, industry, regulators, and payers is necessary to achieve effective integrated diagnostics. Gold said the next generation of scientists and clinicians needs to be able to communicate across disciplines and implement an integrated approach to diagnostics. “We won’t be able to truly integrate until we have people who understand the entire landscape,” he said.

___________________

18 Circulating tumor cells are cells that have separated from primary or metastatic tumors. Circulating tumor microemboli are multicellular aggregates that include circulating tumor cells, and both of these can seed metastatic cancer in disparate sites in the body (Tao et al., 2022).

19 See https://medicine.stanford.edu/annual-report-2018/the-project-baseline-study.html (accessed September 1, 2023).

Several participants discussed the challenges of integrating the vast volumes of data generated by consumer wearables and in-home monitoring technologies. Shah raised the issue of access to these data by hospital systems or clinicians, noting that wearables are often commercially developed, and the developers often have their own interests in the data collected. Gold agreed and noted the regulatory, payer, and patient privacy concerns to be addressed.

Perspectives from Industry

Opportunities to Leverage AI in Diagnosis

Dorin Comaniciu, senior vice president of Artificial Intelligence and Digital Innovation at Siemens Healthineers, discussed several opportunities to leverage AI for integration and use of the massive volumes of health data generated by medical technologies and devices. He said that computational power and bandwidth for information exchange are increasing exponentially, including low-cost supercomputing power available at the point of care.

Comaniciu said that one approach to handling the ever-increasing volume of data is AI self-supervised learning.20 He cited a study of self-supervised learning using 100 million medical images, which found that it resulted in improved accuracy, more robust results, accelerated training, and improved scalability (Ghesu et al., 2022). Comaniciu noted that it would take 35 years for one person to look at each of the 100 million images for 10 seconds.
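The core idea of self-supervised learning, in which the training target is taken from the data rather than from a human annotation, can be shown with a toy example. The sketch below is illustrative only and is unrelated to the models in the cited study: it uses synthetic three-value ramps in place of medical images, hides the middle value, and trains a two-weight linear predictor by stochastic gradient descent. The function names, signal model, and learning rate are all assumptions made for this example.

```python
import random


def make_signal(rng):
    """Return a synthetic 3-sample linear ramp (a stand-in for an image patch)."""
    start = rng.uniform(0.0, 1.0)
    slope = rng.uniform(-0.5, 0.5)
    return [start, start + slope, start + 2 * slope]


def train_masked_predictor(steps=5000, lr=0.1, seed=0):
    """Learn to predict the hidden middle sample from its visible neighbors.

    No annotations are used: the "label" is the masked part of the input itself.
    """
    rng = random.Random(seed)
    w_left, w_right = 0.0, 0.0
    for _ in range(steps):
        left, hidden, right = make_signal(rng)
        prediction = w_left * left + w_right * right  # model sees only the visible parts
        error = prediction - hidden                   # compared against the masked value
        # Gradient step on the squared error (constant factor folded into lr).
        w_left -= lr * error * left
        w_right -= lr * error * right
    return w_left, w_right


w_left, w_right = train_masked_predictor()
print(f"learned weights: left={w_left:.3f}, right={w_right:.3f}")
```

Because the middle of a linear ramp is the average of its neighbors, both weights should settle near 0.5. The point of the sketch is that this structure is recovered from unlabeled data alone, which is what makes the approach attractive when manual annotation cannot scale.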

The results of self-supervised learning would need to feed into a harmonized user interface, which Comaniciu referred to as a “diagnostic cockpit,” with access to clinical information, the results of AI analyses, advanced data visualization, actionable reporting, and research and innovation. Comaniciu pointed out that structuring data has been the general approach to managing complex clinical data so it can be integrated. However, AI has the capability to shift the model from one in which the clinicians sort through structured data to one in which clinicians ask questions (comparable to Internet searching). He said large language models will support connectivity and access, provide interpretation support, and aid in synthesizing information. In addition, this workspace could incorporate virtual assistants capable of responding to questions about the patient. To illustrate this, Comaniciu shared a video of a conversation with an AI radiologist assistant that responded to successive questions about the patient’s testing and findings.

___________________

20 Self-supervised learning is an ML technique that teaches a model to predict hidden parts of an input using other parts of the input that are visible to the model. It can be used to perform tasks, such as image comprehension and object detection (Rani et al., 2023).

Comaniciu presented several examples of AI-powered diagnostics for oncology, such as cancer risk prediction, brain tumor analysis and metastasis detection and tracking, lung cancer screening via chest CT, assessment of pulmonary lesions via chest X-ray, breast cancer screening, prostate MRI, and analysis of cardiotoxicity. He also highlighted AI tumor fingerprinting, an approach under development to create a digital biopsy of molecular changes in tumors by integrating digital pathology with proteomic, metabolomic, and genomic profiling. Another fingerprinting approach uses an image-based deep neural network to optimize radiation therapy by stratifying patients as responders or nonresponders to guide individualized dosing (Lou et al., 2019; Randall et al., 2023).

Looking to the future, Comaniciu anticipated patients would have a “health digital twin,” which he characterized as a “lifelong, personalized, physiological model that is updated with each scan exam.” Simulations with a digital twin could inform treatment decisions, for example, or guide individualized preventive actions, he noted.

Building in Feedback Loops to Improve Quality of Care

Torbjörn Kronander, president and chief executive officer of Sectra AB, compared diagnostics to telecommunications in that both must detect signals in noise. Diagnostic testing detects signals of disease.

Kronander outlined some of the key features of IT systems for health environments. All relevant data should be available in the same place at the same time because clinicians do not have time to go back and forth among systems to find patient information, he said. Deep integration with AI is needed, and “AI should be applied both to radiology and pathology at the same time,” he said. The challenge, however, is that these data often reside in different systems. Uniform user interfaces can increase patient throughput, he noted, because moving to a user interface with a different format requires time to “reconfigure your brain” and adds “mouse miles” (computer mouse movements needed to engage with interfaces). Kronander added that unified tumor boards would help ensure that patient treatment is not delayed while discordant conclusions from pathologists and radiologists are resolved. Early identification and resolution of discordance among specialties can help the tumor board to work more efficiently, he noted.

Kronander explained that Sectra is working to integrate “radiology, pathology, and AI in one single IT system.” There is close integration with information in the patient’s EHR, and Sectra is developing prototype structured reports that combine radiology, pathology, and concordance activities in one report sent to the EHR (he noted that the Sectra system currently only interfaces with the Epic EHR system). He added that Sectra is also collaborating with the University of Pennsylvania School of Medicine to develop a single user interface that incorporates pathology, radiology, and genomics.

Sectra’s approach to integrated diagnostics includes radiology, pathology, and omics data combined with current population health and probability data, Kronander said. He noted that probability data are often overlooked but are important for establishing a diagnosis. He explained that a key element of the Sectra integrated diagnostics model is feedback loops to support a learning system. Data from the structured diagnostic report in the EHR (where the final interpretation/outcome is entered) are also fed back to radiology, pathology, and omics functions and used to update population health and probability data.
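Why probability data matter for establishing a diagnosis can be illustrated with Bayes’ rule. The following is a minimal sketch under stated assumptions, not a description of the Sectra system: the function name is hypothetical, and the sensitivity, specificity, and prevalence values are invented for illustration.

```python
def post_test_probability(prevalence, sensitivity, specificity):
    """Probability of disease given a positive test result (Bayes' rule)."""
    true_positive = sensitivity * prevalence
    false_positive = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive / (true_positive + false_positive)


# Hypothetical test characteristics, held fixed while prevalence varies.
SENSITIVITY = 0.90
SPECIFICITY = 0.90

results = {
    prevalence: post_test_probability(prevalence, SENSITIVITY, SPECIFICITY)
    for prevalence in (0.01, 0.10, 0.50)
}

for prevalence, probability in results.items():
    print(f"prevalence {prevalence:.2f} -> post-test probability {probability:.3f}")
```

With these assumed values, the same positive result corresponds to a post-test probability of roughly 8 percent at 1 percent prevalence, 50 percent at 10 percent prevalence, and 90 percent at 50 percent prevalence. A feedback loop that keeps population probability data current would therefore change how an identical finding should be interpreted.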

The vision for integrated diagnostics, Kronander said, is “faster and better diagnosis, improved patient care, built in feedback loops [for] improving quality of health care, and at lower cost and less complexity.” He referred to Gambhir’s prediction that “[t]oday’s radiologists and pathologists will be replaced by diagnosticians plus AI” (Gambhir, 2018). Achieving this vision, Kronander said, will require “common basic training in decision theory for radiologists and pathologists.”

Kronander said that changes in reimbursement are also needed to achieve the vision of integrated diagnostics. He suggested that payers should reimburse clinicians for making the diagnosis rather than conducting specific diagnostic procedures. He acknowledged that such changes take time and observed that the U.S. system of reimbursement can be a barrier to the timely advancement of health care practice. Elenitoba-Johnson added that one challenge is coming to an agreement regarding “who is going to do the work and who is going to pay for it.” He emphasized that incentives need to be aligned to promote the adoption of integrated diagnostics into clinical practice.

Harnessing the Power of Data for Diagnosticians, Clinicians, and Patients

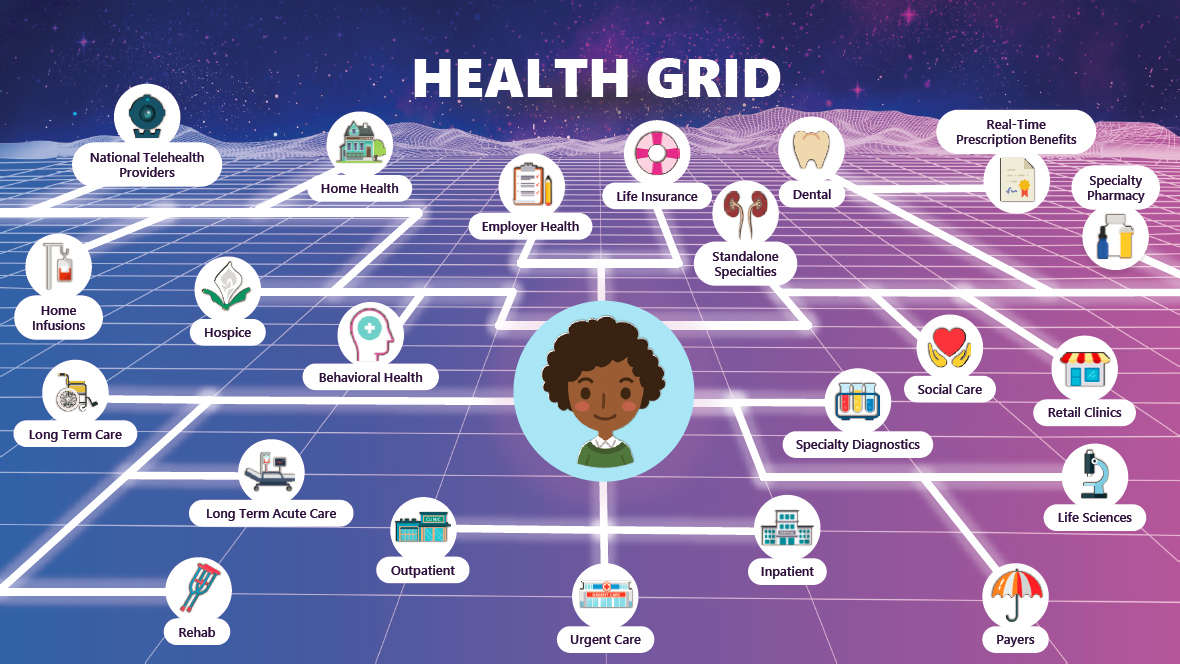

Nick Trentadue, director of laboratory and diagnostics informatics at Epic, identified some settings in which patient health care data are generated (see Figure 2). Traditionally, test results would be reported to the patient’s clinician, who would share them with the patient and discuss treatment options. Today, the results are also provided immediately to patients. Trentadue shared a screenshot of an actual report received by a patient awaiting cancer screening results. These reports, written by and for medical professionals, can be confusing and frightening to patients, he cautioned. Trentadue highlighted the need for tools that can better convey test results to patients.

SOURCE: Nick Trentadue presentation, March 6, 2023.

Trentadue said integrated diagnostics need to be considered from three primary perspectives: diagnosticians, treating clinicians, and patients. For diagnosticians, a key element supporting the diagnostic process is the bidirectional exchange of interoperable data across systems. Interoperability requires that health data vendors adhere to industry standards for exchanging health information across systems (e.g., the HL7 standards for structured clinical genomics data discussed by Osterman). For treating clinicians, combining the results from the diagnosticians with AI and ML creates models that can support clinical decision making and the development of care plans that meet individual patient needs. For patients, Trentadue said that timely updates via the patient portal should leverage integrated data to provide results (e.g., laboratory, pathology, radiology), easily understood interpretations, and next steps for patient care.

Trentadue emphasized that for integrated diagnostics to optimize the power of data, the information should be discrete and actionable and follow standards that enable interoperability and support bidirectional flow among the care team, regardless of data system: this ensures that “the right person, at the right time, has all the appropriate information for diagnosis as well as for treatment.” Trentadue also emphasized the importance of ontology21 when developing ML models, because a lack of clear ontology could lead to unexpected outcomes from the models. He likewise stressed the importance of actionable decision support, in which diagnosticians, treating clinicians, and patients have the information they need, readily available, to support decision making.

Integrating Patient Goals and Treatment Outcomes Data

Aanand Naik, professor and the Nancy & Vincent Guinee Chair of Geriatrics at the University of Texas Health Science Center, Houston, School of Public Health and Consortium on Aging, highlighted the need to incorporate patient goals and health outcomes (including the potential for adverse effects) into integrated diagnostics. Kronander added that continuous, intensive monitoring of treatment outcomes is needed because of the increasing number of treatment options. If one therapy is not achieving an expected outcome, then a change may be necessary. This type of monitoring will also drive the use of diagnostics, he said. Comaniciu noted that it is often not possible to determine the best treatment approach for a given patient based on available guidelines. He anticipated that computer simulations could eventually be able to rank the top treatment choices for a patient to consider.

___________________

21 An ontology, in the context of computer science, describes the relevant concepts, relationships, and specifications that are important for modeling a particular domain (Gruber, 2016).

Transparency of AI Models

Osterman said clinicians are reluctant to base their decision making on recommendations from closed-box AI models. Trentadue responded that transparency is essential when presenting information gleaned from AI, ML, or algorithms. Kronander noted the ongoing research on explainable AI,22 emphasizing that AI should not be fully independent; humans need to be in the loop to identify instances when AI does not work.

Perspectives from Large Health Care Organizations

Precision Oncology in VA Medical Centers

Gil Alterovitz, director of the National Artificial Intelligence Institute (NAII) of the U.S. Department of Veterans Affairs (VA), said the vision of NAII is “to lead the way in trustworthy AI” with the goal of “ensuring the health and well-being of our veterans.” NAII is building an organization focusing on AI research, development, and training through a network of AI sites to “pilot, iterate, and scale” AI initiatives within the VA, Alterovitz said; it has four sites and several pilot programs.

The VA is well situated to take the lead in advancing AI in health care, Alterovitz said. The VA is the largest U.S. integrated health care system, serving more than 9 million patients. The VA has a repository of more than 10 billion medical images and a database of more than 800,000 genomes linked to medical records. In addition, the VA has a broad reach, with more than 1,200 facilities across all U.S. states and territories. He added that most clinicians in the United States have undertaken part of their training at a VA facility.

Alterovitz said the “VA is the largest provider of oncology services in the country, making oncology care a priority.” He shared several examples of how the VA is bringing advances in technology and precision care to veterans. As part of the federal Cancer Moonshot initiative, the VA has established a public–private partnership with IBM to enable precision care for patients with stage 4 cancer who have exhausted available treatment options. Researchers use IBM Watson AI to analyze a patient’s tumor for mutations to identify potential options for targeted therapy. Alterovitz said that this approach has expanded patient access to precision oncology therapy; more than one-third of the tumor samples are from patients who live in rural areas, where access to such therapies may be limited.

___________________

22 See https://en.wikipedia.org/wiki/Explainable_artificial_intelligence (accessed January 25, 2024).

Another example is the VA’s Lung Precision Oncology Program,23 a network of 23 hub locations working to “increase access to screening and improve early detection” using data analytics to evaluate access and quality. Alterovitz noted that more than 5,000 veterans received molecular testing through this program as of May 2021.

For VA research programs, such as the Lung Precision Oncology Program, Alterovitz said participants undergo a consent process to share their data and a terms and conditions process by which veterans agree that their data can be used for operational purposes. Depending on who will be using the data and how, data use agreements and contracts may also ensure patient privacy and data security in accordance with patient preferences. He noted that it is better to consider potential data uses and secure patient consent at the beginning rather than having to reconsent patients later for additional uses.

Alterovitz also discussed the use of AI-assisted screening to detect precancerous polyps during routine colonoscopy. He noted that “every 1 percent increase in the precancerous polyp detection rate reduces future risk of death by 5 percent.” Studies of this AI-assisted colorectal cancer screening tool have demonstrated a 14 percent increase in the rate of precancerous polyp detection.

Alterovitz noted that the VA has an app store with a range of apps to help veterans access their medical information, receive care (e.g., testing, medications), or find appropriate clinical trials based on information in their medical record.24 In closing, he said that the VA is looking for opportunities to leverage its data gathering and analysis capacity in collaborations that advance the development and use of AI in precision cancer care.

Precision Oncology at University of California Health

Atul Butte, the Priscilla Chan and Mark Zuckerberg Distinguished Professor and director of the Baker Computational Health Sciences Institute at the University of California, San Francisco, and chief data scientist for University of California Health (UCH), provided an overview of UCH. The University of California (UC) system has more than 227,000 employees and 280,000 students per year across its 10 campuses. UCH incorporates UC’s 6 medical schools and 14 other health professional schools (nursing, pharmacy, veterinary, dental, and public health) and includes five National Cancer Institute (NCI)-designated comprehensive cancer centers and five institutes funded by the NIH National Center for Advancing Translational Sciences (NCATS) Clinical and Translational Science Award program. UCH employs approximately 5,000 faculty physicians and 12,000 nurses and trains half the medical students and residents in California. It receives $2 billion in funding from NIH and brings in more than $16.5 billion in clinical operating revenue.

___________________

23 See https://www.research.va.gov/programs/pop/lpop.cfm (accessed September 1, 2023).

24 See https://mobile.va.gov/appstore (accessed May 26, 2023).

Butte said one original goal was to transform UCH into a single accountable care organization, facilitated by UC’s approach to data warehousing. The UCH-wide central data warehouse facilitates broad access to deidentified, structured clinical data from across all six academic health centers. A warehouse is still maintained at each of the six academic medical centers, which facilitates access to “deidentified, limited, and identified structured and unstructured clinical data” and additional information (e.g., images, genomes, notes). The central database has longitudinal, structured data going back to 2012. This includes data from 437 million patient encounters, 1.17 billion procedures, 1.5 billion medication orders, 48 million device uses, and 5.2 billion laboratory tests and vital signs, from nearly 9.2 million people. Data are merged with California state data and the California death index, Butte noted.

More than 100,000 patients with cancer receive care at a UCH comprehensive cancer center each year, noted Butte. The same UC-wide data warehouse contains demographic information, including information on a patient’s cancer diagnosis, insurance coverage and social risk, and more than 32,000 cancer genomic reports. Butte said these data can be queried to address a wide range of questions, such as whether patients who have a particular type of cancer with a specific gene mutation are receiving appropriate and equitable cancer treatment.
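As a rough illustration of the kind of question Butte described, the sketch below counts, by insurance group, how many patients with a given cancer type and mutation have a record of a targeted therapy. It is a minimal example under stated assumptions, not the UCH warehouse or its schema: the table names, column names, codes, and the handful of synthetic rows are all hypothetical and exist only so the query can run against an in-memory SQLite database.

```python
import sqlite3


def build_toy_warehouse():
    """Create a tiny in-memory database with a hypothetical schema and synthetic rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE person (person_id INTEGER PRIMARY KEY, insurance_group TEXT);
        CREATE TABLE diagnosis (person_id INTEGER, cancer_code TEXT);
        CREATE TABLE genomic_report (person_id INTEGER, mutation_code TEXT);
        CREATE TABLE treatment (person_id INTEGER, therapy_code TEXT);
        """
    )
    conn.executemany(
        "INSERT INTO person VALUES (?, ?)",
        [(1, "commercial"), (2, "commercial"), (3, "public"), (4, "public"), (5, "public")],
    )
    conn.executemany(
        "INSERT INTO diagnosis VALUES (?, ?)",
        [(1, "LUNG"), (2, "LUNG"), (3, "LUNG"), (4, "LUNG"), (5, "BREAST")],
    )
    conn.executemany(
        "INSERT INTO genomic_report VALUES (?, ?)",
        [(1, "MUT_A"), (2, "MUT_A"), (3, "MUT_A"), (4, "MUT_A"), (5, "MUT_A")],
    )
    conn.executemany(
        "INSERT INTO treatment VALUES (?, ?)",
        [(1, "TARGETED_A"), (2, "TARGETED_A"), (3, "TARGETED_A")],
    )
    return conn


def treatment_rate_by_group(conn, cancer_code, mutation_code, therapy_code):
    """Return {insurance_group: (n_patients, n_treated, share_treated)} for the cohort."""
    sql = """
        SELECT p.insurance_group,
               COUNT(DISTINCT p.person_id) AS n_patients,
               COUNT(DISTINCT t.person_id) AS n_treated
        FROM person p
        JOIN diagnosis d
          ON d.person_id = p.person_id AND d.cancer_code = ?
        JOIN genomic_report g
          ON g.person_id = p.person_id AND g.mutation_code = ?
        LEFT JOIN treatment t
          ON t.person_id = p.person_id AND t.therapy_code = ?
        GROUP BY p.insurance_group
        ORDER BY p.insurance_group
    """
    rows = conn.execute(sql, (cancer_code, mutation_code, therapy_code)).fetchall()
    # The inner joins guarantee n_patients >= 1 for every returned group.
    return {group: (n, k, k / n) for group, n, k in rows}


if __name__ == "__main__":
    warehouse = build_toy_warehouse()
    for group, stats in treatment_rate_by_group(
        warehouse, "LUNG", "MUT_A", "TARGETED_A"
    ).items():
        print(group, stats)
```

The left join keeps untreated patients in the denominator, which is what allows a gap between groups to show up. A real analysis would also need clinically validated cohort definitions and adjustment for confounders, which this sketch does not attempt.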

Butte explained that the UCH academic medical centers use the Observational Medical Outcomes Partnership Common Data Model. He noted that a systemwide committee meets every 2–4 weeks to harmonize data elements. Certain analyses require curation of unstructured clinical notes and data in the warehouses. Because thousands of curators are not available, Butte said, UCH is exploring the use of an AI chatbot to curate deidentified clinical notes. He described one situation in which ChatGPT25 was asked to summarize all cancer biomarkers in a complicated deidentified clinical note. The AI system was able to find and summarize data for all of the biomarkers, including one that the UCH oncology curators had missed.

___________________

25 ChatGPT stands for Chat Generative Pre-trained Transformer and was created by OpenAI. It is a large language model–based chatbot. See https://openai.com/blog/chatgpt (accessed September 1, 2023).

UC researchers (including graduate students) who want access to UCH-wide data first write and optimize their queries in their campus system and then use a cloud-based tool to run the queries in the central UCH-wide warehouse. The goal is to facilitate “safe, respectful, regulated, responsible use of clinical data,” explained Butte. He said that data in the UCH-wide central data warehouse are deidentified and can therefore be shared in compliance with the Privacy Rule26 promulgated under the Health Insurance Portability and Accountability Act (HIPAA), and no institutional review board (IRB) approval is needed to conduct studies with these data. UCH has also determined that tumor mutation data are nonidentifiable and can be shared. He also noted that every patient is required to sign a terms and conditions of service document, which outlines how the university may, in compliance with HIPAA, use their data. A governance process is also in place to review external requests for the use of UCH data.

Training AI requires vast amounts of data, but competition among health systems is a barrier to data sharing across the country, Butte noted. While acknowledging the challenges, Butte contended that the goal is achievable, offering the NCATS/NIH National COVID Cohort Collaborative27 as an example. Butte described how NCATS successfully motivated more than 100 health institutions to contribute deidentified data for all of their patients with COVID-19 to a central, third-party repository to support research on the disease. Data are publicly accessible through dashboards. Butte emphasized that this collaborative has led to nearly 500 data projects and 53 publications thus far. Butte pointed out that NCI does not require similar data sharing among its funded comprehensive cancer centers, and he advocated for the creation of a common data warehouse that could enable the development of precision medicine tools for patients with cancer.

Butte said that interoperability of health data is achievable, and a business need might drive the implementation of data sharing, but a culture that supports data sharing is also needed. Converting unstructured to structured data remains a challenge, but there is a potential role for AI. Other challenges noted by Butte include ongoing resistance to cross-campus clinical trials despite incentives and central IRBs and a lack of precision cancer care options for patients.

IMPROVING EVIDENCE GENERATION FOR INTEGRATED DIAGNOSTICS

Many speakers discussed approaches to generating evidence to inform and support evaluating, implementing, and scaling integrated diagnostics.

___________________

26 The Privacy Rule, promulgated under the Health Insurance Portability and Accountability Act of 1996 (HIPAA), establishes national standards to protect individuals’ medical records and other individually identifiable health information (collectively defined as “protected health information”) and applies to health plans, health care clearinghouses, and those health care providers that conduct certain health care transactions electronically (see https://www.hhs.gov/hipaa/for-professionals/privacy/index.html).

27 The National COVID Cohort Collaborative is also known as N3C. See https://ncats.nih.gov/n3c (accessed September 1, 2023).

A Cancer Risk Management Learning System for Evidence Generation

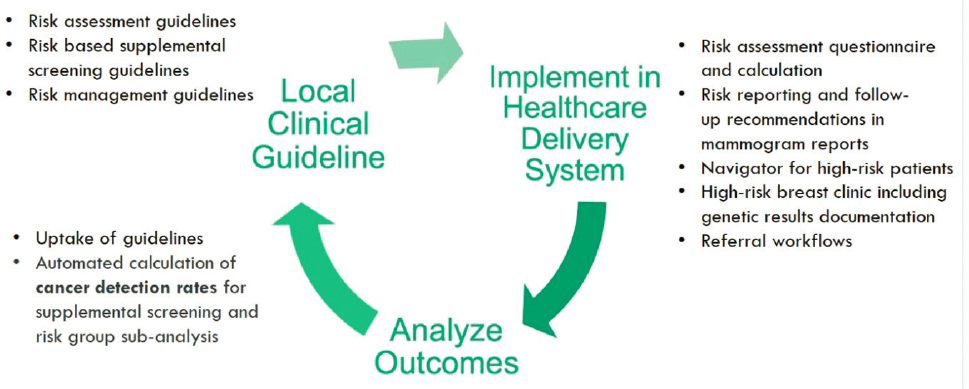

Levy shared opportunities to leverage a learning health care system to evaluate integrated diagnostics. In such a system, she explained, local clinical guidance is developed based on national guidelines and scientific literature and implemented in the health care delivery system. Outcomes data generated as part of routine care are analyzed and used to inform updates to the local guidelines. Information also feeds back to research and publication in the scientific literature. This cycle results in iterative improvement to the care delivery system.

Levy described Rush University’s experience implementing a learning health care system for breast cancer risk management to address the challenges of evidence generation for breast cancer screening. Levy noted that the patient population eligible for breast cancer screening is large and diverse. While there are conflicting clinical practice guidelines for breast cancer screening, all guidelines recommend that screening begin with mammography. Mammography is highly regulated, and the use of structured mammography and other screening data documentation in the EHR has been increasing, Levy noted. There are also well-defined outcome measures for screening that can be extracted from the EHR. Although these could be used to evaluate new screening methods in prospective randomized trials, Levy pointed out the many challenges, from ensuring adequate patient accrual to the rapid evolution of screening technologies, and suggested a learning health care system could help generate evidence to evaluate integrated diagnostics.

In support of this model, Levy said the Rush system has structured data based on the Breast Imaging Reporting and Data System from more than 500,000 screening and diagnostic breast imaging studies dating from 2008. These data are from a diverse patient population (less than 50 percent White) and span a wide range of ages (from under 30 to more than 90, with most data from individuals aged 40–70). The amount and types of structured data available continue to increase, including breast density data (available since 2015) and cancer risk assessment information (from 2020 onward). “As our structured data has grown, so have our efforts to leverage this data to learn more and more from the experiences of every patient,” Levy said.

Levy outlined the elements of Rush’s breast cancer risk management learning health care system: implementing new clinical guidelines for risk assessment, risk-based supplemental screening, and risk management; implementing risk assessment tools, reporting, and patient navigation; and analyzing guideline uptake, distribution of risk, and automated calculation of cancer detection rates from EHR data (see Figure 3).
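The automated calculation of cancer detection rates mentioned above can be sketched in a few lines. The example below assumes the common screening-audit definition of the cancer detection rate (cancers detected per 1,000 screening examinations) and stratifies it by risk category. The record fields, category labels, and rule for what counts as a detected cancer are hypothetical, and nothing here describes how Rush's system actually computes the measure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningExam:
    """One screening exam as it might be extracted from structured EHR data (hypothetical fields)."""
    risk_category: str     # e.g., "average" or "high"; labels are illustrative
    cancer_detected: bool  # assumed to mean a confirmed cancer attributed to this exam


def detection_rate_per_1000(exams):
    """Return {risk_category: cancers detected per 1,000 screening exams}."""
    counts = defaultdict(lambda: [0, 0])  # category -> [number of exams, number of cancers]
    for exam in exams:
        counts[exam.risk_category][0] += 1
        counts[exam.risk_category][1] += int(exam.cancer_detected)
    return {
        category: 1000 * cancers / n_exams
        for category, (n_exams, cancers) in counts.items()
    }


if __name__ == "__main__":
    # Synthetic records for illustration only; these are not data from the workshop.
    exams = (
        [ScreeningExam("average", True)] * 4
        + [ScreeningExam("average", False)] * 996
        + [ScreeningExam("high", True)] * 3
        + [ScreeningExam("high", False)] * 197
    )
    print(detection_rate_per_1000(exams))
```

Stratifying by risk category is one way such a measure could feed back into guideline review, because it shows whether supplemental screening is concentrated where detection yield is highest.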

Levy noted that “breast screening is not a moment in time.” Integrated diagnostics need to account for the patient’s longitudinal screening experience (e.g., comparing new images to prior images). Furthermore, integrated diagnostics need to extend beyond test interpretation to include informing the next steps in patient management, she emphasized.

SOURCE: Mia Levy presentation, March 6, 2023.