Digital Model–Based Project Development and Delivery: A Guide for Quality Management (2025)


CHAPTER 5

Components of Review

5.1 Introduction

A successful review process requires a well-organized model development environment and workflow. This chapter elaborates on model environment components that agencies can establish to implement a robust quality management process. The quality management process can be completed within design authoring software or model review applications. Implementing recommendations from this chapter will streamline the review process. In addition to model development aspects and specific model review tools, agencies can look at the following standards for guidance:

- National BIM Standard-United States (NBIMS-US), and

- ISO 19650, Parts 1 and 2.

NBIMS-US provides standards, guidelines, templates, and other resources for implementing a stable production environment. These standards are continually supported and expanded. Agencies may need to review future releases, which are scheduled for publication every three years, and adopt them as appropriate. ISO 19650 Parts 1 and 2 are international standards that support the consistent management of information on BIM-enabled projects. Agencies can use these standards to help develop a collaborative, model-based production environment.

5.2 Model Development Standards

A standards-based approach to model development provides the design team and reviewers with a structured framework for planning, creating, and verifying model-based deliverables. These standards form the basis for modeling standards reviews and model integrity reviews. Clearly defined naming conventions, model content, and element information yield finished products that are consistent and predictable. The suggestions in this section guide the development of the foundational components needed for a stable review product.

5.2.1 Information Modeling Standards

Information modeling standards specify the LOD and information needed for a specific purpose (i.e., LOIN). (See Section 6.3.2 for suggestions on implementing information standards across an agency.) Information modeling standards are composed of the following key elements, which can be used to establish frameworks for specific types of reviews:

- Geospatial positional accuracy and point density requirements for base survey mapping.

- Object-based design elements, organized following a specific MET.

- Specifications for LOIN for a particular milestone deliverable.

- Software configuration specifications, including naming conventions, symbology of the drawing geometry (e.g., points, lines, annotation), and 2D and 3D object libraries. (See Sections 5.2.3 and 5.2.4.)

- Requirements for software packages and versions for model development, if applicable.

Specific guidance for survey reviews includes the following:

- Identify which review information documents are not part of the agency’s library of publications, and prepare a plan to develop similar standards. These documents are a prerequisite for consistent and rigorous reviews of survey models. (Examples of review information documents are listed in Section 4.3.1; Appendix E provides survey review procedures.)

- Assess the agency’s current geomatics or survey manual for its application of modern data collection methods and the level of positional accuracy needed to reduce survey staking in construction. This manual is an authoritative publication that governs standards for collecting, processing, and validating survey models, and it is imperative that the manual be kept current with industry standards.

Specific guidance for modeling standards includes the following:

- Update CADD standards to expand or revise libraries for symbology, standardized 2D and 3D objects, requirements for naming conventions, and standardized drawing production processes. Model-based design software is configured to provide libraries of intelligent, object-based design elements that design teams can use as building blocks for their designs. These intelligent objects make up the pieces of an engineering system that can be grouped by element types. For example, an engineered drainage system consists of culverts, drains, open channels, retention and detention systems, and storm sewers (Figure 9). Each element group can be broken down into individual elements, such as inlet controls, pipes, and outlet controls. With this standard in place, an agency can create a checklist that is specific to the drainage system model review.

- Set up a MET for any discipline that does not have one yet. The MET provides a basis for the LOIN. The LOIN required for design elements increases gradually as the project design advances toward the final deliverable, which is issued for construction. Appendix B can be used as a starting point when piloting digital delivery. Creating the MET may entail preparing a breakdown of LOIN, which is composed of LOD and level of information (LOI). These components define the completeness of a particular design element within the overall project design. An example of these specifications for drainage system elements is shown in Table 8.
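The drainage example above, in which a system breaks down into element groups and then into individual elements, can be sketched as a small hierarchy that expands into review checklist items. This is a minimal sketch that assumes Python 3.9 or later. The class and function names are hypothetical. The storm sewer elements are illustrative assumptions and do not come from an agency standard.

```python
from dataclasses import dataclass, field


@dataclass
class ElementGroup:
    """A group of related model elements within an engineered system."""
    name: str
    elements: list[str] = field(default_factory=list)


@dataclass
class EngineeredSystem:
    """An engineered system (e.g., drainage) made up of element groups."""
    name: str
    groups: list[ElementGroup] = field(default_factory=list)


def build_checklist(system: EngineeredSystem) -> list[str]:
    """Flatten the system > group > element hierarchy into checklist items."""
    items = []
    for group in system.groups:
        for element in group.elements:
            items.append(f"[ ] {system.name} / {group.name} / {element}")
    return items


# Hypothetical breakdown; an agency would take this from its own standards.
drainage = EngineeredSystem(
    "Drainage",
    [
        ElementGroup("Culverts", ["Inlet control", "Pipe", "Outlet control"]),
        ElementGroup("Storm sewers", ["Inlet", "Pipe", "Outlet structure"]),
    ],
)

for item in build_checklist(drainage):
    print(item)
```

Because the checklist is generated from the same breakdown that designers model against, any change to the standard element groups flows directly into the review checklist.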

5.2.2 Common Data Environment

Chapter 3 outlines how the CDE supports processes for approval and records management. The CDE provides a space for collaborative production of federated models that bring together information containers from multiple sources and parties. A CDE workflow protects the security and quality of information throughout production, review, and delivery. Agencies may want to investigate CDE enhancements or expansions that better support protocols for 3D model and model-based reviews. Considerations for a well-configured CDE that fully supports review protocols include

- Approval and verification workflows that can be configured to support project methods and procedures,

- Notifications and alerts that aid collaborative workflows and establish an audit trail,

- Permissions controls that are customizable and provide gated CDE workflows, and

- Workflow engines to automate review and approval processes.

Source: PennDOT, with permission.

Table 8. LOD/LOI specification by milestones.

| Model Element | Milestone | Min. LOD | 2D/3D | Min. LOI |
|---|---|---|---|---|
| Outlet structure (drainage) | 30% model | 100 | 2D | Use 2D shapes to determine location and measure area. |
| Outlet structure (drainage) | 60% model | 200 | 3D | Provide 3D solid to represent structure and location. |
| Outlet structure (drainage) | 90% model | 300 | 3D | Refine 3D solid to demonstrate details and interaction with pipes; attach attributes. |
| Outlet structure (drainage) | Final model | 300 | 3D | Refine 3D solid to demonstrate details and interaction with pipes; attach attributes. |
| Inlet protection (drainage) | 30% model | N/A | N/A | N/A |
| Inlet protection (drainage) | 60% model | 100 | 2D | Use 2D shapes to determine location and measure area. |
| Inlet protection (drainage) | 90% model | 300 | 3D | Model in 3D to output volume quantity. |
| Inlet protection (drainage) | Final model | 300 | 3D | Model in 3D to output volume quantity; attach attributes. |
| Pipe (utilities) | 30% model | 100 | 2D | Use 2D lines to determine location and measure length. |
| Pipe (utilities) | 60% model | 200 | 3D | Provide 3D solid to represent pipe. |
| Pipe (utilities) | 90% model | 300 | 3D | Provide 3D solid to represent pipe; attach attributes. |
| Pipe (utilities) | Final model | 300 | 3D | Provide 3D solid to represent pipe; attach attributes. |
| Conduit (utilities) | 30% model | N/A | N/A | N/A |
| Conduit (utilities) | 60% model | 100 | 2D | Use 2D lines to determine location and measure length. |
| Conduit (utilities) | 90% model | 300 | 3D | Provide 3D solid to represent conduit; attach attributes. |
| Conduit (utilities) | Final model | 300 | 3D | Provide 3D solid to represent conduit; attach attributes. |

Agency processes should also incorporate the CDE's management of information containers (e.g., folders and files) and their associated metadata. Within a CDE, information containers can be tagged with revision states that identify where each container currently sits in the review workflow. Suggested permission-controlled states include work in progress, shared, published, and archived. A folder's revision state is changed once a review process is complete, and the change can be documented through transmittals. Metadata should also be associated with individual files throughout the review process, as discussed in Section 3.2.2.
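Permission-controlled revision states can be sketched as a small state machine in which each allowed transition is tied to a role and recorded like a transmittal. This is a minimal sketch under stated assumptions. It uses the four states named above. The role names and the set of allowed transitions, including returning a shared container to work in progress, are hypothetical. A production CDE would enforce these rules through its own workflow engine and permission settings.

```python
from enum import Enum


class State(Enum):
    WORK_IN_PROGRESS = "WIP"
    SHARED = "Shared"
    PUBLISHED = "Published"
    ARCHIVED = "Archived"


# Hypothetical allowed transitions and the role permitted to make each one.
TRANSITIONS = {
    (State.WORK_IN_PROGRESS, State.SHARED): "design_lead",
    (State.SHARED, State.WORK_IN_PROGRESS): "reviewer",  # returned with comments
    (State.SHARED, State.PUBLISHED): "approver",
    (State.PUBLISHED, State.ARCHIVED): "cde_manager",
}


class Container:
    """An information container (folder or file) with a revision state."""

    def __init__(self, name: str):
        self.name = name
        self.state = State.WORK_IN_PROGRESS
        self.transmittals: list[tuple[State, State, str]] = []

    def move_to(self, target: State, role: str) -> None:
        required_role = TRANSITIONS.get((self.state, target))
        if required_role is None:
            raise ValueError(f"{self.state.value} -> {target.value} is not an allowed transition")
        if role != required_role:
            raise PermissionError(f"{role} may not move {self.name} to {target.value}")
        # Record the change (similar to a transmittal) before applying it.
        self.transmittals.append((self.state, target, role))
        self.state = target
```

Keeping the transition table as data, separate from the code, mirrors how the allowed states and permissions would be documented in agency CDE protocols.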

Agency-defined CDE protocols should be documented in quality management documents. Project-specific protocols should be outlined by the project team in the BEP and project quality plan.

5.2.3 Naming Conventions

Consistent naming conventions should be adopted for the folders and files that make up digital models. Logical names help users identify and retrieve data efficiently when reviewing models. Most agencies have standardized file and folder naming conventions for traditional files and deliverables. Agencies should review these conventions, paying special attention to 3D model files and delivered models. Adapting the conventions to ISO 19650 standards and best practices will promote consistency and help prevent confusion and duplication.

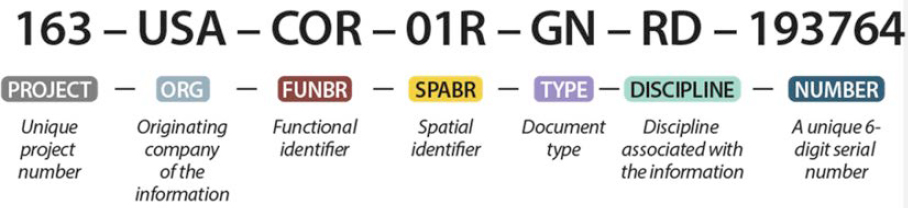

The National Annex to ISO 19650-2 sets out naming conventions for information containers that can be adapted based on project scale and complexity. The standard defines a series of field codes separated by delimiters to create a unique string. These naming conventions follow a specific format that provides consistency and scalability for different types of projects. Figure 10 depicts field code types and descriptions that can be expanded through revision metadata and tracked in the CDE.
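The field-code approach can be sketched as two operations: composing a name from an ordered list of fields joined by a delimiter, and parsing a name back into those fields. This is a minimal sketch. The field list is loosely patterned on the National Annex fields, but it is an assumption, and an agency would substitute the codes from its own standard (see Figure 10). The example code values are hypothetical. The sketch also assumes that no field code contains the delimiter itself.

```python
# Hypothetical ordered field list; replace with the agency's own field codes.
FIELDS = ["project", "originator", "volume", "level", "type", "role", "number"]
DELIMITER = "-"


def compose_name(**codes: str) -> str:
    """Join field codes, in the standard order, into a container name."""
    missing = [f for f in FIELDS if f not in codes]
    if missing:
        raise ValueError(f"missing field codes: {missing}")
    return DELIMITER.join(codes[f] for f in FIELDS)


def parse_name(name: str) -> dict[str, str]:
    """Split a container name back into its field codes."""
    parts = name.split(DELIMITER)
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, found {len(parts)}")
    return dict(zip(FIELDS, parts))


name = compose_name(project="PRJ01", originator="ABC", volume="ZZ", level="XX",
                    type="M3", role="C", number="0001")
print(name)  # PRJ01-ABC-ZZ-XX-M3-C-0001
```

Because parsing is the inverse of composition, the same field definition can drive both file creation and automated checks that a delivered file name follows the convention.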

While an agency need not conform to specific ISO naming conventions, it can reorganize the field codes to accommodate its current definition and provide a standard, yet customizable, naming practice. Agencies can further customize naming conventions to make it easier to track a file’s milestone and status in the quality management workflow.

Agencies also need to consider naming restrictions, such as string length and the use of specific characters. Certain CDEs limit the number of characters in a file path. Some platforms or operating systems treat specific characters and words as invalid in file and folder names. For example, Windows prohibits characters such as the colon, question mark, and asterisk in file names. It also reserves device names such as CON, PRN, AUX, and NUL, which software could otherwise misinterpret. Agencies should review these limitations before finalizing a standard naming convention.
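The restrictions above can be screened automatically before files are uploaded. This is a minimal sketch that uses Windows rules as the example platform. It checks the characters Windows forbids in names, its reserved device names (CON, PRN, AUX, NUL, COM1 to COM9, LPT1 to LPT9), and trailing spaces or periods. The 250-character path limit is a placeholder assumption, and an agency would use the limit documented for its own CDE.

```python
import re

MAX_PATH_LENGTH = 250  # placeholder; use the limit documented for the agency's CDE
INVALID_CHARS = re.compile(r'[<>:"/\\|?*]')  # characters Windows forbids in names
RESERVED_NAMES = {
    "CON", "PRN", "AUX", "NUL",
    *(f"COM{i}" for i in range(1, 10)),
    *(f"LPT{i}" for i in range(1, 10)),
}


def check_name(folder_path: str, file_name: str) -> list[str]:
    """Return a list of naming problems (an empty list means the name passes)."""
    problems = []
    full_path = f"{folder_path}/{file_name}"
    if len(full_path) > MAX_PATH_LENGTH:
        problems.append(f"path is {len(full_path)} characters (limit {MAX_PATH_LENGTH})")
    if INVALID_CHARS.search(file_name):
        problems.append("file name contains a reserved character")
    stem = file_name.split(".")[0].upper()
    if stem in RESERVED_NAMES:
        problems.append(f"'{stem}' is a reserved device name on Windows")
    if file_name != file_name.strip() or file_name.endswith("."):
        problems.append("file name has leading/trailing spaces or a trailing period")
    return problems
```

Returning every problem, rather than stopping at the first one, lets a reviewer or an upload script report all the fixes a name needs at once.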

5.2.4 Software Configuration Development and Management

Design authoring software lets agencies create a configuration that standardizes how product resources are used to design and draw objects in accordance with specific modeling and design standards. Defining an agency software configuration gives users the resources to repeat the same process, providing consistency across files and projects. A well-defined software configuration also allows manual or automated review processes to check models against the defined standards.

Software configurations are installed along with a software application to coordinate symbology standards (e.g., line styles, labeling), the design standards file (e.g., superelevation calculations), and resources (e.g., drawing or sheet layouts). Terms used to describe software configurations vary across software platforms and include “workspaces,” “support configurations,” “state kits,” and “country kits.”

Implementing configurations for agency software is challenging because software, platforms, and operating systems undergo continual updates. Agencies need to be aware of these updates and plan accordingly, particularly when updates require new versions of software configurations. Detailed processes to implement and manage these versions can support file compatibility, reduce the risk of file corruption or data loss, and provide consistency between projects and collaborations.

Implementing an agency software configuration also offers numerous advantages, such as

- Defining customized, repeatable processes and workflows using modeling standards,

- Promoting consistent design and modeling standards between users, and

- Providing the ability to implement process control and checks against the configuration.
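A check against the configuration can be sketched as a comparison of each element's symbology with the rules defined for its level or layer. This is a minimal sketch. The level names, color indexes, line styles, and weights in `CONFIG` are hypothetical. It also assumes that element properties have already been exported from the design authoring software into simple dictionaries.

```python
# Hypothetical agency configuration: level name -> required symbology.
CONFIG = {
    "DR_Pipe_Storm": {"color": 3, "line_style": "Continuous", "weight": 2},
    "DR_Inlet": {"color": 3, "line_style": "Continuous", "weight": 1},
}


def check_symbology(elements: list[dict]) -> list[str]:
    """Report elements whose level or symbology departs from the configuration."""
    findings = []
    for el in elements:
        rules = CONFIG.get(el["level"])
        if rules is None:
            findings.append(f"{el['id']}: level '{el['level']}' is not in the configuration")
            continue
        for prop, expected in rules.items():
            actual = el.get(prop)
            if actual != expected:
                findings.append(f"{el['id']}: {prop} is {actual!r}, expected {expected!r}")
    return findings
```

A reviewer could attach the returned findings to the modeling standards checklist as a record of the check.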

Most agencies have defined software configurations for their design authoring software and platforms. Additional information and configuration management may be required to facilitate the quality management of 3D models created in these platforms. Defining the following aspects of a software configuration will advance modeling standards reviews for 3D models:

- Property sets describe an object or element, either graphically or nongraphically, and can be assigned automatically or manually. Examples include property sets that identify pay items or reference alignments. Property sets incorporated into software configurations can be used to generate reports, such as quantity reports organized by pay item, or to establish rules for clash detection.

- Standardization of 2D or 3D cells or components (e.g., standard details for and elements of drainage structures, bridges, and traffic signals) allows designers to utilize predefined design standards that reviewers know are correct and up to date.

- While IFC usage on transportation projects is still in its infancy, agencies can begin mapping property and dataset information within their software configuration to IFC property sets.
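A quantity report organized by pay item, as described for property sets above, can be sketched as a grouping over element properties. This is a minimal sketch. It assumes that each element's property set has been exported with hypothetical `pay_item`, `quantity`, and `unit` keys, and the pay item numbers in the usage below are made up. Elements without a pay item are returned separately so that a reviewer can follow up on them.

```python
from collections import defaultdict


def quantity_report(elements):
    """Sum quantities by pay item; also return IDs of elements lacking a pay item."""
    totals = defaultdict(float)
    units = {}
    missing = []
    for el in elements:
        props = el.get("properties", {})
        item = props.get("pay_item")
        if item is None:
            missing.append(el["id"])  # flag for the reviewer instead of silently skipping
            continue
        totals[item] += props["quantity"]
        units.setdefault(item, props["unit"])
    report = {item: (round(qty, 3), units[item]) for item, qty in sorted(totals.items())}
    return report, missing


elements = [
    {"id": "P1", "properties": {"pay_item": "PI-601", "quantity": 120.0, "unit": "LF"}},
    {"id": "P2", "properties": {"pay_item": "PI-601", "quantity": 80.5, "unit": "LF"}},
    {"id": "I1", "properties": {"pay_item": "PI-605", "quantity": 1, "unit": "EA"}},
    {"id": "X1", "properties": {}},
]
report, missing = quantity_report(elements)
```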

Adoption will take several years, but the transportation industry is shifting toward wider usage of open data standards, which can be used to collaborate outside of proprietary software and sidestep versioning issues. While IFC, associated data dictionaries, and information delivery manuals are being developed for transportation projects, agencies have an opportunity to set up their software configurations for future implementation. Building out the proper software configuration and documentation is important for the quality management process, and it allows for future automation of design standards.

5.2.5 Model Management Tools

Model management establishes parameters for documenting how designs are modeled. When designers include documentation of model contents with their models, reviewers can quickly understand what is included (or omitted) and what needs to be inspected. Agency-defined modeling standards give designers requirements to follow and give reviewers criteria to validate against. Organizing and documenting a model's contents improves transparency and accessibility for reviewers as they complete the review types discussed in Chapter 4. Two model management tools that help reviewers understand a model's content are the BEP and the MET.

As explained in Section 2.2.3, the BEP is provided to reviewers for their reference to help them understand the project needs and implementation process for model generation and management. Reviewers oversee the model management procedures outlined in the BEP and confirm that they are implemented correctly. Agencies can refer to several BEP guidance documents within the architecture, engineering, and construction industry when developing or expanding their own. The NBIMS-US BEP guide is one example agencies can use.

As defined in Section 3.3.1, the MET helps manage how a model develops and communicates expectations for interdisciplinary coordination at each milestone. To incorporate a MET into the formal review process, agencies can

- Maintain a standardized MET template that is scalable for different project types.

- Provide detailed MET documentation to modelers and reviewers.

- Utilize open-source software and file formats for the MET template, such as a fillable PDF form or CSV (comma-separated values).
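A CSV-based MET template can be read and checked with standard tools. This minimal sketch encodes the minimum LOD values from Table 8 as a CSV template. It then runs two checks: that each element's required LOD never decreases between milestones, and that the LOD a reviewer assigns to each element meets the minimum for a given milestone. The column layout and function names are hypothetical. The sketch also assumes that the reviewer's assessed LOD for each element is available as a simple mapping.

```python
import csv
import io

MILESTONES = ["30%", "60%", "90%", "Final"]

# Minimum LOD values from Table 8 as a CSV MET template ("N/A" = not yet required).
MET_CSV = """element,30%,60%,90%,Final
Outlet structure,100,200,300,300
Inlet protection,N/A,100,300,300
Pipe,100,200,300,300
Conduit,N/A,100,300,300
"""


def load_met(text):
    """Parse the CSV template into {element: {milestone: min LOD or None}}."""
    met = {}
    for row in csv.DictReader(io.StringIO(text)):
        met[row["element"]] = {m: (None if row[m] == "N/A" else int(row[m])) for m in MILESTONES}
    return met


def check_met_progression(met):
    """Flag elements whose minimum LOD decreases from one milestone to the next."""
    issues = []
    for element, lods in met.items():
        required = [lods[m] for m in MILESTONES if lods[m] is not None]
        if any(later < earlier for earlier, later in zip(required, required[1:])):
            issues.append(element)
    return issues


def check_model(met, milestone, model_lods):
    """Compare the LOD assessed for each model element with the MET minimum."""
    findings = []
    for element, lods in met.items():
        minimum = lods[milestone]
        if minimum is None:
            continue
        actual = model_lods.get(element)
        if actual is None:
            findings.append(f"{element}: missing from model (min. LOD {minimum})")
        elif actual < minimum:
            findings.append(f"{element}: LOD {actual} below min. LOD {minimum}")
    return findings
```

For example, at the 60% milestone, a model whose pipes are still at LOD 100 and that contains no conduit would produce one finding for each of those elements.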

5.3 Review Tools and Job Aids

Using a 3D model (as opposed to paper or PDF) changes the medium for sharing information, but the information requirements for assets being constructed remain the same as in a traditional plan set. Reviewers need a sound process for verifying that a model contains the design information necessary for the contractor and meets design criteria and calculations (e.g., clearance requirements, sight distances, element thicknesses and dimensions, transition rates, and material types). This section describes review tools and job aids that can streamline 3D model reviews. These include checklists, generated reports, and automated tools, all of which can serve as quality artifacts, as well as review software.

5.3.1 Checklists

Checklists are a foundational quality management tool that provides reviewers with clear criteria—in a specified order—and becomes a quality artifact once the review process is complete. Checklists are just one type of job aid that can be used as part of a comprehensive review process with other review artifacts. Agency checklists standardize centralized review processes, mitigating risks that have been identified based on the current business context. Agencies must review their checklists periodically as part of the PDCA cycle.

Most agencies have checklists for design reviews that reflect the practices and standards defined in design manuals. A challenge that many agencies currently face is how to develop checklists for modeling standards reviews and model integrity reviews of 3D models. These reviews draw on the CADD/BIM manual, model development standards, LOIN standards, and model integrity checks that can be automated, such as clash detection for overlapping elements.

Creating a single quality management checklist that encompasses all disciplines and model review types would be difficult. Defining checklists for specific review types used at different points in the project review timeline provides standardization and flexibility. Agencies may already have discipline or milestone review checklists that are used for traditional quality management processes. Developing multiple checklists allows agencies to supplement or revise what already exists.

Reviewers will continue to inspect the same design content, but information is organized differently within a 3D model than it is on paper. For example, verifying station and offset information on a plan sheet requires checking annotations at points required by the checklist. To examine those same points in a 3D model, software tools are used to reveal location data of selected points in the model environment. Thus, the process of finding information differs even though the checklist content does not change. Agencies can expand their current discipline-specific checklists to include aspects unique to 3D models (e.g., element attributes) or incorporate review tools available in the 3D environment (e.g., specifying clash detection rules and routines to assess constructability and verify compliance with design standards).

Checklists are static documents, separate from model files. Users have to go outside a model to look at reviewer comments and see how they were addressed. When used as the quality management record, reviewers and CDE managers need to act with care to verify that checklists and models are stored appropriately in relation to each other. If a checklist contains a link or file path to a model, it needs to be updated when files are moved.
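A CDE manager could screen checklists for stale model links with a short script such as the one below. This is a minimal sketch under a hypothetical assumption: each checklist row stores the model path relative to a CDE root folder, and that folder is accessible as a file system. Many CDEs expose files only through their own interfaces, in which case the same check would need to use that platform's tools.

```python
from pathlib import Path


def find_broken_links(checklist_rows, cde_root):
    """Return checklist rows whose model path no longer resolves under the CDE root."""
    root = Path(cde_root)
    return [row for row in checklist_rows if not (root / row["model_path"]).exists()]
```

Running a check like this after files are moved or republished helps keep the checklist and the model it documents linked as a single quality record.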

Some types of 3D model–review checklists that agencies may not have developed yet include the following:

- Federated model checklist confirms that data from all disciplines are properly packaged into a single information container that can be exchanged without data loss. It lists the information container breakdown structure requirements for referencing information models into a single information container, all of which need to be verified before the data within models is reviewed. Compliance with CDE standards is verified here. The checklist can outline the reference architecture, file structure, file formats, and file naming conventions, or it can point reviewers to any sections of the standards where these rules are described.

- Modeling standards checklist confirms that data within each discipline-specific model complies with BIM/CADD standards. This checklist follows the MET, so reviewers can work through each element in the table and confirm compliance with LOIN and metadata requirements. It is used to verify seed/template files and coordinate and unit systems; any associated software configurations should also be included. The checklist can itemize these requirements or point reviewers to any sections of the agency standards where they are dictated.

- Model integrity checklist confirms the completeness of model structure content, including geometry and surface accuracy, without consideration of design calculations. These checklists can be general or discipline specific. General checks include identification of gaps and overlaps between models, gaps and overlaps between model elements, duplicate elements, and disturbances across elements. Discipline-specific checks include evaluating surface density and spacing for template drops. To help reviewers inspect model elements systematically, the checklist should list the potential model elements or reference the project MET.

- Model element and detailing checklist confirms the completeness of review by providing an ordered list that guides model assessment. When working through a plan set, reviewers know they are done once they have reviewed every page. However, 3D models consist of model elements, saved views, annotated details, notes, and supplemental documents. The design team can create a high-level list of these items, making use of the MET and BEP for reviewers to check against and mark complete after reviewing all individual elements in a category. For example, a checklist would not list every standard note in a general notes section, but it may include a category for checking all notes. An agency may define an implementation process for reviewers to check these notes by exporting a PDF, reviewing each note individually, and then marking that the review of the notes category is complete. As 3D modeling becomes more common, more agencies will have a standard list of items included in discipline-specific models, which both designers and reviewers can check against.

- Surface/terrain checklist confirms that proposed and existing models are constructed from sufficient data, without anomalies or voids, and meet the required accuracy for intended use. Checklists should point to standards that control data requirements, such as survey, CADD, and roadway design manuals, as well as unique documentation typically included on a project, like survey reports, property information, and project design layout. The checklist should map a systematic method to work through model elements and document review outcomes on each element.

- Constructability checklist confirms model elements can be constructed without issue. Checklists should map out a systematic approach for evaluating each element by utilizing or mirroring the MET. Each element’s interior and boundary can be inspected to determine if either one clashes spatially with other elements or if other elements will obstruct construction at any point.
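Two of the general model integrity checks listed above, duplicate elements and overlaps between elements, can be screened using axis-aligned bounding boxes. This is a minimal sketch, and the element structure it uses is hypothetical. It treats two elements as duplicates when they share the same type and an identical bounding box. It flags overlaps whenever bounding boxes intersect by more than a tolerance, which makes it a coarse first pass only. Clash detection tools compare actual geometry, so flagged pairs would still need inspection. The pairwise loop is also too slow for large models.

```python
from itertools import combinations


def bbox_overlap(a, b, tol=0.0):
    """True if boxes (xmin, ymin, zmin, xmax, ymax, zmax) overlap by more than tol on every axis."""
    return all(min(a[i + 3], b[i + 3]) - max(a[i], b[i]) > tol for i in range(3))


def integrity_check(elements, tol=0.001):
    """Return (duplicate pairs, overlapping pairs) of element IDs."""
    duplicates, overlaps = [], []
    for a, b in combinations(elements, 2):
        if a["type"] == b["type"] and a["bbox"] == b["bbox"]:
            duplicates.append((a["id"], b["id"]))
        elif bbox_overlap(a["bbox"], b["bbox"], tol):
            overlaps.append((a["id"], b["id"]))
    return duplicates, overlaps
```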

Appendix F contains sample quality artifacts and checklists.

5.3.2 Generating Reports

Traditional plan sets contain sheets with tabular data and information, such as alignment data, bridge rebar tables, bearing seat elevations, and drainage tables for pipes. In a model-based environment, this information is developed within a 3D model and can be extracted through generated reports for review and verification. These outputs can be reviewed as standalone documents or imported into analytical design tools to verify that a model matches the design.

Reports can be generated through design authoring or design review software. These software packages typically allow for limited report customization.

Additional types of reports can be generated to identify nonconforming elements based on design or modeling standards. When review status is recorded as an attribute within property sets, these reports can also track whether requested changes have been addressed. Computer-generated reports are much faster than a lengthy, manual, element-by-element check.
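A report of nonconforming elements can be sketched as a rule applied to attributes extracted from the model. This minimal sketch flags storm pipes whose computed slope falls below a minimum. The 0.5 percent minimum and the field names are placeholders, not an agency standard. The status value written to each finding stands in for the property-set attribute that would track resolution.

```python
MIN_SLOPE = 0.005  # placeholder: 0.5 percent minimum slope, not an agency standard


def pipe_slope(pipe):
    """Slope (ft/ft) from upstream and downstream inverts over pipe length."""
    return (pipe["upstream_invert"] - pipe["downstream_invert"]) / pipe["length"]


def nonconforming_pipes(pipes, min_slope=MIN_SLOPE):
    """List pipes whose slope is below the minimum."""
    report = []
    for p in pipes:
        s = pipe_slope(p)
        if s < min_slope:
            report.append({"id": p["id"], "slope": round(s, 4), "status": "Nonconforming"})
    return report
```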

Agencies can define the review process to require that designers provide generated reports along with 3D model deliverables or to establish workflows for reviewers to generate reports themselves. If reviewers need to generate reports using specific software, additional core competencies and training should be provided.

5.3.3 Automated Tools

Like generated reports, automated software tools provide a faster way of conducting reviews than manual methods. Several tools are included in design authoring, design review, and construction software that check infrastructure models against design and CADD standards. These automated tools enhance the efficiency of design teams and reviewers. Reviewers can use software outputs to document whether a model complies with standards or use a checklist while running these automated software tools to document the model’s compliance (or lack thereof). Examples of these tools include

- Automated tools embedded in design authoring software, such as drive-through simulations, tolerance checks against design standards (e.g., AASHTO horizontal and vertical curve values), compliance with CADD standards, and clash detection tools.

- Automated tools embedded in design review software, such as clash detection analysis in Autodesk Navisworks or Bentley Infrastructure Cloud.

- Automated tools within some BIM software can check design compliance using proprietary files.

- Automated tools to validate data schema and information requirements. The new IDS standard from buildingSMART can be used to check alphanumeric information requirements in IFC files, but it does not verify compliance of geometric information. The IDS standard does not check proprietary files at this time, but software vendors could use the standard methodology to verify that exports from their software comply with IFC formatting and information requirements. The level of work required to use the IDS standard to check proprietary files in this way has not been explored.

- Construction tools, such as Trimble Business Center’s surface checking tools and International Roughness Index prediction tools for validating pavement surface models.
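A tolerance check against AASHTO horizontal curve values can be sketched with the Green Book minimum radius equation in U.S. customary units: R_min = V² / [15(0.01 e_max + f_max)], where V is the design speed in mph, e_max is the maximum superelevation rate in percent, f_max is the maximum side friction factor, and R is in feet. This is a minimal sketch. The function names are hypothetical, the curve radii in the usage are illustrative, and e_max and f_max must come from the agency's design criteria and the applicable AASHTO tables.

```python
def min_radius_ft(design_speed_mph, e_max_percent, f_max):
    """Minimum horizontal curve radius (ft), AASHTO point-mass equation, U.S. customary."""
    return design_speed_mph ** 2 / (15 * (0.01 * e_max_percent + f_max))


def check_curves(curves, design_speed_mph, e_max_percent, f_max):
    """Return IDs of curves whose radius is below the computed minimum."""
    limit = min_radius_ft(design_speed_mph, e_max_percent, f_max)
    return [c["id"] for c in curves if c["radius_ft"] < limit]


# Illustrative inputs: 50 mph, e_max = 6 percent, f_max = 0.14 gives about 833 ft.
curves = [{"id": "C1", "radius_ft": 700}, {"id": "C2", "radius_ft": 1000}]
print(check_curves(curves, 50, 6, 0.14))  # ['C1']
```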

5.3.4 Software Applications for 3D Model Reviews

Although a number of software applications had been developed for 3D model reviews at the time of writing, software for reviewing 3D models of transportation projects is still in its infancy. This makes it difficult to train reviewers or to generate buy-in for and acceptance of 3D models. While current technology has some limitations, the development of 3D model–review tools is rapidly advancing. Agencies should identify a review application that can be integrated within the CDE and technology stack, is compatible with different file types, and performs well with datasets of varying sizes.

When selecting a 3D model–review application to conduct the review types outlined in Chapter 4, agencies should consider the following functional requirements:

- Reference framework,

- 2D views (planar sections),

- Data visualization, and

- Comment resolution functionality.

3D models of transportation projects need a reference framework for geospatial coordination within the review software. Similar to requirements for a 2D plan set review, reviewers need the ability to check station and offset information tied to an alignment or another linear referencing system. Review software applications must also let users inspect project datums, project coordinates, and projections.
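The station and offset query that review software performs can be sketched for a simple alignment. This is a minimal sketch under stated assumptions. The alignment is a polyline of tangent segments only, with no curves or spirals, and it has no duplicate consecutive vertices. Offsets are positive to the right of the direction of stationing, which is a common convention but not a universal one. The function name is hypothetical.

```python
import math


def station_offset(alignment, point, start_station=0.0):
    """Return (station, offset) of a point relative to a tangent-only polyline alignment.

    Offsets are positive to the right of the direction of stationing.
    """
    px, py = point
    best = None  # (distance, station, offset) for the closest segment so far
    cumulative = start_station
    for (x0, y0), (x1, y1) in zip(alignment, alignment[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len = math.hypot(dx, dy)
        # Parameter of the perpendicular foot, clamped to the segment.
        t = max(0.0, min(1.0, ((px - x0) * dx + (py - y0) * dy) / seg_len ** 2))
        fx, fy = x0 + t * dx, y0 + t * dy
        dist = math.hypot(px - fx, py - fy)
        if best is None or dist < best[0]:
            cross = dx * (py - y0) - dy * (px - x0)  # > 0 when the point is to the left
            offset = math.copysign(dist, -cross) if cross != 0 else 0.0
            best = (dist, cumulative + t * seg_len, offset)
        cumulative += seg_len
    return best[1], best[2]


alignment = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
print(station_offset(alignment, (110.0, 40.0)))  # (140.0, 10.0)
```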

Review software should be capable of providing model information in 2D and 3D views. 3D models can be rotated to capture different viewing angles, but reviewers—especially those new to 3D model reviews—still depend on 2D perspectives to examine details within a 3D model. 2D views should be generated from a 3D model and may include roadway or drainage profiles, cross sections, and planar sections from any angle. Agencies can define standard 2D views that need to be provided by the designer within review software, or they may document procedures for reviewers to interrogate the 3D models to create the correct 2D views.
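A planar section from any angle can be sketched as the intersection of a triangulated surface with a plane. A vertical plane across the alignment yields a cross section. This is a minimal sketch that assumes the surface is a plain list of triangles with (x, y, z) vertices. It returns 3D line segments, which a viewer would then project into 2D section coordinates. A vertex that lies exactly on the plane is treated as being on the positive side.

```python
def section_triangles(triangles, origin, normal):
    """Intersect triangles with the plane through `origin` with `normal`; return 3D segments."""

    def signed_dist(p):
        return sum((p[i] - origin[i]) * normal[i] for i in range(3))

    segments = []
    for tri in triangles:
        d = [signed_dist(p) for p in tri]
        points = []
        for i in range(3):
            a, b = tri[i], tri[(i + 1) % 3]
            da, db = d[i], d[(i + 1) % 3]
            if (da < 0) != (db < 0):  # this edge crosses the plane
                t = da / (da - db)
                points.append(tuple(a[k] + t * (b[k] - a[k]) for k in range(3)))
        if len(points) == 2:
            segments.append((points[0], points[1]))
    return segments


# Two triangles forming a surface z = x over a 10 x 10 square, cut by the plane x = 5.
tin = [((0, 0, 0), (10, 0, 10), (10, 10, 10)), ((0, 0, 0), (10, 10, 10), (0, 10, 0))]
section = section_triangles(tin, origin=(5, 0, 0), normal=(1, 0, 0))
```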

Using software to visualize data is a powerful tool for reviewing 3D models. Outside of BIM/CADD, data visualization implies using colorful graphs and charts to clarify patterns within datasets. 3D information models developed for transportation projects are built using complex datasets, and applying data visualizations clarifies where various types of data exist within a model. Element information like classifications, materials, pay items, build order, associated specifications, review date, or review status can be tied to display styles, indicating through color or transparency any object with the queried properties. This allows reviewers to quickly identify the specific attributes they need to check, minimizing time-consuming tasks.
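Tying element information to display styles can be sketched as an ordered list of rules in which the first matching rule sets an element's color and transparency. This is a minimal sketch. The attribute names, rules, and colors are hypothetical, and review software would apply equivalent rules through its own display-style settings.

```python
# Hypothetical display-style rules, checked in order; the first match wins.
STYLE_RULES = [
    (lambda el: el.get("review_status") == "Rejected", {"color": "red", "transparency": 0.0}),
    (lambda el: el.get("review_status") == "Accepted", {"color": "green", "transparency": 0.6}),
    (lambda el: el.get("pay_item") is None, {"color": "orange", "transparency": 0.0}),
]
DEFAULT_STYLE = {"color": "gray", "transparency": 0.8}


def assign_styles(elements):
    """Map each element ID to the style of the first rule it satisfies."""
    styles = {}
    for el in elements:
        styles[el["id"]] = next((style for rule, style in STYLE_RULES if rule(el)), DEFAULT_STYLE)
    return styles
```

Placing review-status rules ahead of attribute-completeness rules means that rejected elements stand out even when other attributes are also missing.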

Another necessary functionality within review software is comment resolution. Reviewers can place or assign comments and issues on any component, view, section, or dimension in either 2D or 3D. Every response to the initial comment, including how it will be resolved and the status of that resolution (e.g., in progress, rejected, or completed), is documented by the review software. Comment resolution functionality supports the PDCA method. It provides greater efficiency and a superior audit trail compared to traditional paper methods because the software can maintain these comments within a model through updates and changes. Current software still does not fully support commenting on different portions of a model; thus, agencies should monitor how software evolves to address this gap.
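The audit trail that comment resolution provides can be sketched as a comment record that keeps every response and status change. This is a minimal sketch. The "Open" starting status is an assumption added to the in progress, rejected, and completed statuses named above. The field names are hypothetical and are not taken from any particular review application.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

STATUSES = {"Open", "In progress", "Rejected", "Completed"}


@dataclass
class Comment:
    comment_id: str
    target: str  # element ID, saved view, section, or dimension being commented on
    text: str
    author: str
    status: str = "Open"
    history: list = field(default_factory=list)

    def respond(self, author: str, response: str, new_status: str) -> None:
        """Record a response and status change, keeping the full history."""
        if new_status not in STATUSES:
            raise ValueError(f"unknown status: {new_status}")
        self.history.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "author": author,
            "response": response,
            "from": self.status,
            "to": new_status,
        })
        self.status = new_status
```

Because each change is appended rather than overwritten, the record shows who responded, what they said, and how the status moved, which is the information a PDCA review needs.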