Implementing Data Governance at Transportation Agencies: Volume 1: Implementation Guide (2024)

5. Practices

Overview

This chapter provides guidance on common data governance initiatives:

- Data Collection/Acquisition Oversight – Coordinating and planning data collection, purchase and licensing activities to avoid duplication and maximize the use and value of new data;

- Data Documentation – Identifying agency data assets and maintaining consistent information (metadata) about them to enable discovery and identify opportunities for rationalization;

- Business Data Glossaries – Establishing official agency definitions for business concepts represented by data to provide the basis for a shared agency language;

- Data Quality Management – Improving practices to ensure that data are fit for their intended purposes;

- Data Element Standards – Adopting authoritative definitions and standardizing formats and representations for data elements used in multiple datasets across the agency;

- Master and Reference Data Management – Establishing authoritative sources for shared agency data to avoid redundancy, increase consistency, and facilitate data integration;

- Data Sharing – Establishing rules and practice guidance for when and how data can be shared;

- Data Security and Privacy – Preventing unauthorized access to data and ensuring that private and confidential data are protected; and

- Data Governance Monitoring and Reporting – Tracking and communicating progress and outcomes from data governance activities.

Cautionary Tale #1: LiDAR Data Collection

One DOT contracted for collection of video and LiDAR data for the state highway system, with extracted information on asset types and locations.

Following contract execution and initiation of data collection, additional requirements emerged to obtain bridge underclearances and culvert locations. However, obtaining information for these assets would have required changes to equipment configurations, which was not possible. Earlier and broader collaboration on requirements gathering would have allowed these additional items to be included.

Data Collection/Acquisition Oversight

What is meant by data collection/acquisition oversight?

Establishing guidelines and processes to be followed prior to collecting data in the field or purchasing or licensing data from external vendors.

Why is data collection/acquisition oversight important?

The intent of data collection/acquisition oversight is to (1) maximize data collection/acquisition efficiency and orderly adoption of new technologies, (2) prevent acquisition of data that duplicates what already exists in the agency, (3) encourage use of established data standards that enable the new data to be linked as needed to existing data (e.g., through standard location referencing), (4) identify opportunities for multiple business units to collaborate on data acquisition to meet common needs, and (5) help business units anticipate and plan for activities needed to manage new data throughout its life cycle.

Approaches to data collection/acquisition oversight

There are several options, with varying degrees of formality:

- Scope a project to take a comprehensive look at agency data collection practices, recommend opportunities for combining efforts or transitioning to new data sources, and develop guidelines for bringing on new data of different types. This effort can take advantage of any detailed assessment and data documentation information that has been gathered for individual data programs.

- Have a standing agenda item in data governance group meetings to share upcoming plans for data collection or purchase. Use these meetings as an opportunity to discuss issues and opportunities for collaboration.

- Request each business unit to list their planned data collection efforts or purchases in a shared, accessible repository as part of the annual budgeting process. Review the list to identify potential duplication and facilitate a conversation amongst the affected business units to see if they might combine efforts.

- Develop guidelines for data collection/acquisition and conduct outreach and training to make sure that business units are aware of these guidelines. The guidelines would identify factors to consider in planning for new data collection to maximize efficiency and sustainability. They would also suggest actions to identify and avoid potential duplication, make use of adopted and supported technologies, provide consistency with current data standards, and develop a plan for managing the new data covering storage, metadata, and quality management.

- Establish requirements for formal review and approval of any new data purchases or collection activities.
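
The repository-based review described in the options above could be supported by a small script. The following is a minimal sketch, assuming a hypothetical list of planned efforts with `unit`, `data_type`, and `year` fields; the field names and sample entries are illustrative and are not drawn from any agency.

```python
from collections import defaultdict

# Hypothetical planned data collection entries submitted during budgeting.
planned_efforts = [
    {"unit": "Asset Management", "data_type": "LiDAR", "year": 2025},
    {"unit": "Bridge", "data_type": "LiDAR", "year": 2025},
    {"unit": "Planning", "data_type": "Traffic counts", "year": 2025},
]


def find_overlaps(efforts):
    """Group planned efforts by data type and year, and return only
    the groups in which more than one business unit appears."""
    groups = defaultdict(set)
    for effort in efforts:
        key = (effort["data_type"].strip().lower(), effort["year"])
        groups[key].add(effort["unit"])
    return {key: sorted(units) for key, units in groups.items() if len(units) > 1}


overlaps = find_overlaps(planned_efforts)
for (data_type, year), units in overlaps.items():
    print(f"{year} {data_type}: possible collaboration among {', '.join(units)}")
```

A match on data type alone is only a prompt for conversation; the affected business units would still need to compare their actual requirements.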

Cautionary Tale #2: Introducing New VMT Data

The Planning group within one DOT licensed a commercial data source of connected vehicle information and used this to calculate Vehicle Miles of Travel (VMT). They felt that this provided a more accurate estimate of VMT than what the agency’s traffic monitoring group produced based on traditional traffic counts.

However, the result was that two different groups within the DOT were providing two different estimates of VMT to MPOs and other partners.

A data governance process requiring the Planning group to share their data purchase plans with others in the agency would have provided an opportunity for discussion and agreement on how to integrate the new VMT data within the agency – with ground rules for sharing the different types of data and assurance that differences between the two sources would be clearly documented in metadata.

Tips for success

- Clearly describe why you are undertaking this effort, with examples of existing problems or inefficiencies that you are trying to eliminate.

- Find and communicate examples of collaborative data collection efforts that are already occurring in your agency (or peer agencies) to show what is possible.

- Try informal approaches that educate people about the issues and provide opportunities for discussion before you put new requirements in place.

- If you determine that formal approval processes are required, find ways to tap into existing budget or IT approval processes rather than setting up new processes.

See the Communications Guide, Chapter 5 for an example of how to identify target audiences for communication about a planned data acquisition review process

Example(s)

- Caltrans developed a data collection strategic plan to “leverage existing technologies for implementing an enterprise-wide approach to field asset and mobility data collection and procurement that minimizes duplication and maximizes data value and operational efficiency through a collect once, use many times approach.” [5]

- Florida DOT has included data projects (for collection and acquisition) within its comprehensive process for evaluating promising technology projects. This process engages the appropriate Enterprise Data Steward to identify any similar proposal or project and determine if the effort should have an enterprise perspective (i.e., to serve multiple business needs).

See Chapter 7 for sample data proposal initiation intake questions.

Data Documentation: Inventories, Catalogs and Metadata Management

What is data documentation?

There are several interrelated parts to data documentation:

- Data inventory — compiling a list of your data assets – which may include databases that are managed at the enterprise level as well as spreadsheets and other desktop databases managed by individual business units.

- Data catalog — creating a searchable catalog of your data assets with information that helps your IT staff, data analysts, and other users understand and access the agency’s data.

- Dataset and data element-level metadata (Data Dictionaries) — defining standard metadata elements that you intend to maintain about each of your data assets and their component elements, and putting templates, tools, and processes in place to create, update, and access this metadata.

Sample Data Dictionary Elements

Table Name

Field Name

Field Alias (for report titles)

Field Description

Field Type

Field Length

Field Precision

Required?

Unique?

Field Domain Type

Field Units of Measure

Confidential?

Sensitive?

Default Value

Acceptable Values (Min, Max, List)

Primary Key?

Source System

Usage Notes
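
The sample elements above can be captured in a simple record structure. The following is a minimal sketch, assuming Python attribute names that mirror the sample list; the class name, the defaults, and the example entry are hypothetical.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class DataDictionaryEntry:
    """One data element record; attributes mirror the sample elements."""
    table_name: str
    field_name: str
    field_description: str
    field_type: str
    field_alias: Optional[str] = None
    field_length: Optional[int] = None
    field_precision: Optional[int] = None
    required: bool = False
    unique: bool = False
    field_domain_type: Optional[str] = None
    units_of_measure: Optional[str] = None
    confidential: bool = False
    sensitive: bool = False
    default_value: Optional[str] = None
    acceptable_values: list = field(default_factory=list)
    primary_key: bool = False
    source_system: Optional[str] = None
    usage_notes: Optional[str] = None


# Hypothetical entry for illustration only.
entry = DataDictionaryEntry(
    table_name="ROAD_SEGMENT",
    field_name="SPEED_LIMIT",
    field_description="Posted speed limit for the segment.",
    field_type="Integer",
    units_of_measure="mph",
    acceptable_values=[5, 85],  # interpreted here as (min, max)
    source_system="Roadway Inventory",
)
record = asdict(entry)  # plain dict, convenient for export to a template
```

Only four attributes are mandatory in this sketch, in line with the advice to begin with a minimum set of required metadata.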

There are different varieties of metadata to consider, including:

- Descriptive metadata — to provide an understanding of what datasets are about (e.g., title, description, subject categories, geographic and temporal scope)

- Technical metadata — identifying the physical location and characteristics of the data (e.g., table and column names, data types, primary keys)

- Governance or administrative metadata — used to support governance or administrative workflows (e.g., contact information for owners and stewards, security classification)

- Other metadata — describing the quality, provenance or lineage, and usage levels of the data.

Why is data documentation important?

Data documentation is foundational for data governance because it provides an understanding of the scale and nature of the data to be governed. Data documentation can be used to:

- Identify private and sensitive data that requires special protection and handling,

- Identify and track stewards who will serve as the points of contact for each of your data assets,

- Identify duplicative and inconsistent data – and designate authoritative sources for key data elements,

- Keep track of primary users and uses of different data assets,

- Capture institutional memory or tribal knowledge about your data before the individuals who know the data leave the agency, and

- Record business rules that provide the basis for performing data validation and other data quality management activities.

Data documentation also benefits data engineers, analysts, and other data users by enabling discovery and understanding of the agency’s data resources.

See Chapter 7 for an example data flow diagram

Approaches to data documentation

At a minimum, you will want to create a basic data catalog and adopt metadata standards for your stewards to follow.

Steps in building a basic data catalog are:

- Identify what data assets are to be included in the catalog and their level of granularity.

- Decide what type of information to include in the catalog and specify each catalog element.

- Identify existing data or information system inventories that can be leveraged and used as a starting point for the catalog.

- Create a form and process for gathering catalog information. Take advantage of available software to automate this process, keeping in mind that human intervention will be needed to determine which assets should be included in the catalog and to add or validate information that cannot be extracted or inferred from available metadata or content.

- Engage an initial group of data owners/trustees and stewards in providing information for their catalog entries – supplementing any automatically harvested information.

- Validate the information collected to provide consistency and completeness.

- Refine the specification and collection processes based on lessons learned through initial collection activities.

Steps in standardizing metadata are:

- Assess documentation. Review your agency’s current data documentation approaches and resources to identify what you already are doing and the quality of existing data and metadata. At a minimum, this effort should identify and review existing metadata standards, data models (for technical metadata), GIS metadata collections, and open dataset metadata. If you are including unstructured data within the scope of data governance, this assessment should also include metadata built into your document or content management systems.

- Create a dataset metadata standard and template. Identify and specify common metadata elements to be collected at the data asset levels. Be sure to harmonize or crosswalk with existing metadata standards such as ISO 19115 (Geographic Information Metadata), ISO 11179 (Metadata Registries), and the W3C’s Data Catalog Vocabulary (DCAT).

- Create a data dictionary standard and template. Identify and specify common metadata elements to be collected for each data element. Harmonize or crosswalk with the data element metadata portion of ISO 19115.

- Train your data stewards. Cover the new standards and provide support for metadata/data dictionary creation for an initial set of data assets.

- Establish rules for when metadata is to be provided. For example, make provision of metadata a condition for putting a system into production or adding a data source to a data repository.
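
A rule that makes metadata a condition for production release can be expressed as a simple completeness check. The following is a minimal sketch, assuming a hypothetical set of four required elements; your agency would substitute the elements in its own dataset metadata standard.

```python
# Hypothetical minimum metadata elements; an agency would define its own.
REQUIRED_METADATA = ("title", "description", "steward", "security_classification")


def missing_metadata(metadata):
    """Return the required elements that are absent or blank."""
    return [
        name for name in REQUIRED_METADATA
        if not str(metadata.get(name) or "").strip()
    ]


def ready_for_production(metadata):
    """A dataset passes the gate only when no required element is missing."""
    return not missing_metadata(metadata)


draft = {"title": "Pavement Condition", "description": "", "steward": "J. Doe"}
print(missing_metadata(draft))
```

A check like this confirms only that elements are present; review by governance staff is still needed to judge whether the descriptions are meaningful.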

Once you have the basics in place, you will want to explore bringing on a technology solution for data cataloguing and metadata management. There are several available products, some of which are associated with specific cloud solutions and BI platforms. Because technology implementation can be a lengthy process, it is best to get started with an interim solution while you are pursuing a longer-term implementation project. Be sure to carefully define your business requirements and evaluate alternative solutions prior to moving forward with a particular product.

Tips for success

- Clearly identify your goals, target users, and use cases for a data catalog. A catalog geared toward report developers will have different requirements than one geared towards casual data seekers.

- Begin with a minimum set of required metadata and catalog elements – others can be made optional.

- Leverage the resources you have – avoid creating duplicative and potentially inconsistent metadata formats and repositories.

- Start simple and expand over time. Do not underestimate the amount of time and effort it will take to build your catalog and create metadata.

- Allocate data governance staff resources (or consulting support resources) to facilitate data collection and metadata development and perform quality assurance on the results. Anticipate that getting time from busy data owners/trustees and stewards may be challenging.

- Plan for regular updates – data catalog information will become outdated as new data resources are created and data stewards change jobs.

- Do review available off-the-shelf data catalog and metadata repository solutions and take advantage of capabilities to automate harvesting of metadata information. However, do not jump to implementing a technology solution too early – make sure you clarify your purpose and requirements and test your catalog and metadata creation approach before making a purchase.

- You may not be able to fully centralize metadata management across different repositories (e.g., GIS, BI platforms, open data portals, data lake platforms) so be sure to develop consistent data categorization/subject tags and a metadata integration strategy. Build crosswalks across different existing metadata sets as an interim measure.

- Coordinate with IT on sourcing and integrating technical metadata from data models.

Example(s)

- Minnesota DOT launched their Business Data Catalog (BDC) effort in 2012. The BDC includes descriptive information about the agency’s data and information assets including curated records and applications, which are searchable by subject area. MnDOT developed the BDC in-house.

- Connecticut DOT created metadata and data dictionary standards/templates that adhere to the ISO 19115 standard. A custom-built application manages metadata for data resources curated for inclusion in the agency’s Transportation Enterprise Database. The DOT intends to expand its metadata for all data that is agency-wide in scope or made available to the public.

Example of Leveraging a Business Glossary for Data Dictionary Definitions

Business Glossary Entry:

- Managed Lane - highway facilities or a set of lanes where operational strategies are proactively implemented and managed in response to changing conditions. (Source: FHWA)

Associated Data Dictionary Entry:

- HOV_Lanes (HPMS Item 9) - Maximum number of lanes in both directions designated for managed lane operations.

Business Data Glossaries

What is a business data glossary?

A business data glossary is a managed and governed compendium of business terms and definitions for important concepts related to governed agency data.

Why are business data glossaries important?

Business data glossaries establish authoritative, shared definitions for an organization’s specialized terminology. In the context of data governance, business data glossaries help an organization’s employees to understand the precise meaning of data entities and elements associated with the concepts in the glossary. Business data glossary definitions can provide a foundation for the data element descriptions within data dictionaries by eliminating the need to repetitively (and possibly inconsistently) include concept definitions for each data element related to a concept.

Style Guidelines for Glossary Definitions (ISO/IEC 11179-4)

Write term names in the singular form

State descriptions as a phrase or with a few sentences

Express descriptions without embedded concepts and without definitions of other terms

State what the concept of a term is rather than just what it is not

Use only commonly understood abbreviations in descriptions

State the essential meaning of the concept

Be precise, unambiguous, and concise

Be able to stand alone

Be expressed without embedded rationale, functional usage, or procedural information

Avoid definitions that use other term definitions

Use the same terminology and consistent logical structure for related definitions

Approaches to creating a business data glossary

Steps for building a business data glossary are:

- Decide what terms to include/exclude. Establish curation rules for what terms you will be including in your glossary. Some DOTs have focused their business glossary efforts only on terminology that is related to data entities and attributes subject to governance. Other curation rules might be developed to include or exclude names of IT systems, names of specific forms or reports, names of external organizations (e.g., FHWA), and names of transportation-related legislation or funding programs.

- Establish a scope. You may want to pursue an incremental approach to building a business data glossary, focusing on one domain or business area at a time. Alternatively, you may want to support a planned major system implementation (such as an asset management, financial or enterprise resource planning (ERP) system).

- Prioritize. Create a methodology to prioritize glossary terms. For example, terms that may be defined in state or federal law may have higher priority than a term that was previously defined in a handbook or manual created by agency staff.

- Specify the glossary elements. Identify what information you want to maintain for each glossary term. In addition to the name of the term and the definition, you may want to also include acronyms, information to help people search for terms (such as subject categories), references to source documents, assigned owners or stewards for the terms, synonyms, and term status and edit histories. Create precise specifications for each element you want to collect, including style guidelines for glossary definitions.

- Identify existing sources. Most DOTs maintain multiple glossaries as either stand-alone documents, appendices to manuals, policies, or plans, or on web pages. Compile a list of existing glossaries, determine which are actively maintained and identify the business units and individuals that are responsible for updates.

- Create an initial glossary from existing sources. Using the source documents that you have gathered, compile an initial glossary. Use this exercise to assign terms to owners/stewards and establish a governance process for term review and approval – including resolution of multiple definitions for the same term.

- Identify and fill gaps. Review data sources and associated reports within your defined scope and make a list of additional concepts to be included in the glossary. Assign responsibility for providing glossary entries to relevant business units.

- Formalize a glossary update process. Establish a process for updating, adding, and deprecating glossary terms. Assign responsibility for managing this process to a member of the data governance team or other appropriate staff member.
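
Compiling an initial glossary from existing sources and flagging terms with more than one definition can be partly automated. The following is a minimal sketch, assuming terms have already been extracted into (term, definition, source) tuples; the sample definitions and source names are hypothetical.

```python
from collections import defaultdict

# Hypothetical terms harvested from two existing source glossaries.
harvested = [
    ("Managed Lane", "Lanes where operational strategies are proactively managed.", "Design Manual"),
    ("managed lane", "A lane restricted to high-occupancy vehicles.", "Planning Handbook"),
    ("Milepoint", "Distance along a route from its origin.", "Design Manual"),
]


def compile_glossary(entries):
    """Merge entries by case-insensitive term name, keeping each distinct
    definition together with the source it came from."""
    glossary = defaultdict(list)
    for term, definition, source in entries:
        key = term.strip().lower()
        if definition not in [d for d, _ in glossary[key]]:
            glossary[key].append((definition, source))
    return dict(glossary)


def conflicting_terms(glossary):
    """Terms with more than one distinct definition need owner review."""
    return sorted(term for term, defs in glossary.items() if len(defs) > 1)


glossary = compile_glossary(harvested)
print(conflicting_terms(glossary))
```

Flagged terms are candidates for review rather than errors, since some terms will legitimately carry different definitions in different business areas.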

Maintaining a Business Glossary: Roles

Glossary Manager:

- Identify term owners

- Initiate and manage regular updates

- Review entries for clarity and consistency

- Elevate issues for resolution to governance group

Term Owners:

- Author and update term definitions

- Help to resolve conflicting definitions

Governance Group:

- Resolve issues escalated by the Glossary Manager

- Monitor and adjust the glossary governance process

Tips for success

- Set your scope carefully – a complete agency glossary is an ambitious undertaking.

- Limit the number of data elements that you maintain for each glossary term to those that are necessary to understand and manage the glossary.

- Recognize that some terms will legitimately have multiple definitions for different business areas and design your glossary to accommodate this.

- Keep in mind that even though glossary terms may be associated with acronyms (e.g., Vehicle Miles of Travel and VMT), an acronym list is different from a glossary because it does not contain definitions. Avoid mixing the two.

- Work with owners/maintainers of existing glossaries to transition them to maintaining their terms in the agency glossary but recognize that there may be business needs to maintain separate glossaries (e.g., within policy documents).

- Educate data stewards about leveraging glossary definitions as they prepare their data dictionaries.

- Educate agency managers about the importance of using the agency glossary and encourage them to promote its use (and discourage independent glossary efforts that provide conflicting definitions).

- Integrate your glossary with your metadata management tools to enable links between glossary terms and data dictionary definitions.

- Monitor how your glossary governance process is working, gather feedback from participants and adjust as needed to eliminate bottlenecks and facilitate updates.

Example(s)

- Minnesota DOT includes glossary terms within their Business Data Catalog.

- Florida DOT created an Enterprise Business Glossary that defines key terms and definitions for agency-wide use. They put a process in place for the review and adoption of agency terms and definitions.

- Caltrans created a glossary including definitions of data governance terminology as well as business terminology related to the agency’s data resources. They manage the glossary using a SharePoint list and provide searchable access to the glossary via a PowerBI Dashboard.

Data Quality Management

What is data quality management?

Data quality management consists of a coordinated set of activities to:

- Define data quality and set objectives and standards,

- Assess existing data quality,

- Analyze root causes of data quality problems, and

- Identify, prioritize, carry out, and track both preventative and corrective actions to improve data quality.

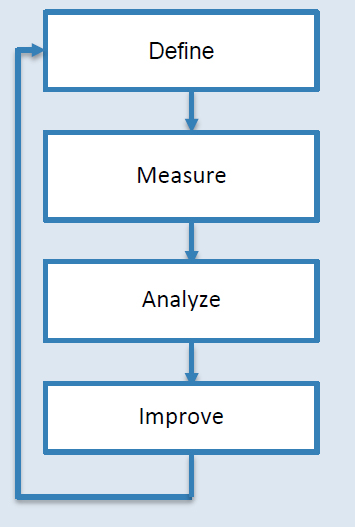

The Data Quality Management Cycle

Why is data quality management important?

Data quality management helps to make sure that investments in data pay off – i.e., that data serve their intended purposes and provide business value. Data quality management helps agencies to avoid the negative consequences of poor data quality, which include:

- Need for staff to spend extra time cleaning rather than analyzing data,

- Inaccurate reports to external control agencies,

- Damage to agency credibility,

- Missed opportunities to provide insights from data, and

- Suboptimal decisions based on faulty information.

Data Quality Dimensions to Consider

Timeliness – amount of time between when an event occurs and when information about the event is recorded

Accuracy – match to ground truth

Completeness – presence of expected records and attributes

Uniformity, Validity or Conformity – consistency across files or database records; adherence to business rules and metadata or to an established standard

Repeatability or Precision – match between repeated measurements

Uniqueness – absence of duplicate records

Accessibility – ability of authorized users to obtain the data
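
Several of these dimensions can be measured directly from the data. The following is a minimal sketch, assuming a hypothetical list of crash records with `occurred` and `recorded` timestamps; the metric definitions are one reasonable interpretation of completeness, uniqueness, and timeliness, not a prescribed standard.

```python
from datetime import datetime

# Hypothetical crash records; None marks a missing attribute.
records = [
    {"id": 1, "route": "I-90", "occurred": datetime(2024, 1, 1), "recorded": datetime(2024, 1, 3)},
    {"id": 2, "route": None, "occurred": datetime(2024, 1, 2), "recorded": datetime(2024, 1, 8)},
    {"id": 2, "route": "US-2", "occurred": datetime(2024, 1, 5), "recorded": datetime(2024, 1, 6)},
    {"id": 3, "route": "SR-20", "occurred": datetime(2024, 1, 7), "recorded": datetime(2024, 1, 10)},
]


def completeness(rows, attribute):
    """Share of records in which the attribute is populated."""
    return sum(r[attribute] is not None for r in rows) / len(rows)


def uniqueness(rows, key):
    """Ratio of distinct key values to total records (1.0 means no duplicates)."""
    return len({r[key] for r in rows}) / len(rows)


def mean_lag_days(rows):
    """Average whole days between an event and its recording (timeliness)."""
    return sum((r["recorded"] - r["occurred"]).days for r in rows) / len(rows)


print(completeness(records, "route"), uniqueness(records, "id"), mean_lag_days(records))
```

Accuracy and repeatability are not shown because they require comparison against ground truth or repeated measurements, which cannot be derived from the dataset alone.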

Approaches to improving data quality management

Agencies can pursue one or more of the following approaches to improving data quality management practices within their organizations:

- Create guidelines or policies defining data quality management practices to be followed.

- Create a template for a data quality management plan that can be completed for different data assets.

- Implement tools to support data quality management including profiling, parsing, matching, validation, cleansing and enhancement.

- Implement tools to facilitate data user understanding of data quality such as dashboards or reports showing trends in data quality metrics.

- Integrate capabilities within systems to enable data users to easily report errors.

- Conduct or offer training for data stewards on data quality principles and practices.

- Integrate data quality management activities into system development and BI projects.

Tips for success

- Address data quality as close as possible to the point of collection.

- Fix data problems in source systems, not in reporting systems.

- Recognize that good metadata and business rules are a prerequisite to managing data quality. Define allowable value domains as part of data dictionaries and engage business data stewards to define business rules that can be used to validate data.

- Build validation rules into data entry systems.

- Use dropdown menus and pre-populated fields as much as possible to implement data quality rules.

- Provide a way for data users to report errors that they encounter.
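
The tips on value domains and validation rules can be illustrated with a small rule table. The following is a minimal sketch, assuming hypothetical field names and allowable values; in practice the rules would come from your data dictionaries and business data stewards.

```python
# Hypothetical business rules keyed by field name; each returns True when valid.
RULES = {
    "functional_class": lambda v: v in {"Interstate", "Arterial", "Collector", "Local"},
    "lanes": lambda v: isinstance(v, int) and 1 <= v <= 12,
    "milepoint": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def validate(record):
    """Return the names of fields that are missing or violate a rule."""
    errors = []
    for field_name, rule in RULES.items():
        if field_name not in record or not rule(record[field_name]):
            errors.append(field_name)
    return errors


good = {"functional_class": "Arterial", "lanes": 4, "milepoint": 12.5}
bad = {"functional_class": "Freeway", "lanes": 0}
print(validate(good))
print(validate(bad))
```

Running the same rules at the point of data entry and again in periodic checks keeps the definition of valid data in one place.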

Example(s)

Caltrans adopted a standard data quality management plan template and has trained data stewards in use of a spatial data quality tool.

USDOT’s 2019 Information Dissemination Guidelines present practices followed by operating administrations to ensure data utility, objectivity, integrity, accessibility, public access, and re-use.

NHTSA has defined a “six-pack” of data quality measures to be applied to six core traffic data systems (Crash, Vehicle, Driver, Roadway, Citation/Adjudication, and EMS/Injury Surveillance): timeliness, accuracy, completeness, uniformity, integration, and accessibility.

Example DOT Data Elements to Standardize

DOT Organizational Unit Name

Route ID

Milepoint

Latitude and Longitude

Project ID

Asset ID

Asset Type

Vehicle Identification Number (VIN)

State Fiscal Year

Employee ID

MPO

Agency ID

Funding Source

City Name

County Name

Event DateTime

Data Element Standards

What are data element standards?

Data element standards define the meaning, naming conventions, type, format, value domains and (where applicable), units of measure and levels of resolution for data elements that appear in multiple datasets.
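
A data element standard can be recorded in a form that supports automated conformance checks. The following is a minimal sketch, assuming a hypothetical `ElementStandard` record whose format is expressed as a regular expression; the four-digit fiscal year format and its definition are illustrative and not an adopted standard.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ElementStandard:
    """Hypothetical record of a data element standard."""
    name: str
    definition: str
    data_type: str
    pattern: str  # regular expression for the standard format
    units: str = ""

    def conforms(self, value):
        """True when the value fully matches the standard format."""
        return re.fullmatch(self.pattern, str(value)) is not None


# Illustrative standard: state fiscal year written as four digits.
fiscal_year = ElementStandard(
    name="State Fiscal Year",
    definition="Fiscal year in which the activity is budgeted.",
    data_type="string",
    pattern=r"\d{4}",
)

values = ["2024", "FY24", "2023-24"]
nonconforming = [v for v in values if not fiscal_year.conforms(v)]
print(nonconforming)
```

Listing nonconforming values in existing datasets is one way to identify current variations before a standard is rolled out.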

Why are data element standards important?

Data element standards provide the basis for achieving consistency across different datasets, which enables data to be integrated or combined for analysis and reporting.

Approaches to establishing data element standards

Steps for establishing data element standards are:

- Create a data element standard template (see Chapter 7 for an example)

- Create a list of data elements that are good candidates for standardization because they appear across multiple datasets and a lack of standardization is slowing down or preventing data integration activities.

- Select a priority data element to work on based on the benefit to standardization and the presence of one or more individuals who are interested in helping to create and evangelize for the new standard.

- Assemble a small team to develop the standard and identify others who would be impacted by the new standard (i.e., they might be required to modify the formats or coding of the data they manage.) Appoint a team leader to keep the effort on track.

- Circulate the draft standard for review and revise as needed in response to feedback.

- Escalate issues that arise during this process to the governance lead and/or groups for resolution, as needed.

- Identify the approach you will take for modifying existing datasets to meet the standard. You may decide to implement the standard within reporting datasets only and wait for system upgrades or replacements to do this for source systems.

- Roll out the final standard – post in an accessible location and conduct outreach to make affected stakeholders aware of the standard and how it will be implemented.

Tips for success

- Begin with high-impact standards where there is already strong sponsorship and buy-in (e.g., common location referencing).

- Limit the scope for each standardization effort to improve chances of success.

- Identify current variations and understand reasons for them.

- Recognize that migration to the standard may take time and require changes to systems and business processes.

See Chapter 7 for a sample Data Element Standard.

Example(s)

Iowa DOT created a set of foundational data element standards focusing on dates, times, locations, measures, currency and identifiers for projects, vehicles, equipment, employees, and positions.

Caltrans established a data element standard template and created initial standards for data related to locations and asset management.

Master and Reference Data Management

What are master and reference data management?

Master data management establishes authoritative data sources that provide a consistent and uniform set of identifiers and extended attributes describing the core entities of an organization. Once created, master data sets are catalogued, documented, and made available for use.

Examples of DOT Master and Reference Data Entities

Reference Data Entities:

- Organizational Units

- Functional Classes

- Asset Types

- Incident Status Codes

Master Data Entities:

- Employees

- Projects

- Programs

- Contracts

- Work Orders

- Permits

- Expenditures

Reference data management entails maintenance of stable, re-usable datasets, such as code tables, for use by multiple systems across the agency. It focuses on harmonizing and sharing data that is used to classify or provide context for other data. Many reference data elements are external – for example, zip codes or state 2-letter abbreviations. For a DOT, reference data would include things like highway functional classifications, asset types, vehicle makes and models, and incident statuses.

There are different architectural options for master and reference data management – including a Registry approach that uses an index to point to master and reference data in source systems, a Hub approach that consolidates master and reference data in a central repository, and a Hybrid approach which leaves the master and reference data in their original source systems but synchronizes the data with a Hub repository for general access.
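
The difference between the Registry and Hub approaches can be shown with a small model. The following is a minimal sketch, assuming two hypothetical source systems held as in-memory dictionaries; the class names, project IDs, and budget values are illustrative only.

```python
# Hypothetical source systems holding project master data.
SOURCE_SYSTEMS = {
    "finance": {"P-100": {"name": "Bridge Rehab", "budget": 2_500_000}},
    "scheduling": {"P-200": {"name": "Signal Upgrade", "budget": 400_000}},
}


class Registry:
    """Registry approach: keep only an index that points to the source system."""

    def __init__(self, sources):
        self.sources = sources
        self.index = {key: system for system, rows in sources.items() for key in rows}

    def get(self, key):
        # Each lookup is resolved against the owning source system.
        return self.sources[self.index[key]][key]


class Hub:
    """Hub approach: copy the records into one central repository."""

    def __init__(self, sources):
        self.records = {key: dict(row) for rows in sources.values() for key, row in rows.items()}

    def get(self, key):
        return self.records[key]


registry, hub = Registry(SOURCE_SYSTEMS), Hub(SOURCE_SYSTEMS)
SOURCE_SYSTEMS["finance"]["P-100"]["budget"] = 3_000_000  # update at the source

print(registry.get("P-100")["budget"])  # reflects the source update
print(hub.get("P-100")["budget"])       # unchanged until the hub is refreshed
```

In this sketch the registry always reflects the source, while the hub copy stays unchanged until it is refreshed; a Hybrid approach would add a synchronization step between the two.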

Why are master and reference data management important?

Master and reference data management is an essential practice that helps agencies to reduce complexity and improve connectivity and consistency of their information systems. It provides the tools and processes needed to establish a single source of truth, both to guide internal DOT decision-making and to provide information that meets external requirements and inquiries.

Approaches to master and reference data management

The first step is to identify and prioritize specific master and reference data entities and elements to be managed. While specific activities will vary depending on the scope and nature of what is selected, the following activities will generally be needed to establish master and reference data sources:

- Create a master data management strategy and roadmap that identifies and prioritizes master and reference data and establishes the architectural model(s) that you will be using to provide access to the mastered data. This effort should also identify the skills and resources needed to build and maintain the agency’s master and reference data.

- For each target master or reference data entity:

- Identify the existing data sources for the selected entity/element and document data element formats, definitions, and value domains; current values; stewardship responsibilities; and current update processes.

- Identify business needs for using the master or reference data to reduce duplication of effort and improve data consistency across systems.

- Specify target data structures for the master or reference datasets, and review with stakeholders to get agreement.

- Identify and obtain concurrence on authoritative source systems for different master and reference data elements.

- Determine ways to make master and reference data available for reporting or access to meet identified business needs – both to other systems and individual data users – with consideration of standard data warehousing, access, and analysis tools.

- Determine where the master and reference data will be managed and how they will be accessed, synchronized, and shared with other systems. (There may be a short-term strategy and a longer-term strategy for these given constraints associated with legacy systems and level of readiness for making business process changes.)

- Define, build, test, and implement data transformation, normalization, de-duplication, and augmentation processes needed to create the master and reference data sets.

- Document and communicate the location, update process, data stewardship responsibilities, and access methods to maximize use of the master/reference data.
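The normalization and de-duplication step in the list above can be sketched as follows. This is a minimal illustration, assuming that records from different systems can be matched on a normalized name and that the first non-empty value for each attribute is kept. The record layout, matching rule, and sample values are all hypothetical, and production matching rules are typically more involved.

```python
def normalize_name(name):
    """Uppercase, trim, and collapse internal whitespace."""
    return " ".join(name.upper().split())


def build_master(records):
    """Merge records sharing a normalized name; first non-empty value wins."""
    master = {}
    for record in records:
        key = normalize_name(record["name"])
        merged = master.setdefault(key, {"name": key, "sources": []})
        merged["sources"].append(record["source"])
        for field, value in record.items():
            if field in ("name", "source"):
                continue
            # Augment the master record only where it has no value yet.
            if value and not merged.get(field):
                merged[field] = value
    return list(master.values())


# Hypothetical vendor records drawn from two source systems.
vendors = [
    {"source": "finance", "name": "Acme Paving  Inc", "vendor_id": "V-22", "phone": ""},
    {"source": "construction", "name": "acme paving inc", "vendor_id": "", "phone": "555-0100"},
    {"source": "finance", "name": "Baker Bridge Co", "vendor_id": "V-31", "phone": ""},
]

master_records = build_master(vendors)
```

Keeping the list of contributing source systems on each master record supports the documentation and stewardship steps, since it shows where each mastered entity originated.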

Tips for success

- Establish a strong partnership between data governance and IT – data governance can adopt standards and facilitate adoption; IT oversees the architecture and implementation.

- Develop a master plan to identify high priority targets and logical grouping and sequencing of efforts to create master and reference data.

- Start with a simple, non-controversial case to work out your process and provide an example.

- Recognize that this is not simply a technical exercise – changes to business processes will likely be needed.

- Recruit the right set of people for each effort – those who understand the target data and how it is gathered, moved, and used.

- Recognize that there will be a need for a short-term strategy when source system changes are not feasible.

Example(s)

Virginia DOT created a Master Data Management roadmap that identifies data to be mastered over a three-year period. They have allocated resources for data modelling, design, and delivery of the master data.

Caltrans has adopted a standard approach to master and reference data management and has piloted this approach for creating a reference data set with information about California agencies that receive transportation funding.

Data Sharing

What is meant by data sharing?

Data sharing is the process of making data available for use beyond the system or location where it is managed. This maximizes data re-use and avoids duplicative effort. Data can be shared in multiple ways (see sidebar). Data sharing can take place within an agency, across agencies, between an agency and a citizen who requests data, between an agency and a set of registered users, or between an agency and the public at large.

From a data governance perspective, data sharing is an activity that benefits from oversight in the form of policies, guidelines, and agreements. Policies and guidelines for data sharing cover what data can (and should) be shared, with whom, and how. Data sharing agreements are used to define the terms under which data sets are shared (internally or externally).

Ways to Share Data

Via a specialized application (e.g. traffic monitoring software)

Open Data Portal

Agency GIS Portal

Data Warehouse/Mart

Data Lake

File server

FTP site

Cloud data sharing site

Application Programming Interface (API)

Web Service

Why is data sharing important?

Data sharing policies and guidelines have several benefits:

- They reduce risks of unauthorized disclosure of private and sensitive information.

- They provide clarity on what data should be shared, which leads to greater consistency in decisions about sharing data on the part of data owners/trustees.

- They can be used to require that data have sufficient documentation (metadata) and quality checks prior to being shared.

- They encourage people to share data using available repositories that have management protocols in place for security, access, metadata, and adherence to retention schedules.

- They discourage practices that lead to proliferation of multiple copies of datasets.

Data sharing agreements can be used to establish expectations for both the data provider and recipient, including:

- What data will be shared – content and format

- Permissible uses for the data (for example, commercial applications)

- Responsibilities of the recipient to implement appropriate data protection measures

- Responsibilities of the provider, including frequency and timing of updates and correction of reported data errors

- Frequency and timing of updates

- Who can access the data

- Who can modify the data

- How long the data can be used (sunset clause)

- Disclaimers about the quality of the data

These agreements can be inter-agency or intra-agency. In some cases, they may alleviate concerns about sharing data by stipulating appropriate use. See Chapter 7 for a sample Data Sharing Agreement outline.
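Where an agency tracks its agreements in a system rather than only in documents, the terms listed above could be captured in a structured record. The sketch below is one possible shape, not a prescribed format: the class, field names, and sample values are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DataSharingAgreement:
    """Hypothetical record of agreement terms; field names are assumptions."""
    dataset: str
    data_format: str
    permitted_uses: list
    update_frequency: str
    readers: set
    editors: set
    sunset_date: date
    quality_disclaimer: str = ""

    def is_active(self, on_date):
        """The agreement applies up to and including its sunset date."""
        return on_date <= self.sunset_date

    def can_read(self, party, on_date):
        return self.is_active(on_date) and party in self.readers

    def can_modify(self, party, on_date):
        return self.is_active(on_date) and party in self.editors


# Illustrative agreement with made-up parties and dates.
agreement = DataSharingAgreement(
    dataset="construction_locations_and_schedules",
    data_format="CSV",
    permitted_uses=["project coordination"],
    update_frequency="weekly",
    readers={"city_partner", "agency_planning"},
    editors={"agency_planning"},
    sunset_date=date(2026, 12, 31),
    quality_disclaimer="Schedules are subject to change.",
)
```

A record like this makes it straightforward to check whether a party may still read or modify the data on a given date, for example `agreement.can_read("city_partner", date(2026, 6, 1))`.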

Approaches to data sharing oversight

DOTs can pursue several avenues for managing how data are shared – both to encourage sharing of available data and to make sure that data are shared in a responsible manner:

- Create a data sharing policy (or section within a broader data governance policy) that communicates what data are to be shared (both internally and externally) and how. This policy can establish the foundation for more specific guidance documents. The policy should cover sharing agency data internally, with external public sector partners, with private-commercial entities and with the public. It should also cover how the agency shares data obtained from third parties.

- Create/adapt data sharing agreement (DSA) templates for both internal data sharing and external data sharing. Provide training on how to use them and examples of completed agreements.

- Provide a data sharing checklist with steps on how to determine whether data can be shared and prepare data for sharing. This can cover topics including private and sensitive data, licensing restrictions, metadata, and data quality checks.

- Provide data sharing guidelines that outline where and how different types of data can be shared for internal and external use. These guidelines can be used to encourage use of available repositories and prevent undesirable practices (such as emailing spreadsheets to large distribution lists).

- Formalize a review process for external data sharing agreements that the agency is asked to sign.

Tips for success

- Set clear agency expectations on data sharing.

- When drafting data sharing agreements, avoid being overly specific so that details can change without re-executing the agreement.

- Create standard language for consultant contracts that covers consultant use of agency-provided data as well as consultant responsibilities for providing data they collect or produce back to the agency.

- Make sure that senior managers understand the agency policy and apply it in a consistent manner.

- Be prepared to encounter situations where employees are not willing to share data – due to concerns about known limitations, potential misinterpretation, or lack of incentive to spend the time needed. The data governance team will need to pick their battles and decide how much resistance to take on.

- Use situations where a data owner/trustee has concerns about sharing the data under their responsibility as an opportunity to educate managers about the agency policy/guidelines and to improve available guidelines so that they address common concerns.

- Build awareness of available data repositories and how they can be used.

- Understand practical constraints and challenges to data sharing and work to implement solutions.

- Keep track of who has requested and received data.

Example(s)

Washington State DOT (WSDOT) executed a data sharing agreement with the City of Seattle to exchange construction location and schedule information for projects within the city limits.

Florida DOT developed a Data Management Planning (DMP) resource document that lists key considerations for each phase of the data lifecycle, including data sharing.

Examples of Private and Sensitive Data for DOTs

Crash records – person and vehicle details

Driver license and vehicle registration data

License Plate reader data

Vehicle transponder data

Connected vehicle data

Employee cell phone GPS data

Employee personnel records

Professional Engineer (PE) license numbers (can be used to look up home addresses)

Disadvantaged Business Enterprise (DBE) certification applications

Locations of archeological sites

Engineer’s Estimates for Construction Projects (prior to bid opening)

Facility security camera locations

Vendor-supplied proprietary data

Computer network security information

IP addresses of Operational Technology (e.g., ITS Devices or Traffic Signals)

Detailed inspection or design data associated with Critical Infrastructure

Classifying and Protecting Private and Sensitive Data

What are private and sensitive data?

Private and sensitive data include personal data (data about specific people that compromises their privacy) as well as other data that, if disclosed, could jeopardize the privacy or security of agency employees, clients, or partners, or cause major damage to the agency.

Most states have information security policies in place that define data or information classification levels and establish guidelines for classifying data. Data classification levels are used to indicate the level of risk associated with disclosure – and to tailor access restrictions based on these risks. State statutes may specify responsibilities for classifying data and for protecting data through access controls. For example, data classification may be a business-informed legal determination and cybersecurity may be a state or agency-level IT responsibility.

Keep in mind that policies and terminology vary from state to state – for example, some states have a legal definition for “sensitive” data whereas others do not.

Protecting private and sensitive data involves identifying and classifying the data and ensuring that appropriate practices are used to protect and control access to the data. Managing private data also includes activities to ensure that: (1) the data are needed for a legitimate purpose and are deleted after that purpose has been achieved, and (2) the agency is being transparent about what data are collected, how the data are used, and how they are protected. Agency practices for data assessment should consider not only data that are produced or collected by the agency but also third-party data sets.

Why is protecting private and sensitive data important?

Putting strong protections in place ensures compliance with applicable laws and standards – which vary by state. It helps agencies to avoid or minimize the negative consequences of disclosing private and sensitive data which can include:

- Targeted attacks to critical infrastructure

- Compromised building security resulting in loss or damage to facilities and equipment

- Cybersecurity attacks

- Harassment or harm to employees

- Identity theft impacting employees, partners, or customers

- Lawsuits

- Administrative costs to manage and remediate incidents involving unauthorized disclosure of data

- Reputational damage to the agency

Fair Information Practice Principles (Federal Privacy Council)

Collection Limitation: Data collection should be lawful and gathered with consent.

Data Quality: Personal data should be relevant and accurate.

Purpose Specification: Specify the purposes for which you use personal data.

Use Limitation: Do not disclose personal data.

Security Safeguards: Always implement security safeguards.

Openness: Businesses and entities should keep their practices as open as possible.

Individual Participation: Individuals should have the right to find out what personal data has been used and to regain control of it.

Accountability: The person in control of the data is responsible.

How to implement data security and privacy

Protecting private and sensitive data requires a partnership between the data governance team and the IT team. The following actions are within the scope of data governance:

- Adopt the Federal Privacy Council’s Fair Information Practice Principles (see sidebar). Note the emphasis on openness and transparency so that individuals are kept informed about how their personal data are used and protected.

- Clearly define responsibilities for data classification and protection that are based on state policy and guidance.

- Define standard metadata elements for identifying datasets that include private or sensitive data.

- Define standard data dictionary elements for identifying data elements that store private or sensitive data.

- Provide guidance and training on how to identify private and sensitive data. Include examples of specific types of data elements that are considered private or sensitive.

- Work with IT to implement automated processes to identify private and sensitive data elements within existing systems (based on field names and contents).

- Establish clear policies and procedures for how to store, protect, and provide access to datasets containing private or sensitive elements. These should include both agency-created and third-party datasets. Conduct training to help data managers and users to understand these policies and procedures.

- Conduct audits for major systems and data programs to review data elements and management practices, assess risks, and suggest remediation activities.

- Consider including a representative of your legal office on your data governance council or board to ensure that you are staying consistent with current legislation.
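The automated identification step mentioned in the list above (based on field names and contents) could start from something as simple as the sketch below. The name hints, content patterns, and sample rows are hypothetical. A scan of this kind only produces candidates for human review and does not replace the classification responsibilities defined by state policy.

```python
import re

# Hypothetical name hints and content patterns; an agency would maintain its own.
NAME_HINTS = ("ssn", "license", "dob", "plate", "phone")
CONTENT_PATTERNS = {
    "ssn_like": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "email_like": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
}


def flag_sensitive_fields(rows):
    """Map each flagged field name to the sorted reasons it was flagged."""
    flags = {}
    for row in rows:
        for name, value in row.items():
            reasons = flags.setdefault(name, set())
            # Check the field name against known hints.
            if any(hint in name.lower() for hint in NAME_HINTS):
                reasons.add("name")
            # Check the field contents against known patterns.
            for label, pattern in CONTENT_PATTERNS.items():
                if isinstance(value, str) and pattern.match(value):
                    reasons.add(label)
    return {name: sorted(reasons) for name, reasons in flags.items() if reasons}


# Made-up sample rows; the values are placeholders, not real personal data.
sample_rows = [
    {"emp_id": "1001", "contact": "pat@example.org",
     "driver_license_no": "D0000000", "tax_id": "000-00-0000"},
    {"emp_id": "1002", "contact": "n/a", "driver_license_no": "", "tax_id": ""},
]

flagged = flag_sensitive_fields(sample_rows)
```

The flagged field names could then be recorded in the data dictionary using the standard elements for identifying private or sensitive data.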

Tips for success

- Partner with your IT group, your information security officer, and your chief privacy officer (if applicable).

- Educate your staff, building on existing mandatory IT security training. Cover data classification processes – including legal criteria and responsibilities.

- Actively identify and assess your risks – and where applicable, integrate this activity with your agency’s enterprise risk management processes.

- Integrate identifying and managing private and sensitive data throughout the data management life cycle – from collection through retention and disposal.

- Establish a source system of record for identifying private and sensitive data sets and data elements – your metadata repository is a good candidate.

- Tailor your processes for data classification and protection to the level of risk – avoid creating overly stringent and time-consuming processes that are not warranted. (As an example, an employee name and email address are technically personally identifying information (PII), but the level of risk is lower than for an employee’s social security number.)

Privacy Impact Assessments

“A privacy impact assessment is an analysis of how personally identifiable information is handled to ensure that handling conforms to applicable privacy requirements, determine the privacy risks associated with an information system or activity, and evaluate ways to mitigate privacy risks. A privacy impact assessment is both an analysis and a formal document that details the process and the outcome of the analysis.”

Example(s)

- Texas DOT (TXDOT) hired a Chief Privacy Officer, with responsibility for data governance and records management. The agency has adopted the Fair Information Practice Principles, developed a privacy manual and periodically conducts Privacy Impact Assessments for major data systems.

Data Governance Monitoring and Reporting

What is data governance monitoring and reporting?

Data governance monitoring and reporting involves tracking your accomplishments and indicators of progress towards achieving your anticipated goals. It also includes gathering and analyzing additional information that helps you to target your data governance activities for maximum impact.

Why is monitoring and reporting important?

Monitoring and reporting provide accountability for the data governance function. They enable the data governance team to communicate to the agency leadership, sponsors, and stakeholders what has been accomplished and how it has helped to achieve the intended outcomes. This information is valuable to have during annual budgeting cycles, when every program and function may be subject to scrutiny. It is especially helpful in situations where there has been a change in agency leadership and the new leaders need to be briefed about data governance.

Monitoring also provides important feedback to the data governance team that helps them to fine tune tactics and improve based on experience. For example, an employee survey can yield information about the level of awareness of data security classifications that can be used to determine whether further information dissemination on this topic is needed.

Approaches to monitoring data governance accomplishments and benefits

In initial stages of data governance implementation, monitoring and reporting can be kept simple and focus on tracking activities and participation levels. Once data governance has been established and there are specific initiatives underway, a handful of key indicators reflecting outcomes can be introduced. Specific steps that can be considered are as follows:

- Maintain an activity log to track meetings, presentations, and milestones such as task or initiative completion, policy approval, or staff hires.

- Maintain a file with data governance group meeting notes. Use a standard template for the meeting notes that includes participants present/absent, agenda items, decisions, and action items.

- Maintain a file with data governance artifacts, which may include: policies, charters, guidance documents, fact sheets, templates, standards, and position descriptions.

- Ask data governance participants and stakeholders to provide feedback (positive and constructive) via email and maintain a file with this feedback. Review this feedback and consider ways to act on any suggestions for improvement.

- Produce an annual report or presentation slide deck that summarizes planned activities, accomplishments, successes, and new challenges to be tackled.

- Once you have one or more initiatives well underway, begin to track additional indicators. Table 5 below provides a list of indicators to consider.

Example(s)

Caltrans conducts a data management and governance maturity assessment every two years and tracks progress towards target maturity levels. The data governance team maintains a data governance work plan and tracks the status of each item. They brief members of the data governance bodies regularly on the status of the work plan items.

Table 5. Data Governance Monitoring and Reporting: Sample Indicators

| Category | Indicator |
|---|---|
| Data Governance Maturity | Change in data governance maturity level (measured using periodic assessments) |
| Obligations Met | On-time provision of required data or reports to external parties |
| Accomplishments: General | Achievement or delivery of planned tasks, deliverables, and milestones |
| Accomplishments: People | Number of stewards identified and onboarded |
| | Number of outreach meetings or training sessions held |
| | Number or percent of senior managers briefed |
| | Number or percent of target employees trained |
| Accomplishments: Governed Data | Number of data catalog entries added/validated |
| | Number of business glossary entries added/validated |
| | Number of enterprise/agency/corporate datasets identified and under governance (which could be defined as catalogued, complete metadata, business rules, and data quality management) |
| | Number or percent of priority datasets with standard metadata complete |
| | Number of data quality management plans produced/updated |
| | Number of business rules documented or coded within a data quality tool |
| Accomplishments: Standardized Data | Percent of target data entities mastered |
| | Number of data element standards adopted |
| Accomplishments: Risk Reduction | Reduction in number of unmanaged desktop datasets classified as having enterprise or agency-wide data |
| | Number of data security or privacy audits completed (or percent of target systems/datasets with a completed audit within the last 3 years) |
| Activity/Use of Resources | Number of hits on data governance resources (website, catalog, glossary, metadata repository) - trendline |
| | Use of available repositories or portals for data sharing - # reports, # hits, # queries, # registered users |
| Employee Perceptions and Awareness | Change in employee ratings of: Ease of finding, accessing, and using data; ease of sharing data; time to produce useful reports; data quality and consistency (measured through baseline and follow up employee surveys) |
| | Employee awareness of: data governance bodies, data stewardship roles and responsibilities, policies, standards, private and sensitive data elements, data sharing practices (measured through employee surveys) |
| | Satisfaction rating for the data governance team’s service delivery (measured through survey following service delivery) |
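Some of these indicators can be computed directly from a data catalog export. As one example, the sketch below calculates the percent of priority datasets with standard metadata complete. The catalog structure, the list of required metadata fields, and the sample entries are assumptions made for illustration.

```python
# Hypothetical set of metadata fields the agency treats as "standard".
REQUIRED_FIELDS = ("description", "steward", "update_frequency")


def percent_complete(datasets, required_fields):
    """Percent of priority datasets whose required metadata are all filled."""
    priority = [d for d in datasets if d.get("priority")]
    if not priority:
        return 0.0
    complete = sum(
        1 for d in priority
        if all(d["metadata"].get(f) for f in required_fields)
    )
    return round(100.0 * complete / len(priority), 1)


# Made-up catalog entries: three priority datasets, two fully documented.
catalog = [
    {"name": "crash_records", "priority": True,
     "metadata": {"description": "Crash data", "steward": "Safety", "update_frequency": "daily"}},
    {"name": "bridge_inventory", "priority": True,
     "metadata": {"description": "Bridges", "steward": "Structures", "update_frequency": "annual"}},
    {"name": "pavement_condition", "priority": True,
     "metadata": {"description": "Pavement ratings", "steward": "", "update_frequency": "annual"}},
    {"name": "office_supplies", "priority": False, "metadata": {}},
]

indicator = percent_complete(catalog, REQUIRED_FIELDS)
```

Recomputing the value on a regular schedule and recording each result would provide the trendline needed for annual reporting.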

Tips for success

- Engage your sponsors and data governance team members in developing your monitoring approach.

- Regularly assess and adjust your indicators so that they are meaningful to both the governance team and the sponsors.

- Select a small number of indicators that are feasible to track and then expand in the future.

- Use your Action Plan to track key milestones and activities.

- Keep a list of success stories and a file of testimonials.

- Take advantage of automated ways of tracking activities (such as website hits and downloads).

Summary

This chapter has provided basic guidance on several common data governance initiatives that DOTs can pursue. See the resources and references in Chapters 7 and 8 for additional information.