The Future of Air Traffic Control: Human Operators and Automation (1998)

Chapter: 1 Automation Issues in Air Traffic Management

The pressures for automation of the air traffic control system originate from three primary sources: the need for improved safety and efficiency (which may include flexibility, potential cost savings, and reductions in staffing); the availability of the technology; and the desire to support the controller.

Even given the current very low accident rate in commercial and private aviation, the need remains to strive for even greater safety levels: this is a clearly articulated implication of the "zero accident" philosophy of the Federal Aviation Administration (FAA) and of current research programs of the National Aeronautics and Space Administration (NASA). Naturally, solutions for improved air traffic safety do not need to be found only in automation; changing procedures, improving training and selection of staff, and introducing technological modernization programs that do not involve automation per se may be alternative ways of approaching the goal. Yet increased automation is one viable approach in the array of possibilities, as reflected in the myriad of systems described in Section II.

The need for improvement is perhaps more strongly driven by the desire to improve efficiency without sacrificing current levels of safety. Efficiency pressures are particularly strong from the commercial air carriers, which operate with very thin profit margins, and for which relatively short delays can translate into very large financial losses. For them it is desirable to substantially increase the existing capacity of the airspace (including its runways) and to minimize disruptions that can be caused by poor weather, inadequate air traffic control equipment, and inefficient air routes. The forecast of increasing traffic demands over the next several decades exacerbates these pressures. Of course, as with safety, so with efficiency: advanced air traffic control automation is not the only solution. In particular, the concept of free flight (RTCA, 1995a, 1995b; Planzer and Jenny, 1995) is a solution that allocates greater responsibility for flight path choice and traffic separation to pilots (i.e., between human elements), rather than necessarily allocating more responsibility to automation. Automation is viewed as a viable alternative way to meet the demands for increased efficiency. Furthermore, it should be noted that free flight does depend to some extent on advanced automation and also that, from the controller's point of view, the perceived loss of authority, whether it is lost to pilots (via free flight) or to automation, may have equivalent human factors implications for design of the controller's workstation.

It is, of course, the case that automation is made possible by the existence of technology. It is also true that, in some domains, automation is driven by the availability of technology; the thinking is, "the automated tools are developed, so they should be used." Developments in sensor technology and artificial intelligence have enabled computers to become better sensors and pattern recognizers, as well as better decision makers, optimizers, and problem solvers. The extent to which computer skills reach or exceed human capabilities in these endeavors is subject to debate and is certainly quite dependent on context. However, we reject the position that the availability of computer technology should be a reason for automation in and of itself. It should be considered only if such technology has the capability of supporting legitimate system or human operator needs.

Automation has the capability both to compensate for human vulnerabilities and to better support and exploit human strengths. In the Phase I report, we noted controller vulnerabilities (typical of the vulnerabilities of skilled operators in other systems) in the following areas:

- Monitoring for and detection of unexpected low-frequency events,

- Expectancy-driven perceptual processing,

- Extrapolation of complex four-dimensional trajectories, and

- Use of working memory to either carry out complex cognitive problem solving or to temporarily retain information.

In contrast to these vulnerabilities, when controllers are provided with accurate and enduring (i.e., visual rather than auditory) information, they can be very effective at solving problems, and if such problem solving demands creativity or access to knowledge from more distantly related domains, their problem solving ability can clearly exceed that of automation. Furthermore, to the extent that accurate and enduring information is shared among multiple operators (i.e., other controllers, dispatchers, and pilots), their collaborative skills in problem solving and negotiation represent important human strengths to be preserved. In many respects, the automated capabilities of data storage, presentation, and communications can facilitate these strengths.

As we discuss further in the following pages, current system needs and the availability of some technology provide adequate justification to continue the development and implementation of some forms of air traffic control automation. But we strongly argue that this continuation should be driven by the philosophy of human-centered automation, which we characterize as follows:

The choice of what to automate should be guided by the need to compensate for human vulnerabilities and to exploit human strengths. The development of the automated tools should proceed with the active involvement of both users and trained human factors practitioners. The evaluation of such tools should be carried out with human-in-the-loop simulation and careful experimental design. The introduction of these tools into the workplace should proceed gradually, with adequate attention given to user training, to facility differences, and to user requirements. The operational experience from initial introduction should be very carefully monitored, with mechanisms in place to respond rapidly to the lessons learned from the experiences.

In this report, we provide examples of good and bad practices in the implementation of human-centered design.

LEVELS OF AUTOMATION

The term automation has been defined in a number of ways in the technical literature. It is defined by some as any introduction of computer technology where it did not exist before. Other definitions restrict the term to computer systems that possess some degree of autonomy. In the Phase I report we defined automation as: "a device or system that accomplishes (partially or fully) a function that was previously carried out (partially or fully) by a human operator." We retain that definition in this volume.

For some in the general public the introduction of automation is synonymous with job elimination and worker displacement. In fact, in popular writing, this view leads to concerns that automation is something to be wary or even fearful of. While we acknowledge that automation can have negative, neutral, or even positive implications for job security and worker morale, these issues are not the focus of this report. Rather we use this definition to introduce and evaluate the relationships between individual and system performance on one hand and the design of the kinds of automation that have been proposed to support air traffic controllers, pilots, and other human operators in the safe and efficient management of the national airspace on the other.

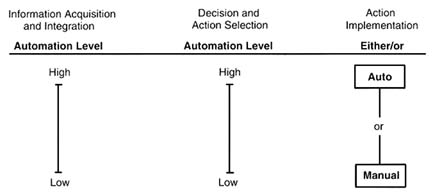

In the Phase I report we noted that automation does not refer to a single either-or entity. Rather, forms of automation can be considered to vary across a continuum of levels. The notion of levels of automation has been proposed by several authors (Billings, 1996a, 1996b; Parasuraman et al., 1990; Sheridan, 1980). In the Phase I report, we identified a 10-level scale that can be thought of as representing low to high levels of automation (Table 1.1). In this report we expand on that scale in three important directions: (1) differentiating the automation of decision and action selection from the automation of information acquisition; (2) specifying an upper bound on automation of decision and action selection in terms of task complexity and risk; and (3) identifying a third dimension, related to the automation of action implementation.

First, in our view, the original scale best represents the range of automation for decision and action selection. A parallel scale, to be described, can be applied to the automation of information acquisition. These scales reflect qualitative, relative levels of automation and are not intended to be dimensional, ordinal representations.

Acquisition of information can be considered a separate process from action selection. In both human and machine systems, there are (1) sensors that may vary in their sophistication and adaptability and (2) effectors (actuators) that have feedback control attached to do precise mechanical work according to plan. Eyes, radars, and information networks are examples of sensors, whereas hands and numerically controlled industrial robots are examples of effectors. We recognize that information acquisition and action selection can and do interact through feedback loops and iteration in both human and machine systems. Nevertheless, it is convenient to consider automation of information acquisition and action selection separately in human-machine systems.

Second, we suggest that specifications for the upper bounds on automation of decision and action selection are contingent on the level of task uncertainty. Finally, we propose a third scale that in this context is dichotomous, related to the automation of action implementation, applicable at the lower-levels of automation

TABLE I.1 Levels of Automation

|

Scale of Levels of Automation of Decision and Control Action |

||

|

HIGH |

10. |

The computer decides everything and acts autonomously, ignoring the human. |

|

|

9. |

informs the human only if it, the computer, decides to |

|

|

8. |

informs the human only if asked, or |

|

|

7. |

executes automatically, then necessarily informs the human, and |

|

|

6. |

allows the human a restricted time to veto before automatic execution, or |

|

|

5. |

executes that suggestion if the human approves, or |

|

|

4. |

suggests one alternative, and |

|

|

3. |

narrows the selection down to a few, or |

|

|

2. |

The computer offers a complete set of decision/action alternatives, or |

|

LOW |

1. |

The computer offers no assistance: the human must take all decisions and actions. |

FIGURE 1.1 Three-scale model of levels of automation.

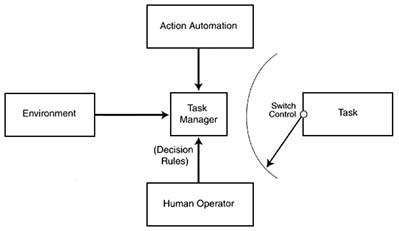

of decision and action selection. The overall structure of this model is shown in Figure 1.1, and the components of the model are described in more detail as follows.

Information Acquisition

Computer-based automation can apply to any or all of at least six relatively independent features involving operations performed on raw data:

- Filtering. Filtering involves selecting certain items of information for recommended operator viewing (e.g., a pair of aircraft that would be inferred to be most relevant for conflict avoidance or a set of aircraft within or about to enter a sector). Filtering may be accomplished by guiding the operator to view that information (e.g., highlighting relevant items while graying out less relevant or irrelevant items; Wickens and Yeh, 1996); total filtering may be accomplished by suppressing the display of irrelevant items. Automation devices may vary extensively in terms of how broadly or narrowly they are tuned.

- Information Distribution. Higher levels of automation may flexibly provide more relevant information to specific users, filtering or suppressing the delivery of that same information to those for whom it is judged to be irrelevant.

- Transformations. Transformations involve operations in which the automation functionality either integrates data (e.g., computing estimated time to contact on the basis of data on position, heading, and velocity from a pair of aircraft) or otherwise performs a mathematical or logical operation on the data (e.g., converting time-to-contact into a priority score). Higher levels of automation transform and integrate raw data into a format that is more compatible with user needs (Vicente and Rasmussen, 1992; Wickens and Carswell, 1995).

- Confidence Estimates. Confidence estimates may be applied at higher levels of automation, when the automated system can express graded levels of certainty or uncertainty regarding the quality of the information it provides (e.g., confidence in resolution and reliability of radar position estimates).

- Integrity Checks. Integrity checks involve ensuring the reliability of sensors by connecting and comparing various sensor sources.

- User Request Enabling. User request enabling involves the automation's understanding specific user requests for information to be displayed. If such requests can be understood only if they are expressed in restricted syntax (e.g., a precisely ordered string of specific words or keystrokes), it is a lower level of automation. If requests can be understood in less restricted syntax (e.g., natural language), it is a higher level of automation.

The level of automation in information acquisition and integration, represented on the left scale of Figure 1.1, can be characterized by the extent to which a system possesses high levels on each of the six features. A system with the highest level of automation would have high levels on all six features.

Decision and Action Selection and Action Implementation

Higher levels of automation of decision and action selection define progressively fewer degrees of freedom for humans to select from a wide variety of actions (Table 1.1 and the middle scale of Figure 1.1). At levels 2 to 4 on the scale, systems can be developed that allow the operator to execute the advised or recommended action manually (e.g., speaking a clearance) or via automation (e.g., relaying a suggested clearance via data link by a single computer input response). The manual option is not available at the higher levels of automation of decision and action selection. Hence, the dichotomous action implementation scale applies only to the lower levels of automation of decision and action selection.

Finally, we note that control actions can be taken in circumstances that have more or less uncertainty or risk in their consequences, as a result of more or less uncertainty in the environment. For example, the consequences of an automated decision to hand off an aircraft to another controller are easily predictable and of relatively low risk. In contrast, the consequences of an automation-transmitted clearance or instruction delivered to an aircraft are less certain; for example, the pilot may be unable to comply or may follow the instruction incorrectly. We make the important distinction between lower-level decision actions in the former case (low uncertainty) and higher-level decision actions in the latter case (high uncertainty and risk). Tasks with higher levels of uncertainty should be constrained to lower levels of automation of decision and action selection.
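The two constraints just described (a manual implementation option only at levels 2 to 4, and a lower ceiling on automation for high-uncertainty tasks) can be sketched in a few lines of Python. This is an illustrative sketch only: the function names are hypothetical, and the ceiling of 4 used for high-uncertainty tasks is an assumed placeholder, since the text does not state a specific cutoff.

```python
# Levels refer to the 10-level scale of Table 1.1.
LOWEST_LEVEL, HIGHEST_LEVEL = 1, 10

# Per the text, the manual/automatic implementation choice exists at levels 2 to 4.
MANUAL_OPTION_LEVELS = range(2, 5)


def manual_implementation_available(level: int) -> bool:
    """True if the operator may still carry out the advised action by hand."""
    if not LOWEST_LEVEL <= level <= HIGHEST_LEVEL:
        raise ValueError("level must be between 1 and 10")
    return level in MANUAL_OPTION_LEVELS


def max_allowed_level(high_uncertainty: bool, ceiling_if_uncertain: int = 4) -> int:
    """Upper bound on automation of decision and action selection.

    ceiling_if_uncertain is an ASSUMED value for illustration; the report
    says only that high-uncertainty tasks belong at lower levels.
    """
    return ceiling_if_uncertain if high_uncertainty else HIGHEST_LEVEL


# A handoff (low uncertainty) versus an uplinked clearance (high uncertainty).
print(max_allowed_level(high_uncertainty=False))
print(max_allowed_level(high_uncertainty=True))
print(manual_implementation_available(3), manual_implementation_available(7))
```

Keeping the ceiling as a parameter rather than a constant reflects the fact that the appropriate bound depends on the task and its risk.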

The concluding chapter of the Phase I report examined the characteristics of automation in the current national airspace system. Several aspects of human interaction with automation were examined, both generally and in the specific context of air traffic management. In this chapter, we discuss system reliability and recovery.

SYSTEM PERFORMANCE

System Reliability

Automation is rarely a human factors concern unless it fails or functions in an unintended manner that requires the human operator to become involved. Therefore, of utmost importance for understanding the human factors consequences of automation are the tools for predicting the reliability (inverse of failure rate) of automated systems. We consider below some of the strengths and limitations of reliability analysis (Adams, 1982; Dougherty, 1990).

Analysis Techniques

Reliability analysis, and its closely related methodology of probabilistic risk assessment, have been used to determine the probability of major system failure for nuclear power plants, and similar applications may be forthcoming for air traffic control systems. There are several popular techniques that are used together.

One is fault tree analysis (Kirwan and Ainsworth, 1992), wherein one works backward from the "top event," the failure of some high-level function, and asks what major systems must have failed in order for this failure to occur. This is usually expressed in terms of a fault tree, a graphical diagram of systems with ands and ors on the links connecting the second-level subsystems to the top-level system representation. For example, radar fails if any of the following fails: the radar antennas and drives, or the computers that process the radar signals, or the radar displays, or the air traffic controller's attention to the displays. This amounts to four nodes connected by or links to the node representing failure of the radar function. At a second level, for example, computer failure occurs if both the primary and the backup computers fail. Each computer, in turn, can experience a software failure or a hardware failure or a power failure or failure because of an operator error. In this way, one builds up a tree that branches downward from the top event according to the and-/or-gate logic of both machine and human elements interacting. The analysis can be carried downward to any level of detail. By putting probabilities on the events, one can study their effects on the top event. As the above example suggests, system components depending on and-gate inputs are far more robust to failures (and hence more reliable) than those depending on or-gate inputs.
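The radar example can be worked through numerically. The following Python sketch is illustrative only: it assumes that all basic events are statistically independent and assigns every one of them the same made-up failure probability (0.01), which is not a figure from any real system.

```python
from math import prod


def or_gate(*probabilities: float) -> float:
    """Output fails if ANY input fails (inputs assumed independent)."""
    return 1.0 - prod(1.0 - p for p in probabilities)


def and_gate(*probabilities: float) -> float:
    """Output fails only if ALL inputs fail (inputs assumed independent)."""
    return prod(probabilities)


P_BASIC = 0.01  # hypothetical probability for every basic event

# Each computer: software OR hardware OR power OR operator error.
computer = or_gate(P_BASIC, P_BASIC, P_BASIC, P_BASIC)

# Computer function: primary AND backup must both fail.
computers = and_gate(computer, computer)

# Radar function: antennas OR computers OR displays OR controller attention.
radar = or_gate(P_BASIC, computers, P_BASIC, P_BASIC)

print(f"one computer fails:     {computer:.5f}")
print(f"both computers fail:    {computers:.5f}")
print(f"radar function fails:   {radar:.5f}")
```

Under these assumptions the and-gated computer pair fails far less often than any single basic event, while the or-gated radar function fails more often than any of its inputs, which is the robustness contrast noted above. The independence assumption is itself questionable here: a shared power supply, for instance, would make the two computers fail together.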

Another popular technique is event tree analysis (Kirwan and Ainsworth, 1992). Starting from some malfunction, the analyst considers what conditions may lead to other possible (and probably more serious) malfunctions, and from the latter malfunction what conditions may produce further malfunctions. Again, probabilities may be assigned to study the relative effects on producing the most serious (downstream) malfunctions.
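A simplified event tree can be enumerated in the same spirit. In the sketch below, the initiating malfunction, the two downstream stages, and all probabilities are hypothetical; each stage is also assumed to have a fixed failure probability regardless of what happened earlier, which a real event tree would not necessarily assume.

```python
from itertools import product


def event_tree(p_initiating: float, stage_failure_probs: list[float]) -> dict:
    """Enumerate every branch of a forward-branching event tree.

    Returns a dict mapping a tuple of booleans (True = that stage failed)
    to the probability of reaching that end state.
    """
    outcomes = {}
    for branch in product((False, True), repeat=len(stage_failure_probs)):
        p = p_initiating
        for failed, p_fail in zip(branch, stage_failure_probs):
            p *= p_fail if failed else 1.0 - p_fail
        outcomes[branch] = p
    return outcomes


# Hypothetical chain: a display malfunction occurs (1e-3), then the backup
# display may fail to engage (0.05), then a conflict may go unnoticed (0.10).
tree = event_tree(1e-3, [0.05, 0.10])
worst_case = tree[(True, True)]

for branch, p in sorted(tree.items()):
    print(branch, f"{p:.2e}")
```

The branch in which every downstream stage fails corresponds to the most serious malfunction; the branch probabilities sum to the probability of the initiating event.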

Such techniques can provide two sorts of outputs (there are others, such as cause-consequence diagrams, safety-state Markov diagrams, etc.; Idaho National Engineering Laboratory, 1997). On one hand, they may produce what appear to be "hard numbers" indicating the overall system reliability (e.g., .997). For reasons we describe below, such numbers must be treated with extreme caution. On the other hand, reliability analysis may allow one to infer the most critical functions of the human operator relative to the machinery. In one such study performed in the nuclear safety context, Hall et al. (1981) showed the insights that can be gained without even knowing precisely the probabilities for human error. They simply assumed human error rates (for given machine error rates) and performed the probability analysis repeatedly with different multipliers on the human error rate. The computer, after all, can do this easily once the fault tree or event tree structure is programmed in. The authors were able to discover the circumstances for which human error made a big difference, and when it did not. Finally, it should be noted that the very process of carrying out reliability analysis can act as a sort of audit trail, to ensure that the consequences of various improbable but not impossible events are considered.
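The multiplier approach attributed to Hall et al. can be imitated on a toy fault tree. The sketch below is not their model: the tree structure, the baseline probabilities, and the multipliers are all assumptions chosen to show how the same human error rate can matter greatly in one configuration and hardly at all in another.

```python
from math import prod


def or_gate(*probabilities: float) -> float:
    """Output fails if any input fails (inputs assumed independent)."""
    return 1.0 - prod(1.0 - p for p in probabilities)


def and_gate(*probabilities: float) -> float:
    """Output fails only if all inputs fail (inputs assumed independent)."""
    return prod(probabilities)


def top_event(p_machine: float, p_human: float, p_backup_fails: float) -> float:
    """Hypothetical tree: machine fails, OR (human errs AND backup fails)."""
    return or_gate(p_machine, and_gate(p_human, p_backup_fails))


P_MACHINE = 1e-3      # assumed machine failure probability
P_HUMAN_BASE = 1e-3   # assumed baseline human error probability
MULTIPLIERS = (0.1, 1.0, 10.0)


def sweep(p_backup_fails: float) -> list[float]:
    """Recompute the top event for each multiplier on the human error rate."""
    return [
        top_event(P_MACHINE, min(1.0, m * P_HUMAN_BASE), p_backup_fails)
        for m in MULTIPLIERS
    ]


no_backup = sweep(p_backup_fails=1.0)     # nothing catches the human error
with_backup = sweep(p_backup_fails=1e-3)  # an automated check usually catches it

spread_no_backup = no_backup[-1] / no_backup[0]
spread_with_backup = with_backup[-1] / with_backup[0]
print(f"no backup:   top event changes by a factor of {spread_no_backup:.2f}")
print(f"with backup: top event changes by a factor of {spread_with_backup:.2f}")
```

A hundredfold change in the assumed human error rate moves the top event by roughly an order of magnitude when nothing backs up the human, and by about one percent when a reliable backup is and-gated with the human. The conclusion about where human error matters does not depend on knowing the true human error rate.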

Although reliability analysis is a potentially valuable tool for understanding the sensitivity of system performance to human error (human "failure"), as we noted above, one must use great caution in trusting the absolute numbers that may be produced, for example, when using these numbers as targets for system design, as was done with the advanced automation system (AAS). There are at least four reasons for such caution, two of which we discuss briefly, and two in greater depth. In the first place, any such number (e.g., r = .997) is an estimate of a mean. But what must be considered in addition is the estimate of the variability around that mean, to determine best-case and worst-case situations. Variance estimates tend to be very large relative to the mean for probabilities that are very close to 0 or 1.0. And with large variance estimates (uncertainty of the mean), the mean value itself has less meaning.
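One way to make the point about variability concrete is to treat the failure probability q = 1 - r as if it had been estimated from n independent trials. That binomial framing is an assumption introduced here for illustration, not the method of any particular reliability study, and the trial count is invented.

```python
from math import sqrt


def relative_standard_error(q: float, n: int) -> float:
    """Standard error of a binomial proportion divided by the proportion.

    For an estimate q from n independent trials the standard error is
    sqrt(q * (1 - q) / n); dividing by q gives sqrt((1 - q) / (n * q)).
    """
    return sqrt((1.0 - q) / (n * q))


N_TRIALS = 1000  # hypothetical number of observed demands

for q in (0.3, 0.03, 0.003):
    rse = relative_standard_error(q, N_TRIALS)
    print(f"r = {1 - q:.3f}: failure estimate uncertain by about {rse:.0%}")
```

Under these assumptions, the uncertainty in the estimated failure probability grows from a few percent of its value at r = .7 to more than half of its value at r = .997, so the best-case and worst-case figures for a highly reliable system can differ by a large factor.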

A second problem with reliability analysis pertains to unforeseen events. It seems to be a given that things can fail in the world, failures that the analysts have no way of predicting. For example, it is doubtful that any reliability analyst would have been able to project, in advance, the likelihood that a construction worker would sever the power supply to the New York TRACON with a backhoe loader, let alone have provided a reliable estimate of the probability of such an event's occurring.

The two further concerns related to the hard numbers of reliability analysis are the extreme difficulties of making reliability estimates of two critical components in future air traffic control automation: the human and the software. Because of their importance, each of these is dealt with in some detail.

Human Reliability Analysis

Investigators in the nuclear power industry have proposed that engineering reliability analysis can be extended to incorporate the human component (Swain, 1990; Miller and Swain, 1987). If feasible, such extension would be extremely valuable in air traffic control, given the potential for two kinds of human error to contribute to the loss of system reliability: errors in actual operation (e.g., a communications misunderstanding, an overlooked altitude deviation) and errors in system set-up or maintenance. Some researchers have pointed out the difficulty of applying human reliability analysis to derive hard numbers, as opposed to doing the sort of sensitivity analysis described above (Adams, 1982; Wreathall, 1990). The fundamental difficulties of this technique revolve around the estimation of the component reliabilities and their aggregation through traditional analysis techniques. For example, it is very hard to get meaningful estimates of human error rates, because human error is so context driven (e.g., by fatigue, stress, expertise level) and because the source of cognitive errors remains poorly understood. Although this work has progressed, massive data collection efforts will be necessary in the area of air traffic control, in order to form even partially reliable estimates of these rates.

A second criticism concerns the general assumptions of independence that underlie the components in an event or fault tree. Events at levels above (in a fault tree) or below (in an event tree) are assumed to be independent, yet human operators show two sorts of dependencies that are difficult to predict or quantify (Adams, 1982). For one thing, there are possible dependencies between two human components. For example, the developmental controller may be reluctant to call into question an error that he or she noticed that was committed by a more senior, full-performance-level controller at the same console. For another thing, there are poorly understood dependencies between human and system reliabilities, related to trust calibration, which we discuss later in this chapter. For example, a controller may increase his or her own level of vigilance to compensate for an automated component that is known to be unreliable; alternatively, in the face of frustration with the system, a controller may become stressed or confused and show decreased reliability.
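The cost of wrongly assuming independence can be illustrated with the two-controller example. All numbers below are hypothetical; the point is only the ratio between the two results.

```python
def joint_failure(p_first: float, p_second_given_first: float) -> float:
    """P(both fail) = P(first fails) * P(second fails | first failed)."""
    return p_first * p_second_given_first


P_SENIOR_ERROR = 0.01            # assumed: senior controller commits an error
P_MISS_INDEPENDENT = 0.01        # assumed: second controller misses an error in general
P_MISS_GIVEN_SENIOR_ERROR = 0.5  # assumed: reluctance to challenge a senior colleague

# What an and-gate with independent inputs would report.
independent = joint_failure(P_SENIOR_ERROR, P_MISS_INDEPENDENT)

# What happens if the second failure depends on who made the first error.
dependent = joint_failure(P_SENIOR_ERROR, P_MISS_GIVEN_SENIOR_ERROR)

underestimate_factor = dependent / independent
print(f"independent model: {independent:.1e}")
print(f"dependent model:   {dependent:.1e}")
print(f"independence understates the risk by a factor of {underestimate_factor:.0f}")
```

With these assumed values the independence model understates the joint failure probability fiftyfold. Because the conditional probability is exactly the quantity that is hard to predict or quantify, the apparent protection offered by an and-gate over two human checks may be much weaker than the tree suggests.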

Software Reliability Analysis

Hardware reliability is generally a function of manufacturing failures or the wearing out of components. With sophisticated testing, it is possible to predict how reliable a piece of hardware will be according to measures such as mean time between failures. Measuring software reliability, however, is a much more difficult problem. For the most part, software systems need to fail in real situations, in order to discover bugs. Generally, many uses are required before a piece of software is considered reliable. According to Parnas et al. (1990), failures in software are the result of unpredictable input sequences. Predicting failure rate is based on the probability of encountering an input sequence that will cause the system to fail. Trustworthiness is defined by the extent to which a catastrophic failure or error may occur; software is trusted to the extent that the probability of a serious flaw is low. Testing for trustworthiness is difficult because the number of states and possible input sequences is so large that the probability of an error's escaping attention is high.

For example, the loss of the Airbus A330 in Toulouse in June 1994 (Dornheim, 1995) was attributed to autoflight logic behavior changing dramatically under unanticipated circumstances. In the altitude capture mode, the software creates a table of vertical speed versus time to achieve smooth level-off. This is a fixed table based on the conditions at the time the mode is activated. In this case, due to the timing of events involving a simulated engine failure, the automation continued to operate as though full power from both engines was available. The result was steep pitchup and loss of air speed—the aircraft went out of control and crashed.

There are a number of factors that contribute to the difficulty of designing highly reliable software. First is complexity. Even with small software systems, it is common to find that a programmer requires a year of working with the program before he or she can be trusted to make improvements on his or her own. Second is sensitivity to error. In manufacturing, hardware products are designed within certain acceptable tolerances for error; it is possible to have small errors with small consequences. In software, however, tolerance is not a useful concept because trivial clerical errors can have major consequences.

Third, it is difficult to test software adequately. Since mathematical functions implemented by software are not continuous, it is necessary to perform an extremely large number of tests. In continuous function systems, testing is based on interpolation between two points—devices that function well on two close points are assumed to function well at all points in between. This assumption is not possible for software, and because of the large number of states it is not possible to do enough testing to ensure that the software is correct. If there is a good model of operating conditions, then software reliability can be predicted using mathematical models. Generally, good models of operating conditions are not available until after the software is developed.
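A small example shows why passing tests at two nearby inputs says nothing about the input between them. The function below is a hypothetical helper written for this illustration, not code from any air traffic control system.

```python
def time_to_contact_min(distance_nm: float, closing_speed_kt: int) -> float:
    """Minutes until two aircraft meet at a constant closing speed (hypothetical)."""
    return 60.0 * distance_nm / closing_speed_kt


def runs_without_error(closing_speed_kt: int) -> bool:
    """Minimal test oracle: does the call complete at all?"""
    try:
        time_to_contact_min(10.0, closing_speed_kt)
        return True
    except ZeroDivisionError:
        return False


# Two test points one knot either side of zero both pass...
neighbours_pass = runs_without_error(-1) and runs_without_error(1)

# ...but the single input between them crashes the function.
between_passes = runs_without_error(0)

print("tests at -1 kt and +1 kt pass:", neighbours_pass)
print("untested input 0 kt passes:   ", between_passes)
```

In a continuous physical device, acceptable behavior at two close points would justify confidence in the points between them. Here the one untested value, two aircraft holding a constant separation, is exactly the one that fails, and only a test at that specific input would reveal it.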

Some steps can be taken to reduce the probability of errors in software. Among them is conducting independent validation using researchers and testing personnel who were not involved in development. Another is to ensure that the software is well documented and structured for review. Reviews should cover the following questions:

- Are the correct functions included?

- Is the software maintainable?

- For each module, are the algorithms and data structures consistent with the specified behavior?

- Are codes consistent with algorithms and data structures?

- Are the tests adequate?

Yet another step is to develop professional standards for software engineers that include an agreed-upon set of skills and knowledge.

Recently, the capability maturity model (CMM) for software has been proposed as a framework for encouraging effective software development. This model covers practices of planning, engineering, and managing software development and maintenance. It is intended to improve the ability of organizations to meet goals for cost, schedule, functionality, and product quality. The model includes five levels of achieving a mature software process. Organizations at the highest level can be characterized as continuously improving the range of their process capability and thereby improving the performance of their projects. Innovations that use the best software engineering practices are identified and transferred throughout the organization. In addition, these organizations use data on the effectiveness of software to perform cost-benefit analyses of new technologies as well as proposed changes to the software development process.

Conclusion

Although the concerns described above collectively suggest extreme caution in trusting the mean numbers that emerge from a reliability analysis conducted on complex human-machine systems like air traffic control, we wish to reiterate the importance of such analyses in two contexts. First, merely carrying out the analysis can provide the designer with a better understanding of the relationships between components and can reveal sources of possible failures for which safeguards can be built. Second, appropriate use of the tools can provide good sensitivity analyses of the importance (in some conditions) or nonimportance (in others) of human failure.

System Failure and Recovery

Less than perfect reliability means that automation-related system failures can degrade system performance. Later in this chapter we consider the human performance issues associated with the response to such failures and automation-related anomalies in general. Here we address the broader issue of failure recovery from a system-wide perspective. We first consider some of the generic properties of failure modes that affect system recovery and then provide the framework for a model of failure recovery, that is, the capability of the team of human controllers to recover and restore safety to an airspace within which some aspect of computer automation has failed.

We distinguish here between system failures and human operator (i.e., controller) failures or errors. The latter are addressed later in this chapter and in the Phase I report. System failures are often due to failures or errors of the humans involved with other aspects of the air traffic control system. They include system designers, whose design fails to anticipate certain characteristics of operations; those involved in fabrication, test, and certification; and system maintainers (discussed in Chapter 7). Personnel at any of these levels can be responsible for a "failure event" imposed on air traffic control staff controlling live traffic. It is the nature of such an event that concerns us here. We also use the term system failure to include relatively catastrophic failures of aircraft handling because of mechanical damage or undesirable pilot behavior.

System failures can differ in their severity, their time course, their complexity, and the existing conditions at the time of the failure.

- Severity differences relate to the system safety consequences. For example, a failed light on a console can be easily noticed and replaced, with minimal impact on safe traffic handling. A failed radar display will have a more serious impact, and a failed power supply to an entire facility will have consequences that are still more serious. As we detail below, the potential seriousness of failures is related to existing conditions.

- In terms of time courses, failures may be abrupt (catastrophic), intermittent, or gradual. Abrupt failures, like a power outage, are to some extent more serious because they allow the operator little time to prepare for intervention. At the same time, they do have the advantage of being more noticeable, whereas gradual failures may degrade system capabilities in ways that are not noticeable (e.g., the gradual loss of resolution of a sensor, like a radar). Intermittent failures are also inherently difficult to diagnose because of the difficulty in confirming the diagnosis.

- Complexity refers to single versus multiple component failures. The latter may be common mode failures (such as the loss of power, which will cause several components to fail simultaneously, or the overload on computer capacity), or they may be independent mode failures, when two things go wrong independently, creating a very difficult diagnostic chore (Sanderson and Martagh, 1989). Independent mode failures are extremely rare, as classical reliability analysis will point out, but are not inconceivable, and their rarity itself presents a particular challenge for diagnosis by the operator who does not expect them.

- Existing conditions refer to the conditions that exist when a failure occurs. These will readily affect the ease of recovery and, hence indirectly, the severity of the consequence. For example, failure of radar will be far more severe in a saturated airspace during a peak rush period than in an empty one at 3:00 a.m. We address this issue in discussing failure recovery.
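The rarity of independent mode failures noted under complexity follows from the product rule for independent events. The sketch below is only an illustration: the per-hour failure probabilities and the function names are hypothetical and are not taken from this report, and the common mode case assumes that the shared cause always disables both components.

```python
def joint_independent(p_a: float, p_b: float) -> float:
    """Probability that two independent components both fail in the same interval."""
    return p_a * p_b


def joint_common_mode(p_cause: float) -> float:
    """Probability that two components fail together through one shared cause.

    Assumes (for illustration) that the shared cause, such as a power loss,
    always takes both components down.
    """
    return p_cause


# Hypothetical per-hour probabilities, chosen only to show the orders of magnitude.
P_RADAR_DISPLAY = 1e-4
P_RADIO_CHANNEL = 1e-4
P_FACILITY_POWER = 1e-5

independent = joint_independent(P_RADAR_DISPLAY, P_RADIO_CHANNEL)
common = joint_common_mode(P_FACILITY_POWER)

print(f"independent double failure per hour: {independent:.1e}")
print(f"common mode double failure per hour: {common:.1e}")
```

With these assumed values the independent double failure is far less likely than the common mode one, which is consistent with the point that operators rarely expect it.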

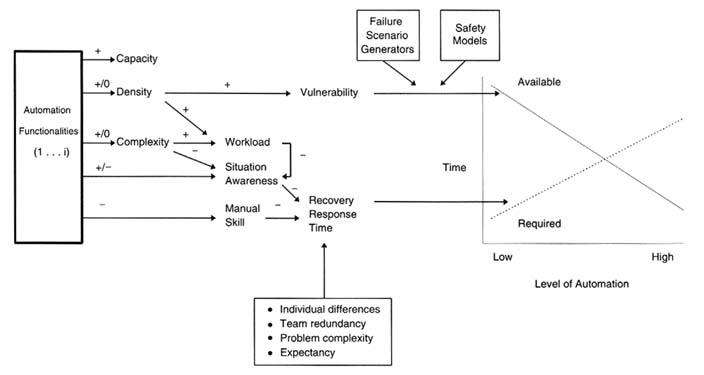

A model framework of air traffic control failure recovery is provided in Figure 1.2. At the left of the figure is presented the vector of possible air traffic control automation functionalities 1-i, discussed in Chapters 3-6 of this report. Because automation is not a single entity, its consequences will vary greatly, depending on what is automated (e.g., information acquisition or control action). Next to the right in the figure is a set of variables, assumed to be influenced by the introduction of automation (the list is not exhaustive and does not incorporate organizational issues, like job satisfaction and morale). Associated with each variable is a sign (or set of signs) indicating the extent to which the introduction of automation is likely to increase or decrease the variable in question. These variables are described in the next sections.

Capacity

One motivation for introducing automation at this time is increasing airspace capacity and traffic flow efficiency. It is therefore likely that any automation tool that is introduced will increase (+) capacity.

Traffic Density

Automation may or may not increase traffic density. For example, automation that can reduce the local bunching of aircraft at certain times and places will serve to increase capacity, leaving overall density unaffected. Therefore, two possible effects (+ and 0) may be associated with density.

Complexity

Automation will probably increase the complexity of the airspace, to the extent that it induces changes in traffic flow that depart from the standard air routes and provides flight trajectories that are more tailored to the capabilities of individual aircraft and less consistent from day to day.

Situation Awareness and Workload

Automation is often assumed to reduce the human operator's situation awareness (Endsley, 1996a). However, this is not a foregone conclusion because of differences in the nature of automation and its relation to workload. For example, as we propose in the framework presented in Figure 1.2, automation of information integration in the cockpit can provide information in a manner that is more readily interpretable and hence may improve situation awareness and human response to system failures. In the context of information integration in air traffic management, four-dimensional flight path projections may serve this purpose. Correspondingly, automation may sometimes serve to reduce workload to manageable levels, such that the controller has more cognitive resources available to maintain situation awareness. This is the reasoning behind the close link with workload presented in Figure 1.2. However, the figure reflects the assumption that the increasing (+) influence of automation on traffic complexity and density will impose a decrease (−) on situation awareness. The effect of increasing traffic complexity on situation awareness will be direct. The effect of traffic density will be mediated by the effect of density on workload. Higher monitoring workload caused by higher traffic density will be likely to degrade situation awareness.

Skill Degradation

There is little doubt that automating a function eventually degrades the manual skills associated with it, given the nearly universal findings of forgetting and skill decay with disuse reported in the behavioral literature (Wickens, 1992), although the magnitude of decline in air traffic control skills with disuse is not well known. The net effect on overall performance, however, is less clear-cut. For example, suppose predictive functions are automated, enabling controllers to more easily envision future conflicts (discussed in Chapter 6). Although the controllers' ability to mentally extrapolate trajectories may eventually decay, their ability to solve conflict problems may actually benefit from this better perceptual information, leading possibly to a net gain in overall control ability.

Recovery Response Time

Linking the two human performance elements, situation awareness change and skill degradation, makes it possible to predict the change in recovery response time, that is, the time required to respond to unexpected failure situations and possibly intervene with manual control skills. It is assumed that a less skilled controller (one with degraded skills), responding appropriately to a situation of which he has less awareness, will do so more slowly. This outcome variable is labeled recovery response time (we acknowledge that it could also incorporate the accuracy, efficiency, or appropriateness of the response). Such a time function is a special case of more general workload models in which workload is defined in terms of the ratio of time required to time available (Kirwan and Ainsworth, 1992). As an example, in a current air traffic control scenario, when a transgression of one aircraft into the path of another on a parallel runway approach occurs (discussed in Chapter 5), the ratio of the time required to respond to the time available has a critical bearing on traffic safety.

A plausible, but hypothetical function relating recovery response time to the level of automation, mediated by the variables in the middle of the figure, is shown by the dashed line in the graph to the right of the figure, increasing as the level of automation increases. It may also be predicted that recovery response time will be greatly modulated by individual skill differences, by the redundant characteristics of the team environment, by the complexity of the problem, and by the degree to which the failure is expected.

At the top of the figure, we see that failures will be generated probabilistically and may be predicted by failure models, or failure scenario generators, which take into account the reliability of the equipment, of the design, of operators in the system, of weather forecasting, and of the robustness (fault tolerance) of the system. When a failure does occur, its effect on system safety will be directly modulated by the vulnerability of the system, which itself should be directly related to the density. If aircraft are more closely spaced, there is far less time available to respond appropriately with a safe solution, and fewer solutions are available. In the extreme case, if aircraft are too closely spaced, no solutions are available. The solid line of the graph reflects the increasing vulnerability of the system, resulting from the density-increasing influences of higher automation levels in terms of the time available to respond to a failure. Thus, the graph overlays the two critical time variables against each other: the time required to ensure safe separation of aircraft, given a degraded air traffic control system (a range that could include best-case, worst-case, median estimates, etc.) and the time available for a controller team to intervene and safely recover from the failure, both as functions of the automation-induced changes in the intervening process variables.

We may plausibly argue that the all important safety consequences of automation are related to the margin by which time available exceeds the recovery response time. There are a number of possible sources of data that may begin to provide some quantitative input to the otherwise qualitative model of influences shown in the figure. For example, work by Odoni et al. (1997) on synthesizing and summarizing models appears to be the best source of information on modeling how capacity and density changes, envisioned by automated products, will influence vulnerability. Work conducted at Sandia Laboratory for the Nuclear Regulatory Commission may prove fruitful in generating possible failure scenarios (Swain and Guttman, 1983). Airspace safety models need to be developed that can predict the likelihood of actual midair collisions, as a function of the likelihood and parameters of near-midair collisions and losses of separation and of decreases in traffic separation. The foundations for such a model could be provided by data such as that shown in Figure 1.3.
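The two time-based quantities used in this framework, the ratio of time required to time available and the margin by which time available exceeds recovery response time, can be made concrete with a small sketch. All numbers below are hypothetical and serve only to mirror the qualitative shape of the two curves described for Figure 1.2 (response time rising and time available falling as automation increases); the function names are likewise illustrative.

```python
def time_pressure_ratio(time_required_s: float, time_available_s: float) -> float:
    """Workload expressed as time required divided by time available."""
    if time_available_s <= 0:
        raise ValueError("time available must be positive")
    return time_required_s / time_available_s


def safety_margin_s(time_available_s: float, recovery_response_time_s: float) -> float:
    """Margin (seconds) by which time available exceeds recovery response time."""
    return time_available_s - recovery_response_time_s


# Hypothetical scenarios: (label, recovery response time, time available), in seconds.
SCENARIOS = [
    ("low automation", 20.0, 90.0),
    ("medium automation", 35.0, 60.0),
    ("high automation", 50.0, 40.0),
]

for label, response_s, available_s in SCENARIOS:
    ratio = time_pressure_ratio(response_s, available_s)
    margin = safety_margin_s(available_s, response_s)
    status = "recoverable" if margin > 0 else "not recoverable in time"
    print(f"{label}: ratio={ratio:.2f}, margin={margin:+.0f} s, {status}")
```

A ratio above 1 and a negative margin describe the same condition: the controller team would need more time than the traffic situation allows.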

Turning to the human component, promising developments are taking place under the auspices of NASA's advanced air transportation technology program, in terms of developing models of pilots' response time to conflict situations (Corker et al., 1997), and pilots' generation of errors when working with automation (Riley et al., 1996). However, models are needed that are sensitive to loss of situation awareness induced by removing operators from the control loop (as well as the compensatory gains that can be achieved by appropriate workload reductions and better display of information). The data of Endsley and Kiris (1995), as well as those provided by Endsley and Rodgers (1996) in an analysis of operational errors in air traffic control, provide prototypes of the kinds of data collection necessary to begin to validate this critical relation.

FIGURE 1.3 Air carrier near-midair collisions, 1975-1987. Source: Office of Technology Assessment (1988).

Rose (1989) has provided one good model of skill degradation that occurs with disuse, which is an important starting point for understanding the nature of skill loss and the frequency of training (or human-in-the-loop) interventions that should be imposed to retain skill levels. Finally, air traffic control subject-matter experts must work with behavioral scientists to begin to model individual and team response times to emergency (i.e., unexpected) situations (Wickens and Huey, 1993). The models should include data collected in studies of human response time to low-probability emergencies. Policy makers should be made aware that choosing median response times to model these situations can have very different implications from those based on worst-case (longest) response times (Riley et al., 1996); these kinds of modeling choices must be carefully made and justified.

HUMAN PERFORMANCE

Understanding whether responses to failures in future air traffic management systems can be effectively managed requires an examination of human performance, both individual and team, in relation to automation failures and anomalies. At the same time, an understanding is required of how controllers and other human operators use automation under both normal and emergency conditions. The human performance aspects of interaction with automated systems were considered in some detail in the concluding chapter of the Phase I report. Here we review and summarize the major features of that analysis with reference to current and future air traffic management systems.

Several studies have shown that well-designed automation can enhance human operator and hence system performance. Examples in air traffic management include automated handoffs between airspace sectors and display aids for aircraft sequencing at airports with converging runways. At the same time, many observations of the performance of automation in real systems have identified a series of problems with human interaction with automation, with potentially serious consequences for system safety. These observations have been bolstered by a growing body of research that includes laboratory experiments, simulator studies, field studies, and conceptual analyses (Bainbridge, 1983; Billings, 1996a, 1996b; Parasuraman and Mouloua, 1996; Parasuraman and Riley, 1997; Sarter and Woods, 1995b; Wickens, 1994; Wiener, 1988; Wiener and Curry, 1980). Many of these, although not all, relate to human response when automation fails, either through failure of the system itself or failure to cope with conditions and inputs. Automation problems also have arisen, not as a result of specific failures of automation per se, but because of the behavior of automation in the larger, more complex, distributed human-machine system into which the device is introduced (Woods, 1996). Here we consider specific categories of human performance limitations that surface when humans interact with automation.

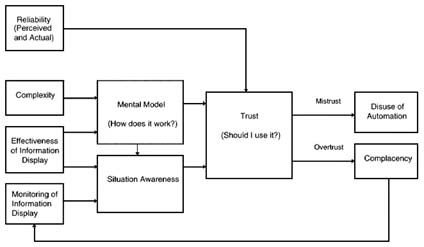

FIGURE 1.4 Framework for examining human performance issues.

Figure 1.4 presents a general framework for examining human performance issues discussed in this section by illustrating relationships between three major elements of human interaction with dynamic systems (trust, situation awareness, and mental models) as well as factors that can affect these elements. It is not intended to represent a model of the cognitive processes that underlie the elements illustrated. Generally speaking, the controller's mental model of an automated function and the system in which it is embedded reflects his or her understanding of the processes by which automation carries out its functions ("How does it work?"). The controller's mental model is affected by the complexity of the system and by the effectiveness with which information about system functioning, status, and performance is displayed. The controller's mental model affects his or her awareness of the current and predicted state of the situation that is being monitored. Situation awareness is also affected by the degree of effectiveness of both the information display and the controller's monitoring strategy.

A key driver of the human operator's trust of the automation ("Should I use it?") is the reliability or unreliability of the system being monitored. The controller's perception of reliability or unreliability may differ from the actual reliability of the system. The degree of correspondence between actual and perceived reliability may change over time; the software in new systems is often complex, not completely tested, and, therefore, may fail or degrade in ways that may surprise the controller. The controller's trust is also affected by expectations that are based on the controller's mental model and by the controller's situation awareness. Mistrust can lead to disuse of the automation. Overtrust can lead to complacency, which can lead, in turn, to poor monitoring by the controller. Poor monitoring will have a negative effect on the controller's situation awareness.

Trust

Trust is an important factor in the use of automated systems by human controllers (Lee and Moray, 1992; Muir, 1988; Parasuraman and Riley, 1997; Sheridan, 1988). Although the term trust in common usage has broader emotional connotations, we restrict its treatment here to human performance. For example, automation that is highly but not perfectly reliable may be trusted to the point that the controller believes that it is no longer necessary to monitor its behavior, so that if and when a failure occurs, it is not detected. Conversely, an automated tool that is highly accurate and useful may nevertheless not be used if the controller believes that it is untrustworthy.

Attributes of Trust

Trust has multiple determinants and varies over time. Clearly, one factor influencing trust is automation reliability, but other factors are also important. Below is a listing of the characteristics of the most relevant determining factors:

- Reliability refers to the repeated, consistent functioning of automation. It should also be noted that some automation technology may be reliably harmful, always performing as it was designed but designed poorly in terms of human or other factors; see the discussion of designer and management errors later in this chapter.

- Robustness of the automation refers to the demonstrated or promised ability to perform under a variety of circumstances. It should be noted that the automation may be able to do a variety of things, some of which need not or should not be done.

- Familiarity means that the system employs procedures, terms, and cultural norms that are familiar, friendly, and natural to the trusting person. But familiarity may lead the human operator to certain pitfalls.

- Understandability refers to the sense that the human supervisor or observer can form a mental model and predict future system behavior. But ease of understanding parts of an automated system may lead to overconfidence in the controller's understanding of other aspects.

- Explication of intention means that the system explicitly displays or says that it will act in a particular way, as contrasted to its future actions having to be predicted from a model. But knowing the computer's intention may also create a sense of overconfidence and a willingness to outwit the system and take inappropriate chances.

- Usefulness refers to the utility of the system to the trusting person in a formal theoretical sense. However, automation may be useful, but for unsafe purposes.

- Dependence of the trusting person on the automation could be measured either by observing the controller's consistency of use, or by using a subjective rating scale, or both. But overdependence may be fatal if the system fails.

Overtrust and Complacency in Failure Detection

If automation works correctly most of the time, the circumstances in which the human will need to intervene because automation has failed are few in number. We can liken this process to a vigilance monitoring task with exceedingly rare events (Parasuraman, 1987). Many research studies have shown that, if events are rare, human monitors will relax their threshold for event detection, causing the infrequent events that do occur to be more likely to be missed, or at least delayed in detection (Parasuraman, 1986; Warm, 1984). Thus, we may imagine a scenario in which a highly reliable automated conflict probe carries out its task so accurately that a controller fails to effectively oversee its operations. If an automation failure does occur, the conflict may be missed by the controller or delayed in its detection. Parasuraman et al. (1993) showed that, when automation of a task is highly and consistently reliable, detection of system failures is poorer than when the same task is performed manually. This "complacency" effect is greatest when the controller is engaged in multiple tasks and less apparent when only a single task has to be performed (Parasuraman et al., 1993; Thackray and Touchstone, 1989). One of the ironies of complacency in detection is that, the more reliable the automation happens to be, the rarer "events" will be and hence the more likely the human monitor will be to fail to detect the failure (Bainbridge, 1983).

Numerous aviation incidents over the past two decades have involved problems of monitoring of automated systems as one of, if not the major cause of, the incident. Although poor monitoring can have multiple determinants, operator overreliance on automation to make decisions and carry out actions is thought to be a contributing factor. Even skilled subject-matter experts sometimes have misplaced trust in diagnostic expert systems (Will, 1991) and other forms of computer technology (Weick, 1988). Analyses of ASRS (aviation safety reporting system) reports have provided evidence of monitoring failures linked to excessive trust in, or overreliance on, automated systems (Mosier et al., 1994; Singh et al., 1993). Although most of these systems have involved flight deck rather than air traffic management automation, it is worthwhile noting that such problems may also arise in air traffic management as automation increases in level and complexity.

Many automated devices are equipped with self-monitoring software, such that discrete and attention-getting alerts will call attention to system failures. However, it is also true that some systems fail "gracefully" in ways such that the initial conditions are not easily detectable. This may characterize, for example, the gradual loss of precision of a sensor or of an automated system that uses sensor information for control, which becomes slowly more inaccurate because of the hidden failure of the sensor. An accident involving a cruise ship that ran aground off Nantucket Island represents an example of such a problem. The navigational system (based on the global positioning system) failed "silently" because of a sensor problem and slowly took the ship off the intended course. The ship's crew did not monitor other sources of position information that would have indicated that they had drifted off course (National Transportation Safety Board, 1997a).

Overtrust and Complacency in Situation Awareness

There is by now fairly compelling evidence that people are less aware of the changes of state made by other agents than when they make those changes themselves. Such a conclusion draws from basic research (Slamecka and Graf, 1978), applied laboratory simulations (Endsley and Kiris, 1995; Sarter and Woods, 1995a), and interpretations of aircraft accidents (Dornheim, 1995; Sarter and Woods, 1995b; Strauch, 1997).

On the flight deck, then, accident and incident analyses have revealed cases in which higher automation levels have led to a loss in situation awareness, which in turn has led to pilot error. In air traffic management, the connection is slightly less direct. However, recent research by Isaac (1997) has revealed the causal link between automation (automated updating of flight strips) and loss of situation awareness in response to radar failure. Endsley and Rodgers (1996), through examination of operational error data preserved on the SATORI system, have linked operational errors to the loss of situation awareness.

It is important to emphasize that simply providing direct displays of what the automation is doing may be necessary, but it is not sufficient to preserve adequate levels of situation awareness, which would enable rapid and effective response to system failure. Execution of active choices seems to be required to facilitate memory for the system state. There is clearly much truth in the ancient Chinese proverb: "Inform me and I forget but show me how to do and I remember."

Mistrust

Both complacency and reduced situation awareness concern controllers who trust automation more than they should. Operators may also trust automation less than they should. This may occur first because of a general tendency of operators to want to "do things the way we always do." For example, many controllers were initially distrustful of automatic handoffs, but as their workload reducing benefits were better appreciated over time, the new automation was accepted. This problem can be relatively easily remedied through advance briefing and subsequent training procedures.

More problematic is when distrust is a result of an experience with unreliable automation. Following the introduction of both the ground proximity warning system and the traffic alert and collision avoidance system (TCAS) in the cockpit, pilots experienced unacceptable numbers of false alarms, the result of bugs in the system that had not been fully worked out (Klass, 1997). In both cases, there was an initial and potentially dangerous tendency to trust the system less than would be warranted, perhaps ignoring legitimate collision alarms.

Mistrust of automated warning systems can become prevalent because of the false alarm problem (Parasuraman and Riley, 1997). What should the false alarm rate be and how can it be reduced? Technologies exist for system engineers to design sensitive warning systems that are highly accurate in detecting hazardous conditions (wake vortex modeling, ground proximity, wind shear, collision course, etc.). These systems are set with a decision threshold (Swets, 1992) that minimizes the chance of a missed warning while keeping the device's false alarm rate below some low value. Because the cost of a missed event (e.g., a serious loss of separation or a collision) is phenomenally high, air traffic management alerting systems such as the conflict alert are set with decision thresholds that minimize misses.

Setting the decision threshold for a particular device's false alarm rate may be insufficient by itself for ensuring high alarm reliability and controller trust in the system (Getty et al., 1995; Parasuraman et al., 1997). Alarm reliability is also determined by the base rate, or a priori probability, of the hazardous event. If the base rate is low, as it often is for many real events, then the posterior odds of a true alarm can be quite low even for very sensitive warning systems. Parasuraman et al. (1997) carried out a signal detection theory/Bayesian analysis that illustrates the problem and provides guidelines for alarm design. For example, the decision threshold can be set so that a warning system can detect hazardous conditions with a near-perfect hit rate of .999 (i.e., that it misses only 1 of every 1,000 hazardous events) while having a relatively low false alarm rate of .059. Nevertheless, application of Bayes' theorem shows that the controller could find that the posterior probability (or posterior odds) of a true alarm with such a system can be quite low. When the a priori probability (base rate) is low, say .001, only 1 in 59 alarms that the system emits represents a true hazardous condition (posterior probability = .0168).
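The Bayesian argument above can be reproduced in a few lines. The sketch below applies Bayes' theorem to the hit rate, false alarm rate, and base rate quoted in the text; the function name is illustrative and is not drawn from Parasuraman et al. (1997).

```python
def posterior_true_alarm(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """P(hazard | alarm) from Bayes' theorem.

    base_rate        -- a priori probability of the hazardous event
    hit_rate         -- P(alarm | hazard)
    false_alarm_rate -- P(alarm | no hazard)
    """
    true_alarms = base_rate * hit_rate
    false_alarms = (1.0 - base_rate) * false_alarm_rate
    return true_alarms / (true_alarms + false_alarms)


# Values quoted in the text.
posterior = posterior_true_alarm(base_rate=0.001, hit_rate=0.999, false_alarm_rate=0.059)
odds = posterior / (1.0 - posterior)

print(f"posterior probability of a true alarm: {posterior:.4f}")
print(f"posterior odds: about 1 to {1.0 / odds:.0f}")
```

With these inputs the posterior probability comes out at roughly .017 and the posterior odds at about 1 to 59, close to the figures quoted above. Raising the base rate in the call shows how quickly alarm reliability improves when the hazardous event is less rare.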

Consistently true alarm response occurs only when the a priori probability of the hazardous event is relatively high. There is no guarantee that this will be the case in many real systems. Thus, designers of automated alerting systems must take into account not only the decision threshold at which these systems are set (Kuchar and Hansman, 1995; Swets, 1992) but also the a priori probabilities of the condition to be detected (Parasuraman et al., 1997). Only then will operators tend to trust and use the system. In addition, a possible effective strategy to avoid operator mistrust is to inform users of the inevitable occurrence of device false alarms when base rates are low.

Finally, with other types of automation, the consequence of failure-induced mistrust may be less severe. For example, a pilot who does not trust the autopilot may simply fly the aircraft manually more frequently. The primary cost might then simply be an unnecessary increase of workload or lack of precision on the flight path. Similarly, a controller who finds the conflict probe automation untrustworthy may resolve a traffic conflict successfully using manual procedures, but at the cost of extra mental workload. In either case, the system performance-enhancing intention of the automation is defeated.

Calibration of Trust

As the preceding shows, either excessive trust of or excessive mistrust of automation on the part of controllers can lead to problems. The former can lead to complacency and reduced situation awareness, the latter to disuse or under-utilization. This suggests the need for the calibration of trust to an appropriate level between these two extremes. Lee and Moray (1992, 1994) have shown that such optimization requires assessment of the human operator's confidence in his or her manual performance skills. Appropriate calibration of trust also requires that the controller has a good understanding of the characteristics and behavior of the automation. This understanding is captured by the controller's mental model of the automation.

Mental Models

A mental model is an abstract representation of the functional relations that are carried out by an automated system or machine. The model reflects the operator's understanding of the system as developed through past experience (Moray, 1997). A mental model is also thought to be the basis by which the operator predicts and expects the future behavior of the system. A mental model can also refer to a conscious Gedanken (thought) experiment that is "run" in a mental simulation of some (typically physical) relation between conceptually identifiable variables. This can be done to test "what would happen if" (in a hypothetical process) or "what will happen" (in an observed ongoing actual process). It is implied that a person can "use" the mental model to predict some outputs, given some inputs. Given two aircraft having specified positions and velocities, a controller presumably has a mental model capable of predicting whether they will collide.
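The input-output character of such a prediction can be illustrated with a closest-point-of-approach calculation. This is only a sketch of the kind of computation a mental model approximates, not a description of how controllers actually reason: it assumes flat two-dimensional geometry, straight-line flight at constant velocity, and hypothetical positions and speeds.

```python
import math

Vector = tuple[float, float]


def closest_approach(p1: Vector, v1: Vector, p2: Vector, v2: Vector) -> tuple[float, float]:
    """Return (time, distance) of closest approach for two aircraft.

    Positions are in nautical miles and velocities in knots, so time is in hours.
    Both aircraft are assumed to hold a constant velocity; only future times
    (t >= 0) are considered.
    """
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]   # relative position
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]   # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0                              # identical velocities: separation never changes
    else:
        t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


# Hypothetical geometry: one aircraft eastbound, one northbound, converging on the same point.
t_hours, miss_nm = closest_approach((0.0, 0.0), (480.0, 0.0), (40.0, -40.0), (0.0, 480.0))
print(f"closest approach in {t_hours * 60:.1f} min at {miss_nm:.1f} nmi")
```

In this assumed geometry the two tracks meet after five minutes with no separation, which is the "will they collide" output the paragraph refers to.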

When automation is introduced into real system contexts, experience has shown that in many cases it fails. Often it fails or is perceived to have failed because operators do not understand sufficiently well how the system really works (their mental models are wrong or incomplete), and they feel that to be safe they should continue with many of the steps previously used with the old system. But this gets them into trouble. An operator who does not understand the new system is likely to feel safe doing things the old way and does not do what is expected, thus causing failure downstream or at least jeopardizing proper system functioning.

The solution can be: (a) better training, particularly at the cognitive level of understanding the algorithms and logic underlying the automation, rather than just the operating skill level, and including failure possibilities and (b) having operators participate in the decision to acquire the automation, as well as its installation and test, so that they "own it" (share mental models and other assumptions with the designers and managers) and are not alienated by its introduction.

One of the causes of mistrust of automation results when operators do not understand the basis of the automation algorithms. A poor mental model of the automation may have the consequence that the operator sees the automation acting in a way different from what would be expected, or perhaps even doing things that were not expected at all. Mental models may also be shared among teams of operators, as we discuss below.

Mode Errors

A large body of research has now demonstrated that, when a human operator's mental model of automation does not match its actual functionality and behavior, new error forms emerge. New error forms have been most well documented and studied with respect to flight deck automation, and the flight management system in particular. Several studies have shown that experienced pilots have an incomplete mental model of the flight management system, particularly of its behavior in unusual circumstances (Sarter and Woods, 1995b). This has led to a number of incidents in which pilots took erroneous actions based on their belief that the flight management system was in one mode, whereas it was in fact in another (Vakil et al., 1995). Such confusions have been labeled mode errors (Reason, 1990), in which an operator fails to realize the mode setting of an automated device. In this case, the operator may perform an action that is appropriate for a different mode (and observe an unexpected system response, or no response at all). The crash of an Airbus 320 aircraft at Strasbourg, France, occurred when the crew apparently confused the vertical speed and flight path angle modes (Ministère de l'équipement, des transports et du tourisme, 1993). Another form of mode error occurs when the system itself responds to external inputs in a manner that is unexpected by the operator.

Because air traffic management automation has been limited in scope to date, examples of mode errors or automation surprises have not frequently been reported, although Sarter and Woods (1997) have reported mode errors in the air traffic control voice switching and control system (VSCS). Because new and proposed air traffic management automation systems will increase in complexity, authority, and autonomy in the future, it is worthwhile to keep in mind the lessons regarding mode errors that have been learned from studies of cockpit automation.

Skill Degradation

Given that automation is reliable, understandable (in terms of a mental model), and appropriately calibrated in trust, controllers will be able to use air traffic management automated systems effectively. However, effective automation raises the additional issue of possible skill degradation, which was considered earlier in this chapter in the context of human response to system failure. The question arises: even if automation that addresses all the human performance concerns raised previously is designed and fielded, will safety be affected because the controller skills used before automation was introduced will have degraded?

There is no doubt that full automation of a function eventually will lead to manual skill decay because of forgetting and lack of recent practice (Rose, 1989; Wickens, 1992). An engineer who has to trade in his electronic calculator for a slide rule will undoubtedly find it tough going when trying to carry out a complex calculation. The question is, to what extent does this phenomenon apply to air traffic management automation, and if so, what are its safety consequences? To better understand this issue, it is instructive to distinguish between situations in which any skill degradation from disuse may or may not have consequences for system safety.

With the level of automation in the current air traffic management system, skill loss is unlikely to generate safety concerns. Most air traffic management automation to date has involved automation of input data functions (Hopkin, 1995). Controllers may be less skilled in such data acquisition procedures than they once were, but the loss of this skill does not adversely affect the system. In fact, because of their workload reducing characteristics, systems such as automated handoffs and better integrated radar pictures have improved efficiency and safety.

In contrast, however, safety concerns should be considered for future air traffic management automation, because these systems are likely to involve automation of decision making and active control functions. For example, the CTAS (center TRACON automation system) that is currently undergoing field trials will provide controllers with resolution advisories (discussed in Chapter 6). If controllers find these advisories to be effective in controlling the airspace and come to rely on them, their own skill in resolving aircraft conflicts may become degraded. Design functionality should act as a countermeasure and not require the use of degraded skills. Research is urgently needed to examine the issue of skill degradation for automation of high level cognitive functions.

If evidence of skill degradation is found, what countermeasures are available? Intermittent manual performance of the automated task is one possibility. Parasuraman, Mouloua, and Molloy (1996) showed that a temporary return to manual control of an automated task benefited subsequent monitoring of the task, even when the task was returned to automation control. In recurrent check rides with automated aircraft, pilots are required to demonstrate hand flying capabilities. Given that these monitoring benefits reflect enhanced attention to and awareness of the task, the results suggest that they would also be manifested in improved retention of manual skills. Rose (1989) also proposed a model of the skill degradation that occurs with disuse and provided guidelines for the frequency of training (or human-in-the-loop) interventions that should be imposed to retain skill levels. An alternative possibility is to pursue design alternatives that will not rely on those skills that may be degraded, given a system failure.
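As a minimal sketch of the first countermeasure, intermittent manual performance can be thought of as a schedule in which an otherwise automated task is periodically returned to the operator. The function name, the block structure, and the interval parameter below are hypothetical; they are not taken from Parasuraman, Mouloua, and Molloy (1996) or from the guidelines of Rose (1989), and an appropriate interval would have to be established empirically.

```python
def allocation_schedule(total_blocks: int, manual_every: int) -> list[str]:
    """Label each work block 'auto' or 'manual'.

    Every `manual_every`-th block is returned to manual control; all other
    blocks are automated. Both parameters are illustrative assumptions.
    """
    if manual_every < 1:
        raise ValueError("manual_every must be at least 1")
    return [
        "manual" if (block + 1) % manual_every == 0 else "auto"
        for block in range(total_blocks)
    ]


if __name__ == "__main__":
    # Six blocks with a manual block inserted after every two automated blocks.
    print(allocation_schedule(6, 3))
```

A fixed interval is the simplest possible policy; a schedule derived from a model of skill decay would replace the constant `manual_every` with an interval that depends on the task and on the time since it was last practiced.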

Cognitive Skills Needed

Automation may affect system performance not only because controller skills may degrade, but because new skills may be required, ones that controllers may not be adequately trained for. Do future air traffic management automated systems require different cognitive skills on the part of controllers for the maintenance of efficiency and safety?

In the current system, the primary job of the controller is to ensure safe separation among the aircraft in his or her sector, as efficiently as possible. To accomplish this job, the controller uses weather reports, voice communication with pilots and controllers, flight strips describing the history and projected future of each flight, and a plan view (radar) display that provides data on the current altitude, speed, destination, and track of all aircraft in the sector. According to Ammerman et al. (1987), there are nine cognitive-perceptual ability categories needed by controllers in the current system: higher-order intellectual factors of spatial, verbal, and numerical reasoning; perceptual speed factors of coding and selective attention; short- and long-term memory; time sharing; and manual dexterity.

As proposed automation is introduced, it is anticipated that the job of the controller will shift from tactical control among pairs of aircraft in one sector to strategic control of the flow of aircraft across multiple sectors (Della Rocco et al., 1991). Current development and testing efforts suggest that the automation will perform such functions as identifying potential conflicts 20 minutes or more before they occur, automatically sequencing aircraft for arrival at airports, and providing electronic communication of data between the aircraft and the ground using data link. Several displays may be involved and much of the data will be presented in a graphic format (Eißfeldt, 1991). These prospective aids should make it possible for controllers in one sector to anticipate conflicts down the line and make adjustments, thus solving potential problems long before they occur.

Essentially, as automation is introduced, it is expected that there will be less voice communication, fewer tactical problems needing the controller's attention, a shift from textual to graphic information, and an extended time frame for making decisions. However, it is also expected that, in severe weather conditions, emergency situations, or instances of automation failure, the controller will be able to take over and manually separate traffic.

Manning and Broach (1992) asked controllers who had reviewed operational requirements for future automation to assess the cognitive skills and abilities needed. These controllers agreed that coding, defined as the ability to translate and interpret data, would be extremely important as the controller becomes involved in strategic conflict resolution. Numerical reasoning was rated as less relevant in future systems, because it was assumed that the displays would be graphic and the numerical computations would be accomplished by the equipment. Skills and abilities related to verbal and spatial reasoning and to selective attention received mixed ratings, although all agreed that some level of these skills and abilities would be needed, particularly when the controller would be asked to assume control from the automation.

The general conclusion from the work of Manning and Broach (1992), as well as from analyses of proposed automation in AERA 2 and AERA 3 (Reierson et al., 1990), is that controllers will continue to need the same cognitive skills and abilities as they do in today's system, but the relative importance of these skills and abilities will change as automation is introduced. The controller in a more highly automated system may need more cognitive skills and abilities. That is, there will be the requirement for more strategic planning, for understanding the automation and monitoring its performance, and for stepping in and assuming manual control as needed. An important concern, echoed throughout this volume, is the need to maintain skills and abilities in the critical manual (as opposed to supervisory) control functions that may be performed infrequently. Dana Broach (personal communication, Federal Aviation Administration Civil Aeromedical Institute, 1997) has indicated that the Federal Aviation Administration is currently developing a methodology to be used in more precisely defining the cognitive tasks and related skill and ability requirements as various pieces of automation are introduced. Once in place, this methodology should be central to identifying possible shifts in both establishing selection requirements and designing training programs.

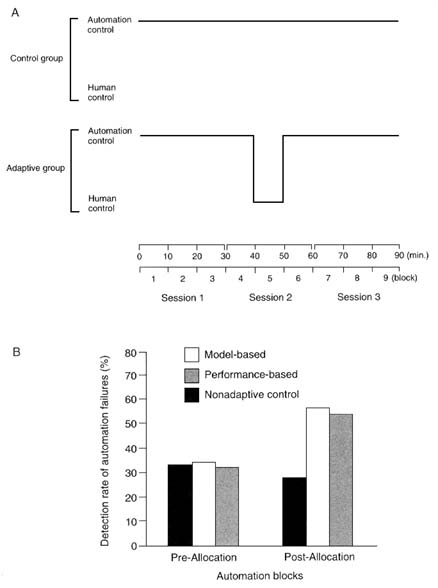

ADAPTIVE AUTOMATION

The human performance vulnerabilities that have been discussed thus far may be characteristic of fixed or static automation. For example, difficulties in situation awareness, monitoring, maintenance of manual skills, etc., may arise because with static automation the human operator is excluded from exercising these functions for long periods of time. If an automated system always carries out a high level function, the human operator will have little incentive to be aware of or monitor the inputs to the function and may consequently not be able to execute the function well manually if he or she is required to do so at some time in the future. Given these possibilities, it is worthwhile considering the performance characteristics of an alternative approach to automation: adaptive automation, in which the allocation of function between humans and computer systems is flexible rather than fixed.

Long-term fixed (or nonadaptive) automation will generally not be problematic for data-gathering and data integration functions in air traffic management because they support but do not replace the controller's decision making activities (Hopkin, 1995). Also, fixed automation is necessary, by definition, for functions that cannot be carried out efficiently or in a timely manner by the human operator, as in certain nuclear power plant operations (Sheridan, 1992). Aside from these two cases, however, problems could arise if automation of controller decision making functions, which Hopkin (1995) calls computer assistance, is implemented in such a way that the computer always carries out decisions A and B, and the controller deals with all other decisions. Even this may not be problematic if computer decision making is 100 percent reliable, for then there is little reason for the controller to monitor the computer's inputs, be aware of the details of the traffic pattern that led to the decision, or even, following several years of experience with such a system, know how to carry out that decision manually. As noted in previous sections, however, software reliability for decision making and planning functions is not ensured, so that long-term, fixed automation of such functions could expose the system to human performance vulnerabilities.

Under adaptive automation, the division of labor between human operator and computer systems is flexible rather than fixed. Sometimes a given function may be executed by the human, at other times by automation, and at still others by both the human and the computer. Adaptive automation may involve either task allocation, in which case a given task is performed either by the human or the automation in its entirety, or partitioning, in which case the task is divided into subtasks, some of which are performed by the human and others by the automation. Task allocation or partitioning may be carried out by an intelligent system on the basis of a model of the operator and of the tasks that must be performed (Rouse, 1988). This defines adaptive automation or adaptive aiding. For example, a workload inference algorithm could be used to allocate tasks to the human or to automation so as to keep operator workload within a narrow range (Hancock and Chignell, 1989; Wickens, 1992). Figure 1.5 provides a schematic of how this could be achieved within a closed-loop adaptive system (Wickens, 1992).
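The closed-loop idea can be illustrated with a short simulation. This is a sketch under stated assumptions, not the algorithm of any of the cited authors: workload is represented as a number between 0 and 1, the thresholds `low` and `high` define the target range, and automating the task is assumed to reduce workload by a fixed `relief` amount. All names and values are hypothetical.

```python
def allocate(workload: float, automated: bool,
             low: float = 0.4, high: float = 0.7) -> bool:
    """Decide whether the task is automated during the next interval.

    Above `high` the task is handed to automation; below `low` it is
    returned to the human; inside the band the allocation is unchanged.
    """
    if workload > high:
        return True
    if workload < low:
        return False
    return automated


def simulate(task_demand: list[float], relief: float = 0.3) -> list[tuple[float, bool]]:
    """Run the loop: inferred workload drives allocation, which feeds back on workload."""
    automated = False
    log = []
    for demand in task_demand:
        # Assumed effect of automation: it removes a fixed share of the demand.
        workload = demand - (relief if automated else 0.0)
        automated = allocate(workload, automated)
        log.append((round(workload, 2), automated))
    return log


if __name__ == "__main__":
    for workload, automated in simulate([0.5, 0.8, 0.9, 0.6, 0.5]):
        print(f"workload={workload:.2f} automated_next={automated}")
```

Leaving the allocation unchanged inside the band is a design choice that avoids rapid switching between manual and automated control when workload hovers near a single threshold; frequent, unannounced switching would itself be a source of the mode confusion discussed earlier in this chapter.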

An alternative to having an intelligent system invoke changes in task allocation or partitioning is to leave this responsibility to the human operator. This approach defines adaptable automation (Billings and Woods, 1994; Hilburn, 1996). Except where noted, the more generic term adaptive is used here to refer to both cases. Nevertheless, there are significant and fundamental differences between adaptive (machine-directed) and adaptable (human-centered) systems in terms of such criteria as feasibility, ease of communication, user acceptance, etc. Billings and Woods (1994) have also argued that systems with adaptive automation may be more, not less, susceptible to human performance vulnerabilities if they are implemented in such a way that operators are unaware of the states and state changes of the adaptive system. They advocate adaptable automation, in which users can tailor the level and type of automation according to their current needs. Depending on the function that is automated and situation-specific factors (e.g., time pressure, risk, etc.), either adaptive or adaptable automation may be appropriate. Provision of feedback about high level states of the system at any point in time is a design principle that should be followed for both approaches to automation. These and other parameters of adaptable automation should be examined with respect to operational concepts of air traffic management.

FIGURE 1.5 Closed-loop adaptive system.

In theory, adaptive systems may be less vulnerable to some of the human performance problems associated with static automation (Hancock and Chignell, 1989; Parasuraman et al., 1990; Scerbo, 1996; Wickens, 1992; but see Billings and Woods, 1994). The research that has been done to date suggests that there may be both benefits and costs of adaptive automation. Benefits have been reported with respect to one human performance vulnerability, monitoring. For example, a task may be automated for long periods of time with no human intervention. Under such conditions of static automation, operator detection of automation malfunctions can be inefficient if the human operator is engaged in other manual tasks (Molloy and Parasuraman, 1996; Parasuraman et al., 1993). The problem does not go away, and may even be exacerbated, with highly reliable automation (Parasuraman, Mouloua, Molloy, and Hilburn, 1996).